Table of Contents

guest 2025-07-01 |

GPF Creator

GPF Importer

PRM Conductor

Expert Review

Sequence Import Configure

PRM Conductor Walkthrough

Absolute Quantitation - PQ500

Neat Unscheduled Runs

Plasma Wide Window Scheduled

Plasma Narrow Windows Scheduled

Label Free - E. Coli

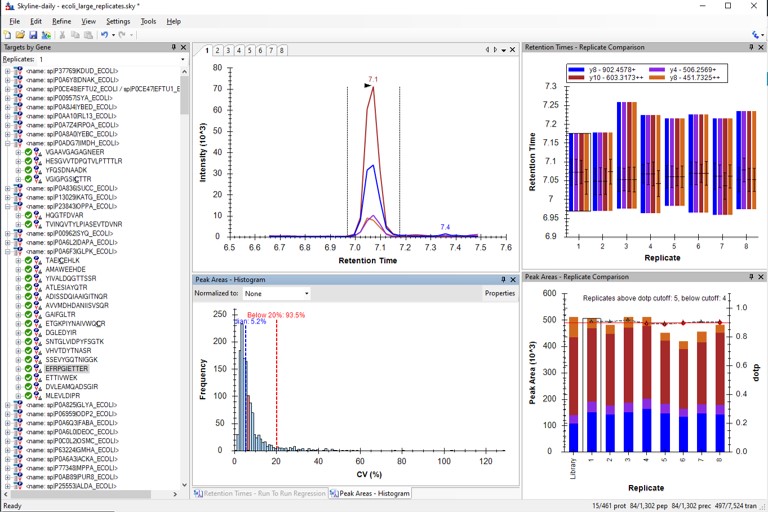

Validation with Subset Replicates

Analysis of Final Assay Replicates

Known Issues

Stack Overflow when Exporting Methods

Hypothesis-driven discovery with Multiple Target Monitoring

Step 1: Pilot experiments with gas phase fractionation

Step 2: Building method with multiple target monitoring

Expert Review Walkthrough

Absolute Quantitation - PQ500

Label Free - E. Coli

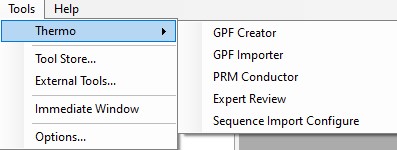

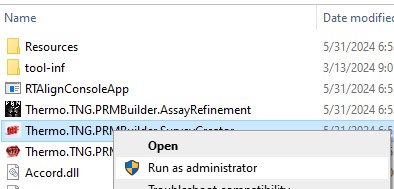

Thermo External Tools Overview

Introduction

As of mid-2025, the set of Thermo external tools downloaded under the name PRM Conductor includes 5 different tools:

- GPF Creator

- a small application that automates the creation of multiple instrument method DIA files to span a precursor range for a gas-phase-fractionation experiment

- GPF Importer

- a small application that automates the import of GPF or any peptide search data from Proteome Discoverer. This program imports the data with defaults that are appropriate for the Stellar MS, but the same result can be achieved through the Skyline File / Import / Peptide Search wizard.

- PRM Conductor

- an application that aids in the creation of targeted MS methods. This program filters "bad" transitions, and selects "good" peptides via a set of criteria, and helps the user to create one or more instrument methods that will be acquired with at least a required number of points per peak.

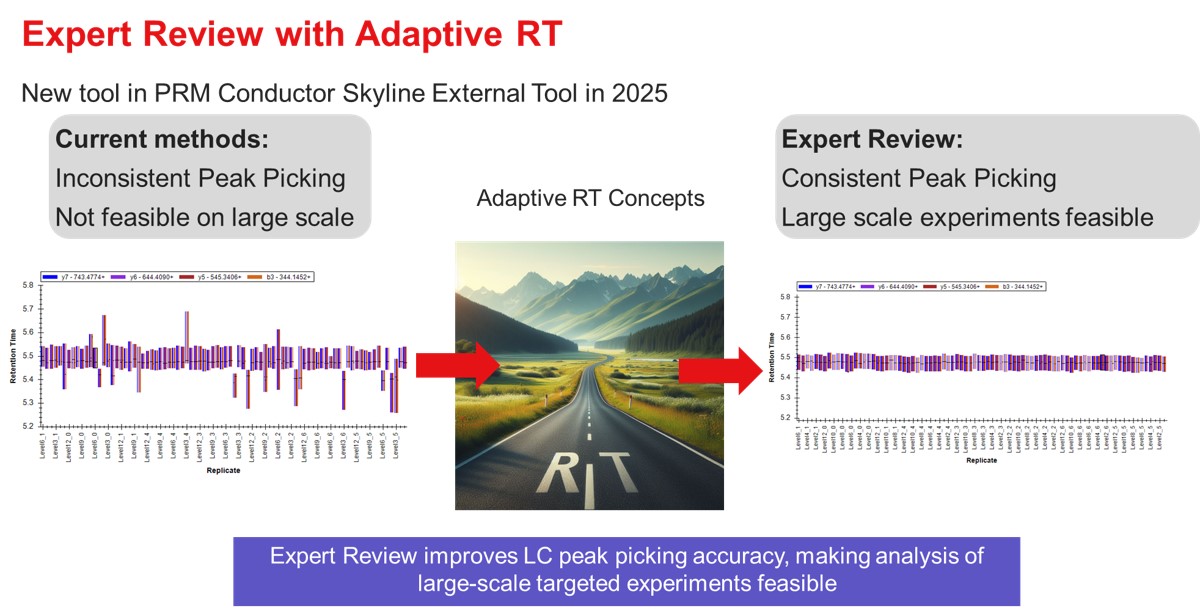

- Expert Review

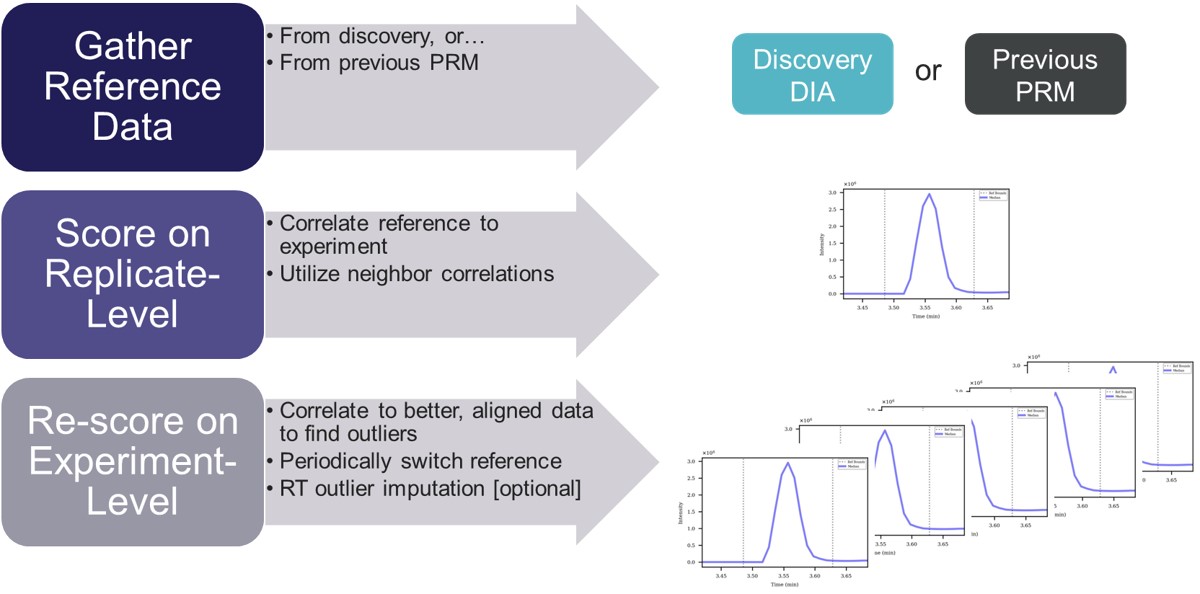

- an application that helps achieve consistent peak integration boundaries, through the use of replicate-level and experiment level correlations to reference data of various types.

- Sequence Import Configure

- a tiny application that saves a file on the instrument computer, which allows .raw files to be imported automatically into a Skyline file once their acquisition is finished

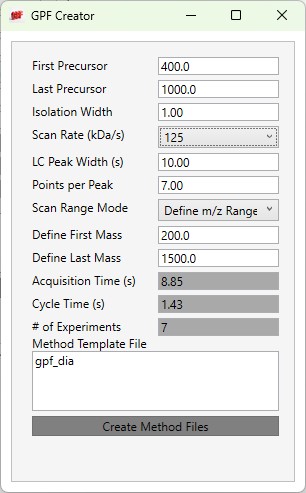

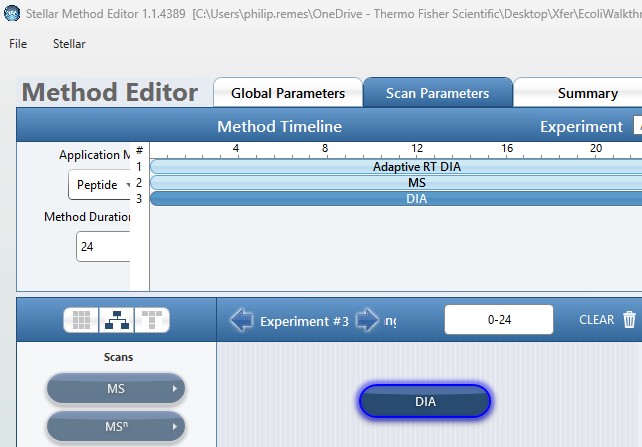

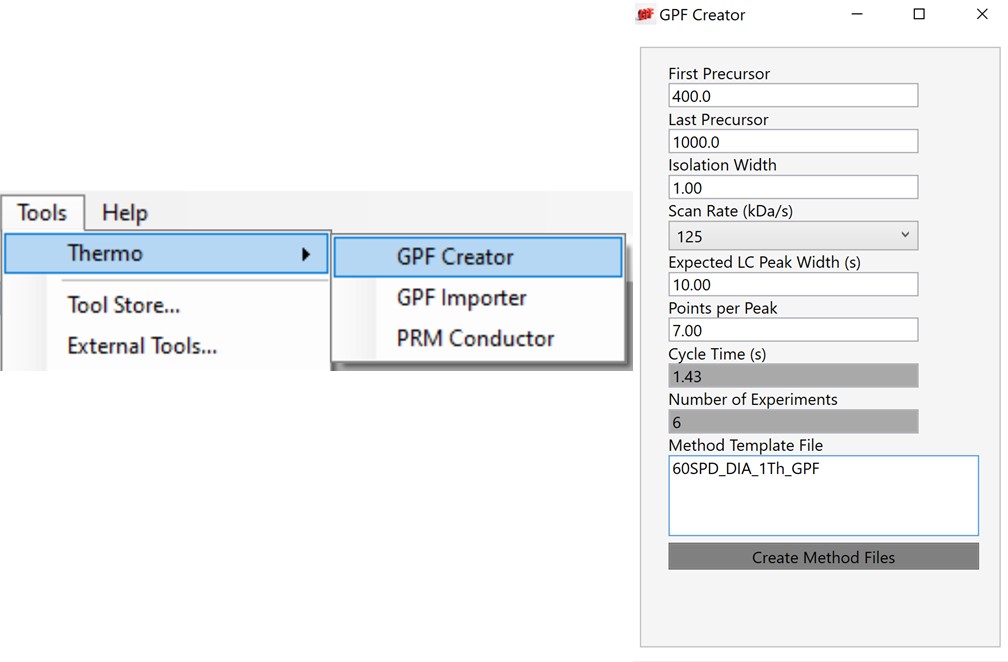

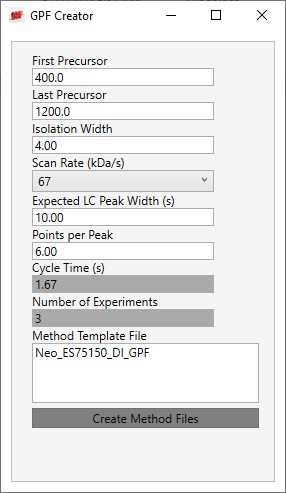

GPF Creator

GPF Creator Quick Reference

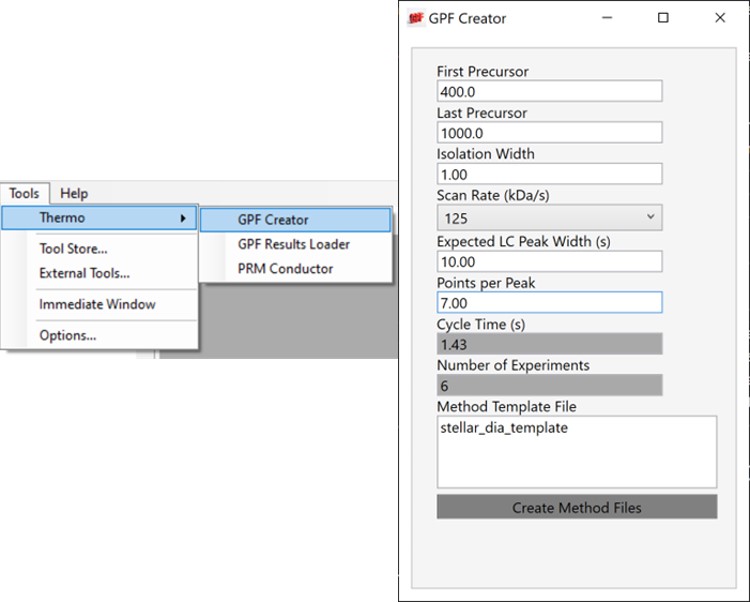

GPF Creator is a small application that automates the creation of multiple instrument method DIA files to span a precursor range for a gas-phase-fractionation experiment.

- First Precursor

- The lower bound of the precursor m/z range

- Last Precursor

- The upper bound of the precursor m/z range

- Isolation Width

- The quadrupole mass filter isolation width. The precursor range is split up into a series of acquisitions having this stride.

- Scan Rate (kDa/s)

- The ion trap mass analysis rate. This value determines how fast the acquisitions are acquired, and affects the number of experiments needed to span the precursor range.

- LC Peak Width (s)

- The expected LC peak width at the base. The cycle time is this value divided by the desired points per peak.

- Points per Peak

- The minimum acquired points per LC Peak width. The cycle time is the LC peak width divided by this value.

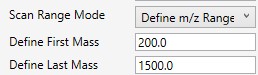

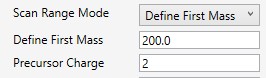

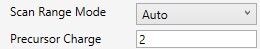

- Scan Range Mode

- Define m/z Range

- Define an explicit first and last mass for the acquired spectra. The acquisition rate is inversely proportional to the size of the m/z range.

- Define First Mass

- Define a constant first mass for all acquisitions. The last mass is determined based on the charge state, such that last_mass = precursor_mz * charge + 10

- Auto

- The first and last mass are determined automatically. The last mass is determined as in the Define First Mass mode. The first mass is determined as the lowest mass that still retains most of the ions at the last mass, based on experimental evaluations.

- Acquisition Time (s)

- The total estimated amount of time to acquire data for all the acquisitions from the First Precursor to the Last Precursor, with the given settings. The Number of Experiments is this value divided by the Cycle Time, rounded up to the next integer.

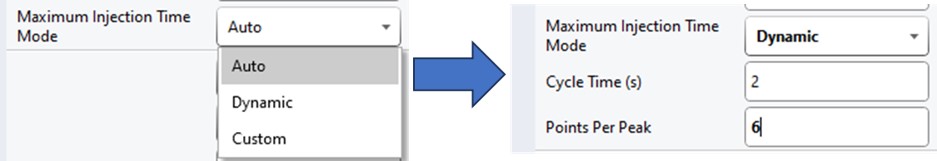

- Cycle Time (s)

- The desired cycle time, based on the LC Peak Width / Points per Peak

- Number of Experiments

- The total number of experiments required to span the Precursor range with the current settings. This is the number of instrument methods that will be created when Create Method Files is clicked.

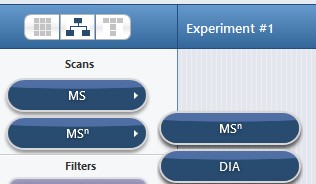

- Method Template File

- Double click this text box to open a file chooser for instrument .meth files. The file should have at least one DIA experiment, not counting Adaptive RT experiments (ART). The first non-ART experiment will be modified in the newly created files. The method should have all the LC details desired, and have the appropriate Method Duration and Experiment Durations.

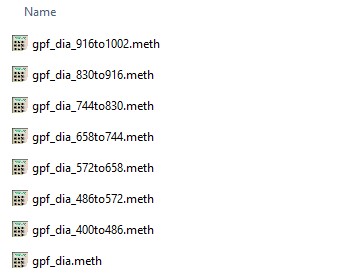

- Create Method Files

- Click this button to create the instrument methods. Each method will have the name of the template method, with the precursor mass range appended to the end. For example for the gpf_dia.meth template method, files with names like gpf_dia_572to658.meth are created.
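The arithmetic behind the Cycle Time, Number of Experiments, and output file names described above can be sketched as follows. This is a minimal illustration, not the tool's code: the function names and the input values (12 s peak width, 8 points per peak, 8.2 s acquisition time) are made up for the example, and how GPF Creator estimates the Acquisition Time itself is not covered.

```python
import math

def cycle_time_s(lc_peak_width_s: float, points_per_peak: float) -> float:
    """Cycle time = LC peak width at the base / points per peak."""
    return lc_peak_width_s / points_per_peak

def number_of_experiments(acquisition_time_s: float, cycle_time: float) -> int:
    """Acquisition time divided by cycle time, rounded up to the next integer."""
    return math.ceil(acquisition_time_s / cycle_time)

def method_file_name(template_name: str, first_mz: int, last_mz: int) -> str:
    """Append the precursor range to the template name, e.g. gpf_dia_572to658.meth."""
    stem, ext = template_name.rsplit(".", 1)
    return f"{stem}_{first_mz}to{last_mz}.{ext}"

# Illustrative values only (assumptions, not defaults of the tool).
cycle = cycle_time_s(lc_peak_width_s=12.0, points_per_peak=8)
n_experiments = number_of_experiments(acquisition_time_s=8.2, cycle_time=cycle)
name = method_file_name("gpf_dia.meth", 572, 658)
print(cycle, n_experiments, name)
```

With these inputs the cycle time is 1.5 s, so 8.2 s of total acquisition time needs 6 experiments.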

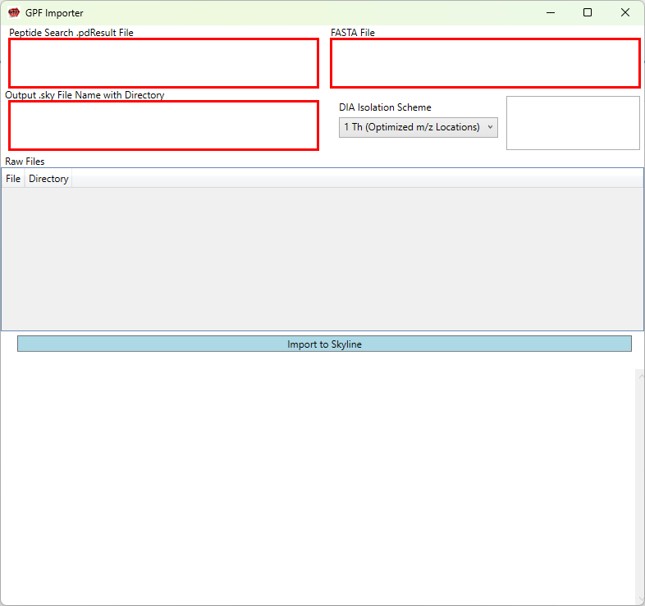

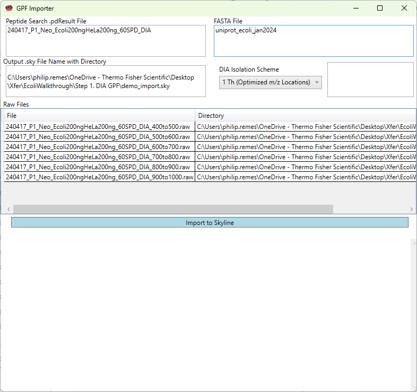

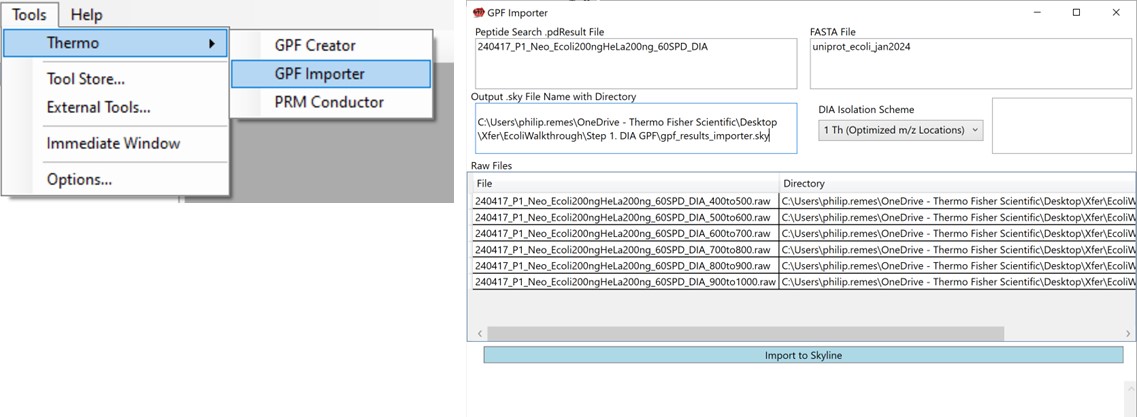

GPF Importer

GPF Importer Quick Reference

GPF Importer is a small application that automates the import of GPF or any peptide search data from Proteome Discoverer. This program imports the data with defaults that are appropriate for the Stellar MS, but the same result can be achieved through the Skyline File / Import / Peptide Search wizard.

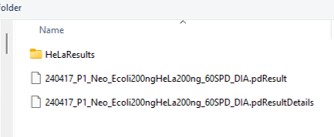

- Peptide Search .pdResult File

- Double click to open a file chooser for a .pdResult file. Since PD v3.1, a corresponding file with the .pdResultDetails extension must be present in the same folder.

- Fasta File

- Double click to open a file chooser for a .fasta file.

-

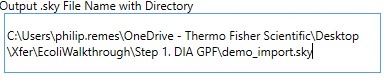

Output .sky File Name with Directory

- Enter the full path to where you want the output .sky file to be. The folder must exist, and the extension of the file must be .sky for the red outline around the box to go away. Usually one can copy the path to a folder and paste here, and then type the desired .sky file.

- Enter the full path to where you want the output .sky file to be. The folder must exist, and the extension of the file must be .sky for the red outline around the box to go away. Usually one can copy the path to a folder and paste here, and then type the desired .sky file.

-

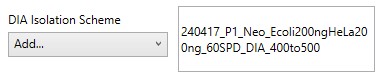

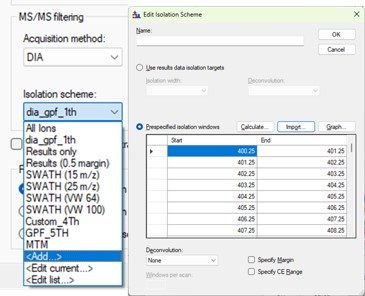

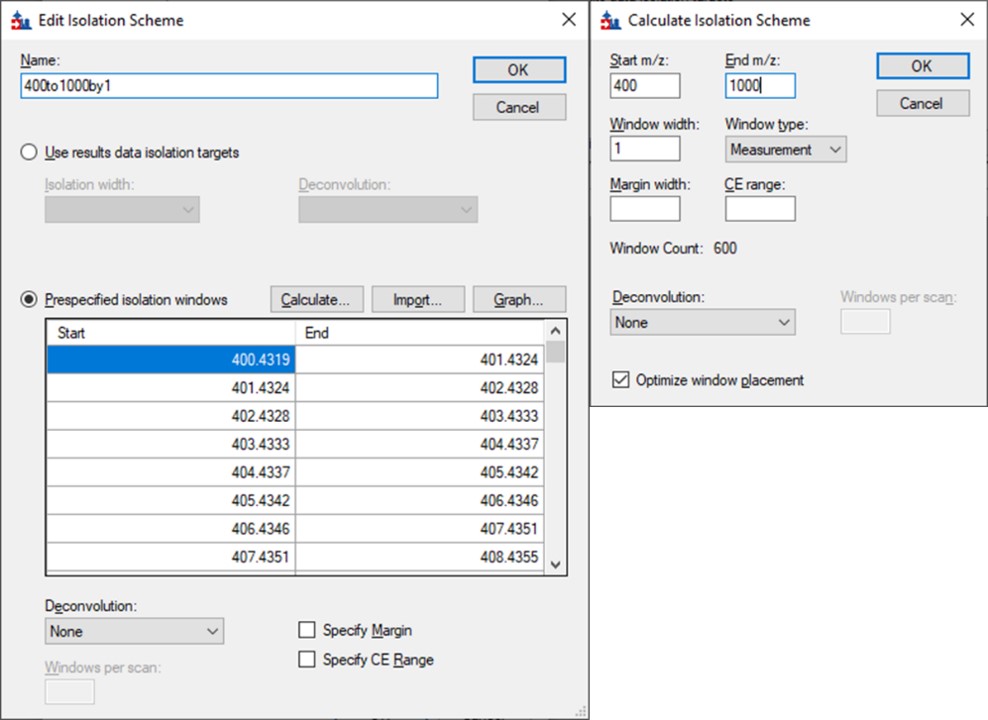

DIA Isolation Scheme

- Select the isolation width used for your experiment. The chooser assumes that you used the Window Placement Optimization option in the Method Editor.

If for some reason you didn't use Window Placement Optimization in the DIA method, it's possible to select a .raw file that has the DIA isolation scheme that you want to use. This is equivalent to using Skyline to Add an isolation scheme, and selecting Import.

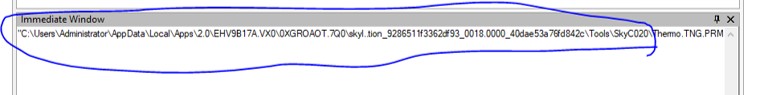

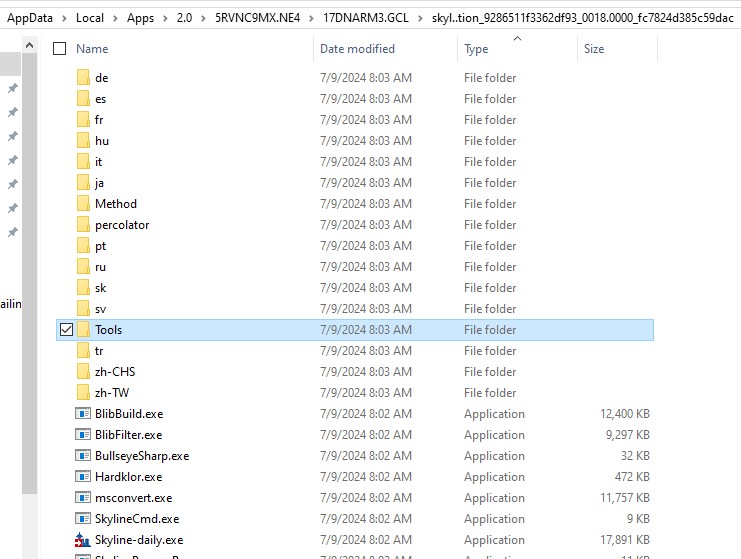

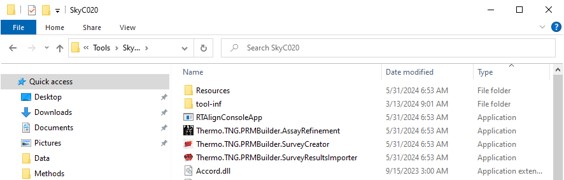

The GPF creator does this for the pre-defined schemes, by creating a short 1-minute instrument method with a scheme, and acquiring the .raw file through Tune. The template .raw files are in the installed Skyline Tools directory, which you can locate by inspecting the Skyline Immediate Window when you launch a tool.

- Raw Files

- Double click this box to select one or more .raw files that will be imported to the created .sky file after the spectral library is created from the .pdResult files.

- Double click this box to select one or more .raw files that will be imported to the created .sky file after the spectral library is created from the .pdResult files.

-

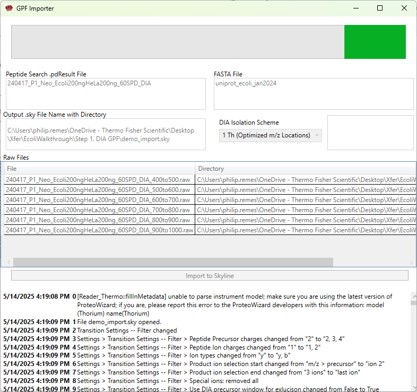

Import to Skyline

- Click this button to start the import. As the import happens, the Skyline API logging text will print to the lower text box.

Once the import is finished (it can take minutes), the output Skyline file will open with all the data imported. You are then ready to create an assay with PRM Conductor.

PRM Conductor

PRM Conductor Quick Reference

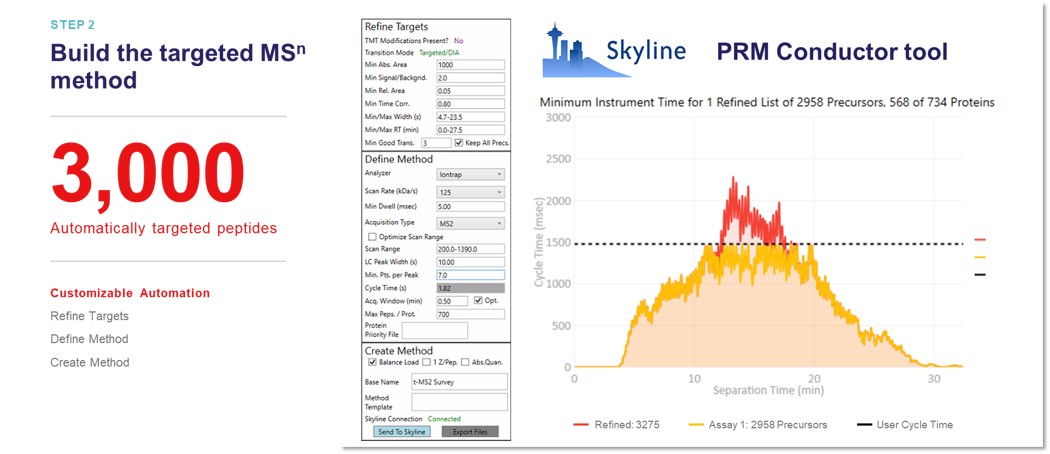

PRM Conductor is an application that aids in the creation of targeted MS methods. This program filters "bad" transitions, and selects "good" peptides via a set of criteria, and helps the user to create one or more instrument methods that will be acquired with at least a required number of points per peak.

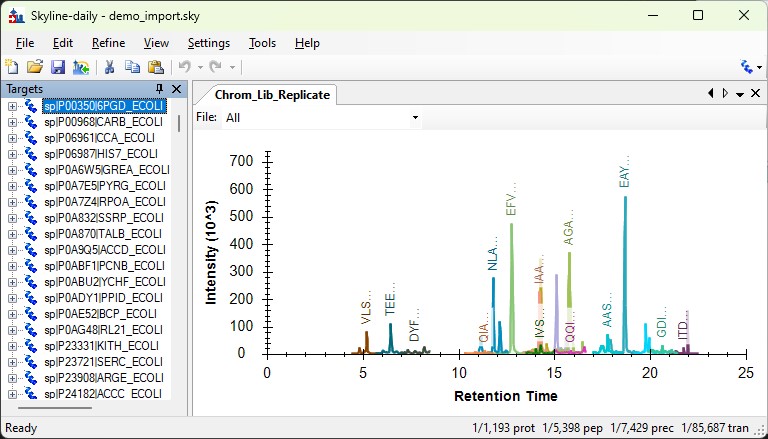

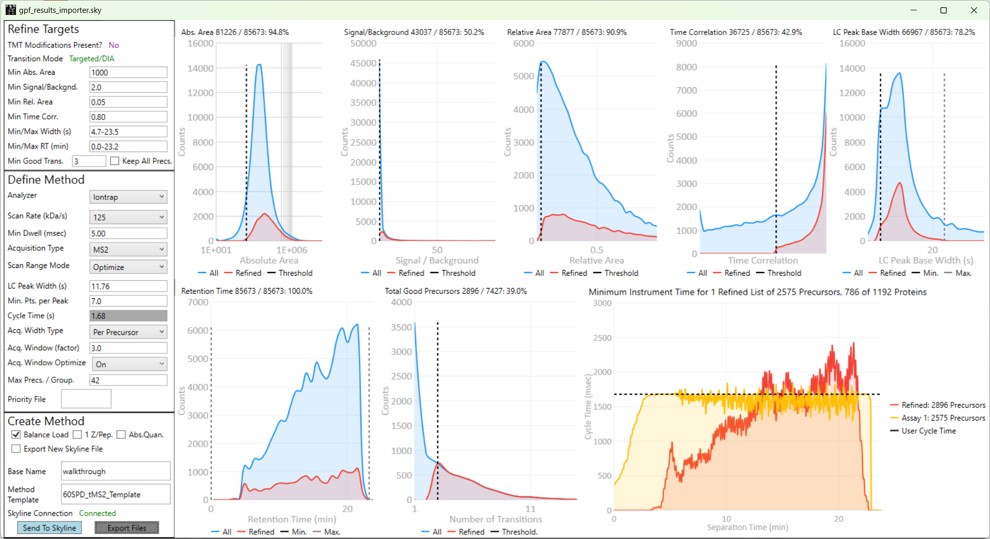

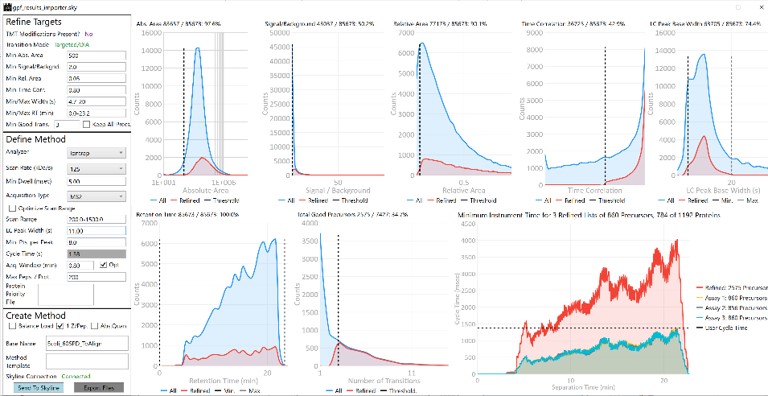

Launching PRM Conductor from the Thermo tools menu will open the application. If the Skyline document contains data, a report will be exported from Skyline and imported to PRM Conductor. The view will look similar to the one below.

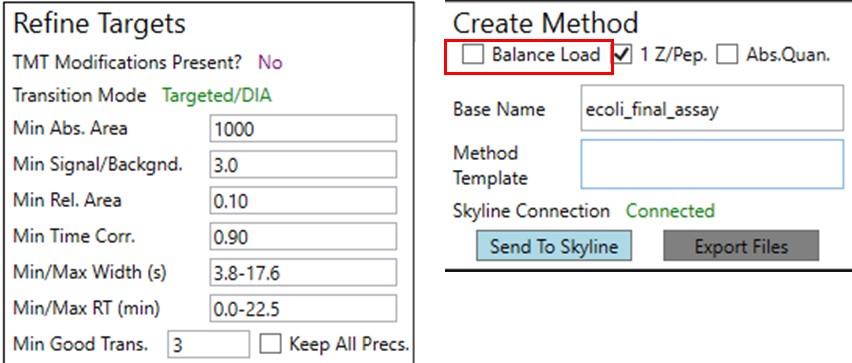

The user parameters are split into 3 sections: Refine Targets, Define Method, and Create Method.

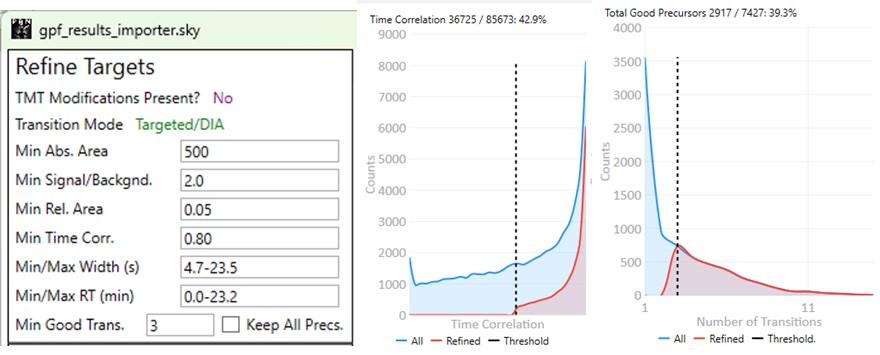

Refine Targets

These parameters configure a series of simple filters for the transitions, and allow precursors to be filtered by the number of good transitions that they have.

- TMT Modifications Present?

- This value is No if there are no TMT modifications. If TMT modifications are present, then the exported methods can account for this, for example by choosing MS3 precursors that have TMT tags.

- Transition Mode

- This value is Precursor/DDA if ALL the analytes have precursor transitions. The 1.0 PRM Conductor version would set this mode if ANY precursor transition was present, which was confusing. This DDA mode means that MS2 data may only be acquired once per LC peak, and so some metrics like Time Correlation are not valid. This mode is common for Small Molecule analysis when data are imported from Compound Discoverer. PRM Conductor is still useful in this case, to create instrument methods, but it may be advisable to select the Keep All Precs. option and apply filtering on a later targeted data set.

- This value is usually Targeted/DIA, enabling the full filtering functionality of the Time Correlation filter.

- Min. Abs. Area

- Filters transitions by requiring that they have a minimum absolute area value. This value may have to change for data acquired on different instruments. For example, intensities on Orbitrap instruments are arbitrarily scaled to values that are ~10x those for Ion trap and Astral data.

- Min. Signal / Backgnd.

- Filters transitions by requiring that they have a minimum signal to background ratio. Signal is the Skyline peak area, and Background is the Skyline background value. This value should be used sparingly, because the current background metric that we use is not as good as it could be.

- Min. Rel. Area

- Filters transitions by requiring that they have a minimum relative area to the largest transition for its precursor.

- Min. Time Corr.

- Filters transitions by requiring that they have a minimum correlation to the median transition. This value is called Shape Correlation by Skyline, and was defined by Searle et al., and later in some detail by Heil et al.

- Min./Max. Width (s)

- Filters transitions by requiring that their base LC width be within the given bounds. The base width is computed from the Skyline FWHM by assuming a Gaussian shape and multiplying by 2.54. See the supplement of Remes et al. for a derivation.

- Min./Max. RT (min)

- Filters precursors by requiring that their retention time be within the given bounds.

- Min. Good Transitions

- Filters precursors by requiring at least this many transitions that satisfy the above filters.

- Keep All Precs.

- This option ensures that no precursors are filtered, and the top Min. Good Transitions are kept. If there are not enough good transitions, "bad" transitions are selected according to Area x Time Corr. This option is useful when the user wants to keep all the precursors and just clean up the transitions, or is using DDA mode.
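The Refine Targets filters can be pictured with the sketch below. It is only an illustration of the rules as described in this section, not PRM Conductor's implementation: the `Transition` class, the function names, and every default threshold are hypothetical, and the handling of Keep All Precs. (pad the good transitions with the best "bad" ones, ranked by Area x Time Corr.) is one reading of the description above.

```python
from dataclasses import dataclass

FWHM_TO_BASE = 2.54  # factor quoted in the text for converting FWHM to base width

@dataclass
class Transition:
    area: float        # Skyline peak area
    background: float  # Skyline background value
    time_corr: float   # Skyline Shape Correlation
    fwhm_s: float      # Skyline FWHM, in seconds

def is_good(t, max_area, min_abs_area=100.0, min_sb=1.0, min_rel_area=0.05,
            min_time_corr=0.8, min_width_s=3.0, max_width_s=30.0):
    """Apply the per-transition filters; all thresholds here are made-up examples."""
    base_width_s = t.fwhm_s * FWHM_TO_BASE
    signal_to_background = t.area / t.background if t.background > 0 else float("inf")
    rel_area = t.area / max_area if max_area > 0 else 0.0
    return (t.area >= min_abs_area
            and signal_to_background >= min_sb
            and rel_area >= min_rel_area
            and t.time_corr >= min_time_corr
            and min_width_s <= base_width_s <= max_width_s)

def refine(transitions, min_good=3, keep_all_precs=False, **thresholds):
    """Return the kept transitions, or None if the precursor is filtered out."""
    max_area = max(t.area for t in transitions)
    good, bad = [], []
    for t in transitions:
        (good if is_good(t, max_area, **thresholds) else bad).append(t)
    if len(good) >= min_good:
        return good
    if not keep_all_precs:
        return None
    # Keep All Precs.: fill up with the best "bad" transitions by area x time corr.
    bad.sort(key=lambda t: t.area * t.time_corr, reverse=True)
    return good + bad[:min_good - len(good)]

transitions = [
    Transition(area=5000, background=100, time_corr=0.98, fwhm_s=4.0),
    Transition(area=2500, background=100, time_corr=0.95, fwhm_s=4.0),
    Transition(area=400, background=200, time_corr=0.40, fwhm_s=4.0),
]
print(refine(transitions))                       # precursor rejected: only 2 good
print(len(refine(transitions, keep_all_precs=True)))
```

In this example the third transition fails only the time correlation filter, so the precursor is dropped with the default settings but retained with three transitions when `keep_all_precs=True`.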

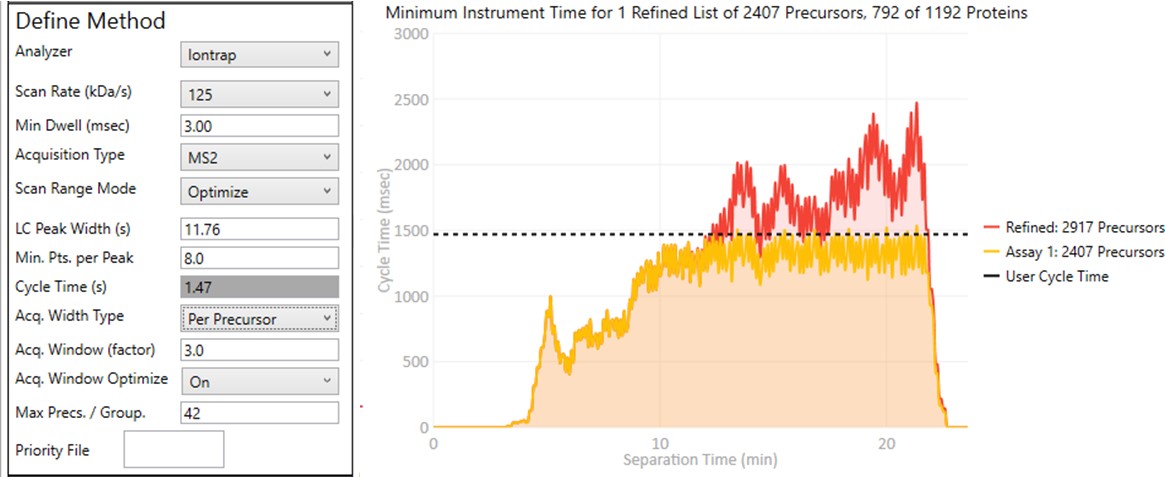

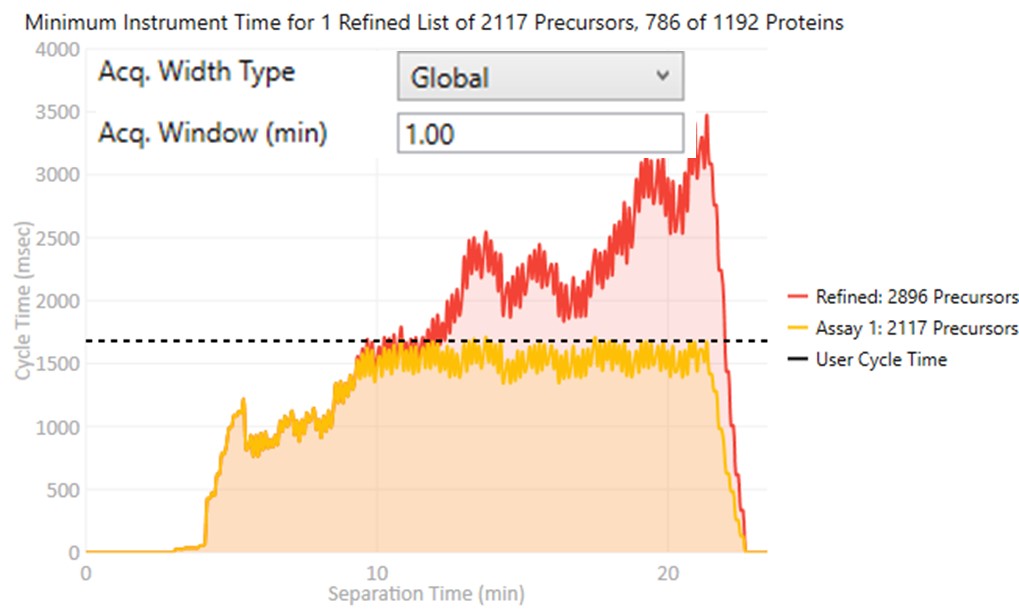

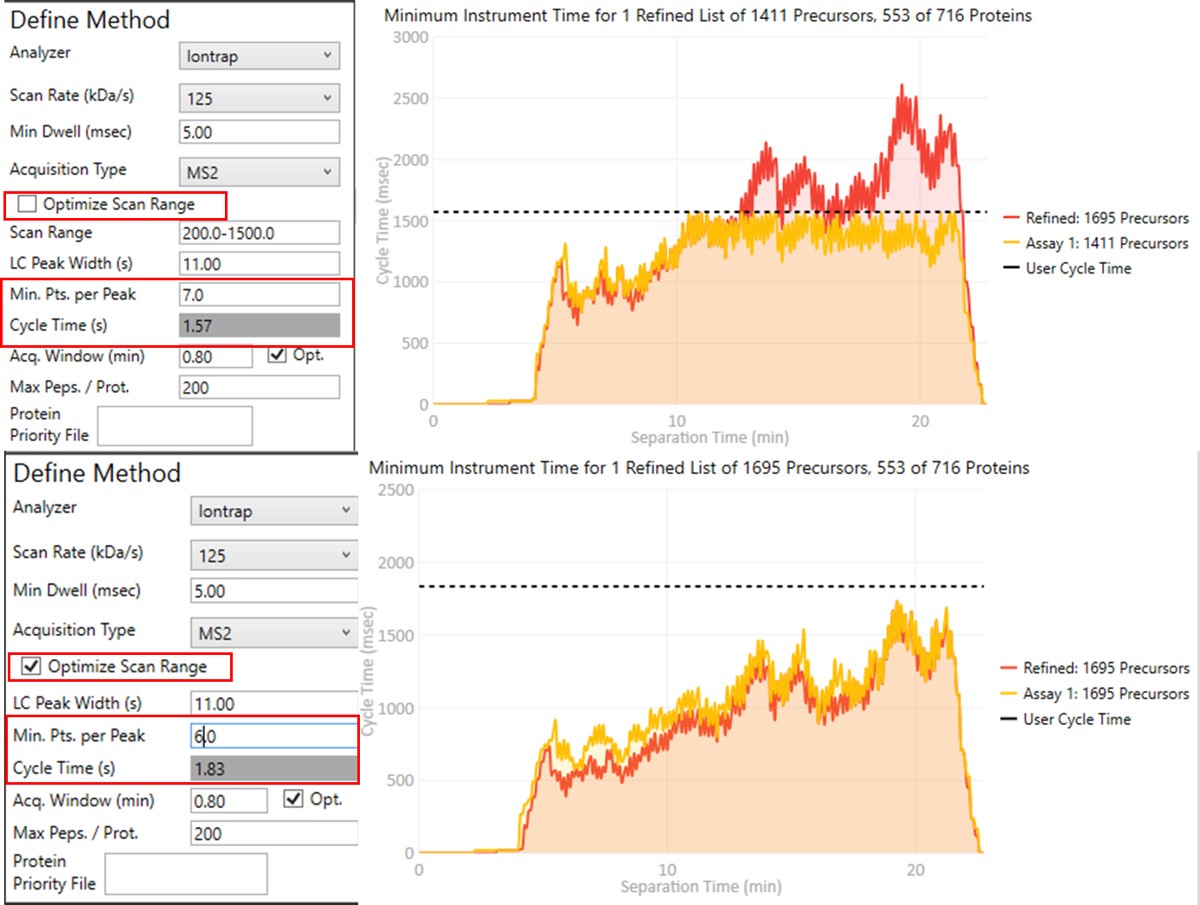

Define Method

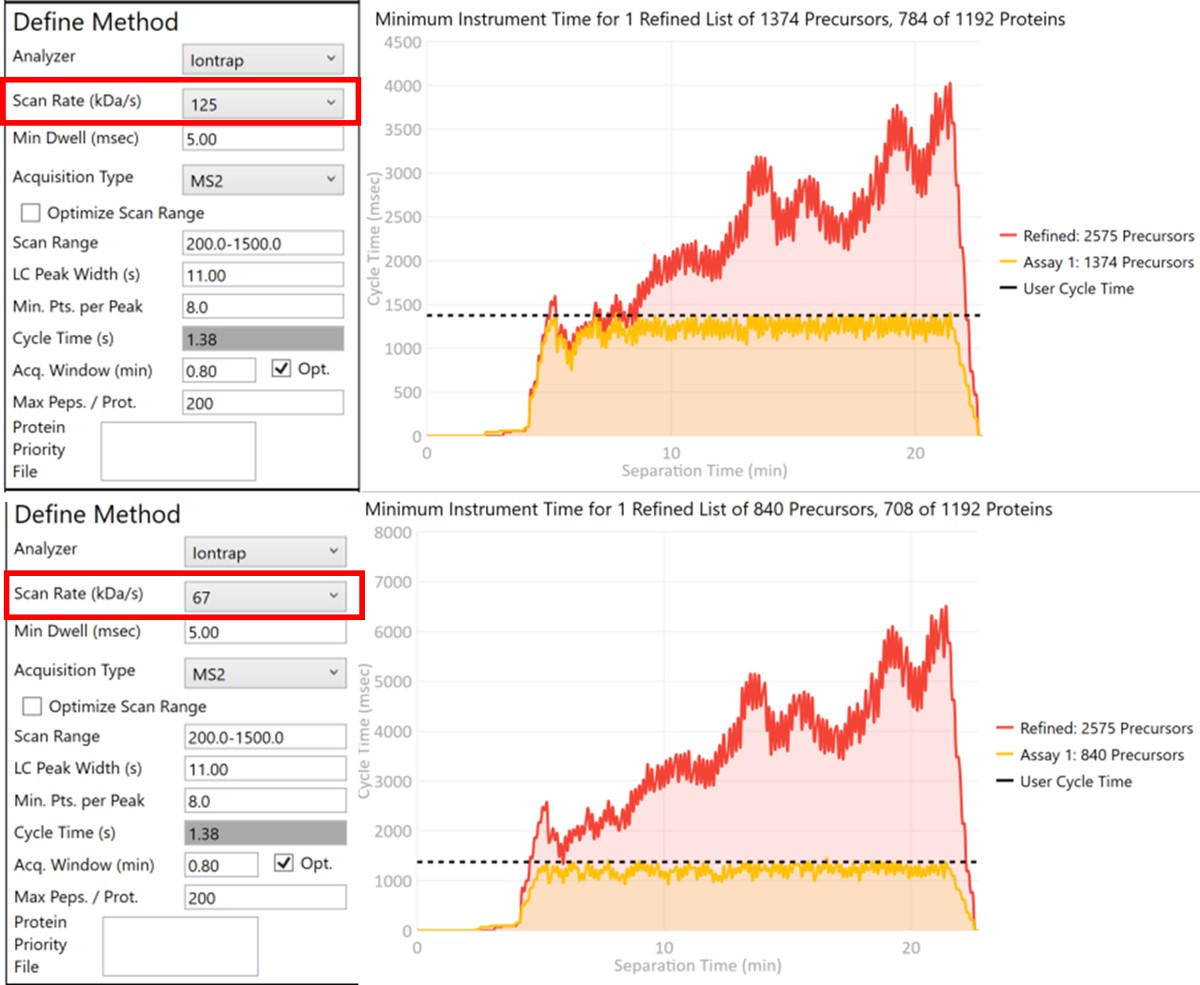

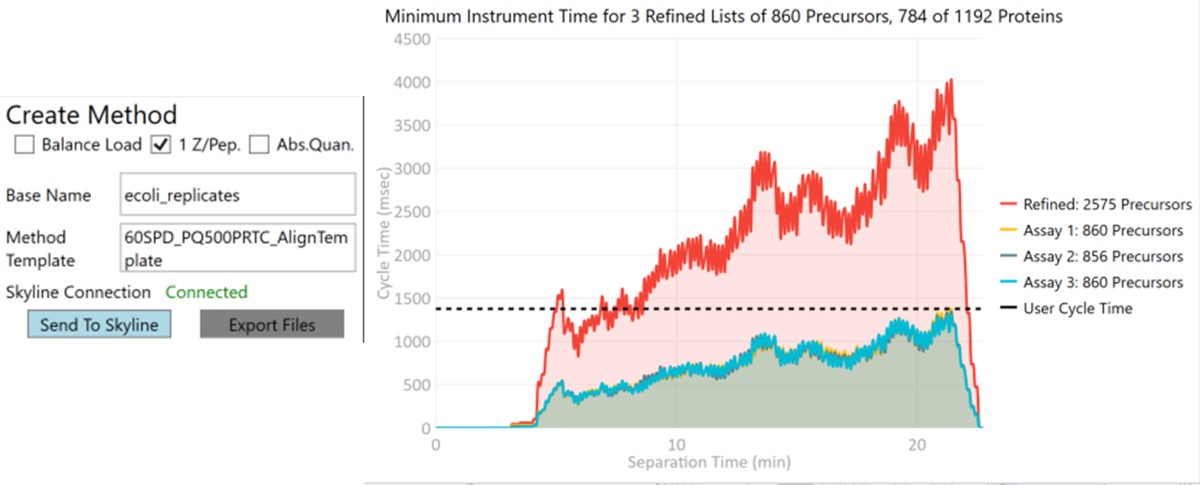

These parameters control how many targets can be included in the assay. When parameters in this section are changed and the 'enter' key is pressed, the graph on the right will update, showing how many precursors can be included in the assay while respecting the Cycle Time defined by the LC Peak Width and the Min. Pts. per Peak.

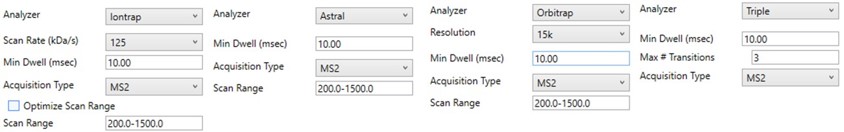

Analyzer

- Ion Trap

- Scan Rate (kDa/s)

- Sets the analysis scan rate. This value affects the acquisition rate, and therefore the assay target capacity.

- Astral

- Currently the Astral has no analyzer-specific parameters

- Currently the Astral has no analyzer-specific parameters

-

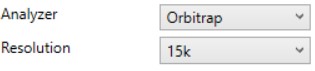

Orbitrap

- Resolution (k)

- Sets the analysis resolution. This value affects the acquisition rate, and therefore the assay target capacity.

- Sets the analysis resolution. This value affects the acquisition rate, and therefore the assay target capacity.

- Resolution (k)

-

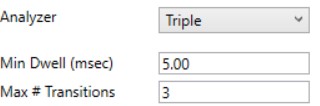

Triple

- Max #Transitions

- At most this number of transitions will be selected, because the assay target capacity decreases linearly with the number of transitions.

- Min Dwell (msec)

- Also called injection time on many instruments, this value sets the smallest amount of precursor accumulation time per target. Typically we set this value small enough so that it does not affect the assay capacity, and rely on the dynamic AGC algorithm to boost the injection time according to the available time in the cycle. See Remes et al. for a description of the dynamic AGC process.

- In some cases, the experimenter may know that to achieve high quality data, a minimum dwell time is needed, and will adjust this value accordingly.

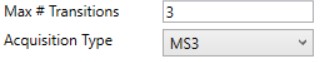

Acquisition Type

- MS2

- The standard analysis type, where each acquisition contains data for a single precursor that is mass selected and fragmented.

- MS3

- Currently available only when the Ion Trap analyzer is selected. In this mode, each acquisition contains data for a single precursor that is mass selected and fragmented, after which one or more fragments are further mass selected and fragmented.

- The Max # Transitions parameter becomes available in this mode, limiting the number of MS3 precursors that will be simultaneously mass analyzed in the second MS stage. For purposes of the acquisition rate estimations, this mode currently assumes that resonance CID with 4 msec activation time is used for the first activation, and HCD is used for the second activation. In reality, if the user selects a method template that uses resonance CID for the second activation, then the activation for each MS3 precursor is performed serially, taking at least #transitions x activation_time.

-

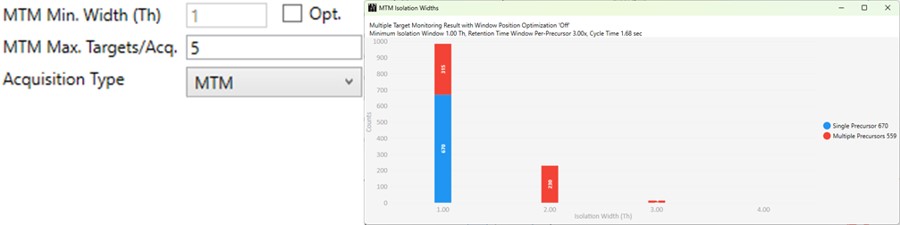

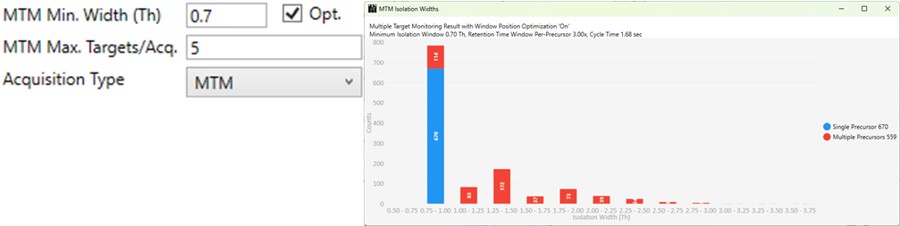

MTM

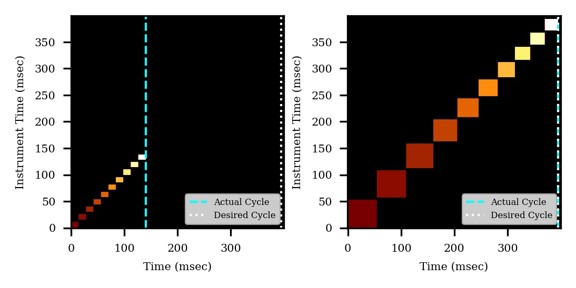

- Multiple target monitoring is a type of method that can be generated from 1 Th isolation window GPF DIA data. It analyzes the GPF data to determine when it is safe to expand the number of targets, or the isolation width, to encompass multiple targets. MTM can be used to expand the number of targets in an assay compared to normal PRM, or alternatively, for the same number of targets, MTM can enable the use of faster gradients and higher throughput, or higher injection times and better sensitivity.

- When the Opt. check box is unchecked, then the isolation widths are multiples of the GPF isolation width, which is shown in a disabled text box (1 here). A pop-up window shows the distribution of isolation widths, and how many of them are for single or multiple targets.

- When the Opt. check box is checked, then the isolation widths are customized for each acquisition, such that the window size is (largest_mz - smallest_mz) + MTM Min. Width (Th).

-

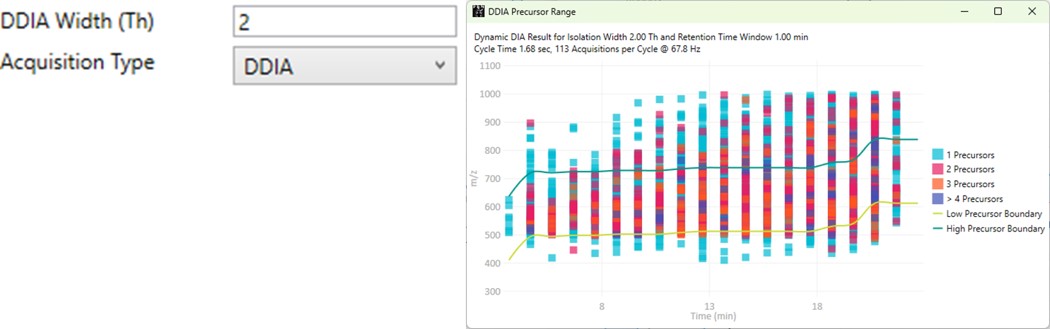

DDIA

- Dynamic DIA is a type of DIA method in which the precursor range shifts as a function of the experiment time. This method can be used to acquire data with a narrower isolation window than would be possible if data for the entire precursor range had to acquired on each cycle.

- The DDIA Width(Th) sets the isolation width that will be used. Narrower widths will acquire higher quality data, for fewer precursors.

- A pop-up plot appears that shows the density of precursors in the Skyline document, along with lines that trace the proposed precursor range as a function of experiment time.
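For the MTM option with the Opt. check box checked, the window size rule quoted above, (largest_mz - smallest_mz) + MTM Min. Width (Th), can be written as a small helper. This is a sketch under assumptions: the function name is hypothetical, and centering the window midway between the smallest and largest target m/z is a guess that the text does not confirm.

```python
def mtm_window(target_mzs, mtm_min_width_th):
    """Return (center_mz, width_th) for one MTM acquisition.

    width = (largest_mz - smallest_mz) + MTM Min. Width, as stated in the text.
    The center (midpoint of the target range) is an assumption of this sketch.
    """
    smallest, largest = min(target_mzs), max(target_mzs)
    width = (largest - smallest) + mtm_min_width_th
    center = (smallest + largest) / 2.0
    return center, width

# Three co-isolated targets versus a single target (illustrative m/z values).
multi = mtm_window([500.25, 500.75, 501.25], mtm_min_width_th=1.0)
single = mtm_window([642.5], mtm_min_width_th=1.0)
print(multi, single)
```

A single target gets exactly the minimum width, and each additional target widens the window by the m/z span it adds.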

Scan Range Mode

- Define m/z Range

- Define an explicit first and last mass for the acquired spectra. The acquisition rate is inversely proportional to the size of the m/z range.

- Define First Mass

- Define a constant first mass for all acquisitions. The last mass is determined based on the charge state, such that last_mass = precursor_mz * charge + 10

- Auto

- The first and last mass are determined automatically. The last mass is determined as in the Define First Mass mode. The first mass is determined as the lowest mass that still retains most of the ions at the last mass, based on experimental evaluations.

- Optimize

- The first and last mass are set based on the set of "good" transitions for a precursor plus a small buffer. This mode enables the fastest acquisition rates possible for the Ion trap analysis, where the mass analysis time is directly proportional to the scan range.
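The two scan range rules that are stated explicitly above can be sketched as follows. The last mass formula is taken from the Define First Mass description; the Optimize helper is an illustration only, and its 5 m/z buffer is a placeholder because the text says only "a small buffer". Function names are hypothetical.

```python
def last_mass(precursor_mz: float, charge: int) -> float:
    """Define First Mass / Auto modes: last_mass = precursor_mz * charge + 10."""
    return precursor_mz * charge + 10

def optimize_scan_range(good_fragment_mzs, buffer_mz=5.0):
    """Optimize mode: span the "good" transitions plus a buffer.

    buffer_mz is an assumed value; the actual buffer is not given in the text.
    """
    return min(good_fragment_mzs) - buffer_mz, max(good_fragment_mzs) + buffer_mz

upper = last_mass(precursor_mz=500.0, charge=2)
narrow = optimize_scan_range([300.0, 450.5, 820.0])
print(upper, narrow)
```

Because ion trap mass analysis time is proportional to the scan range, the narrower Optimize range (530 m/z wide here, versus a range reaching up to 1010 m/z) is what makes the faster acquisition rate possible.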

Other Define Method Parameters

- LC Peak Width (s)

- The expected LC peak width at the base. This value is pre-populated from the input data, but can be updated as desired. The instrument maximum cycle time is this value divided by the desired points per peak.

- Points per Peak

- The minimum desired acquisition points per LC Peak width. The cycle time is the LC peak width divided by this value.

-

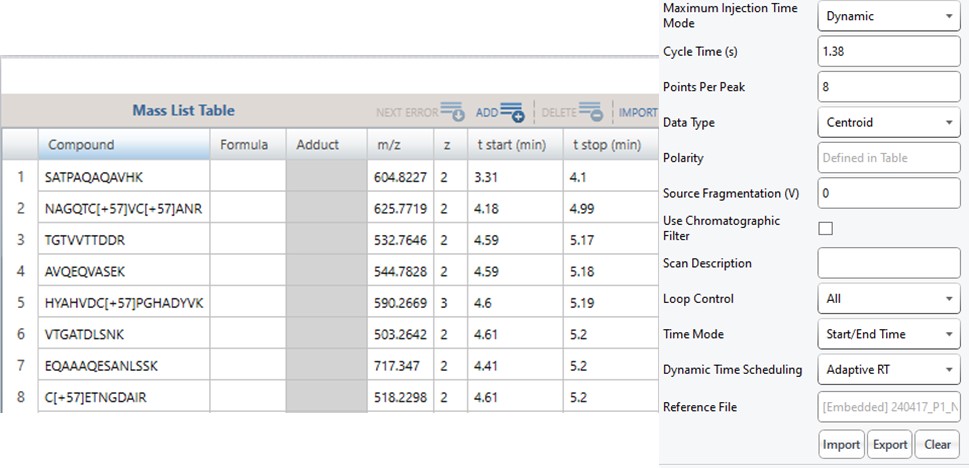

Cycle Time (s)

- The desired cycle time, based on the LC Peak Width / Points per Peak

-

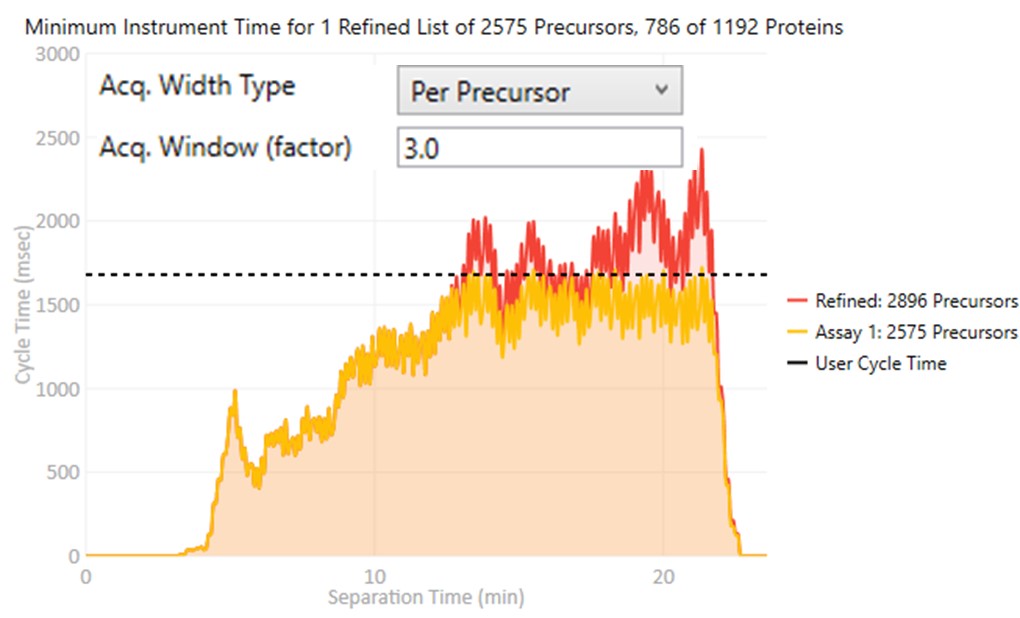

Acquisition Width Type

Defines the method used to set the acquisition window width around each target in the method.- Global

- All targets get the same, global acquisition window size, Acq. Window (min).

- All targets get the same, global acquisition window size, Acq. Window (min).

- Per precursor

Each target gets an acquisition window width defined as its individual base peak width, times the Acq. Window (factor) value.

- Global

-

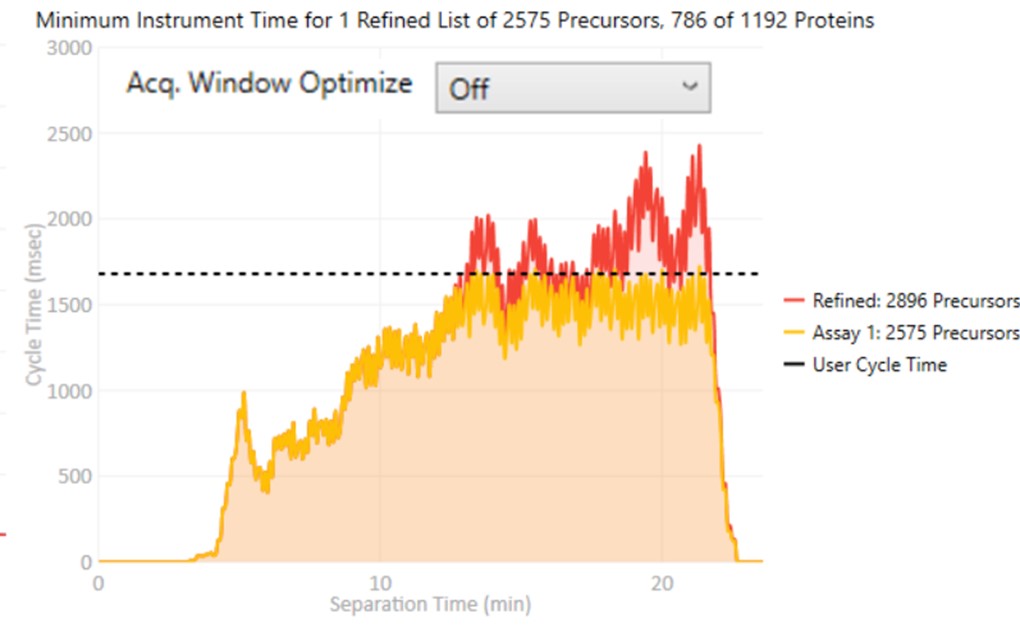

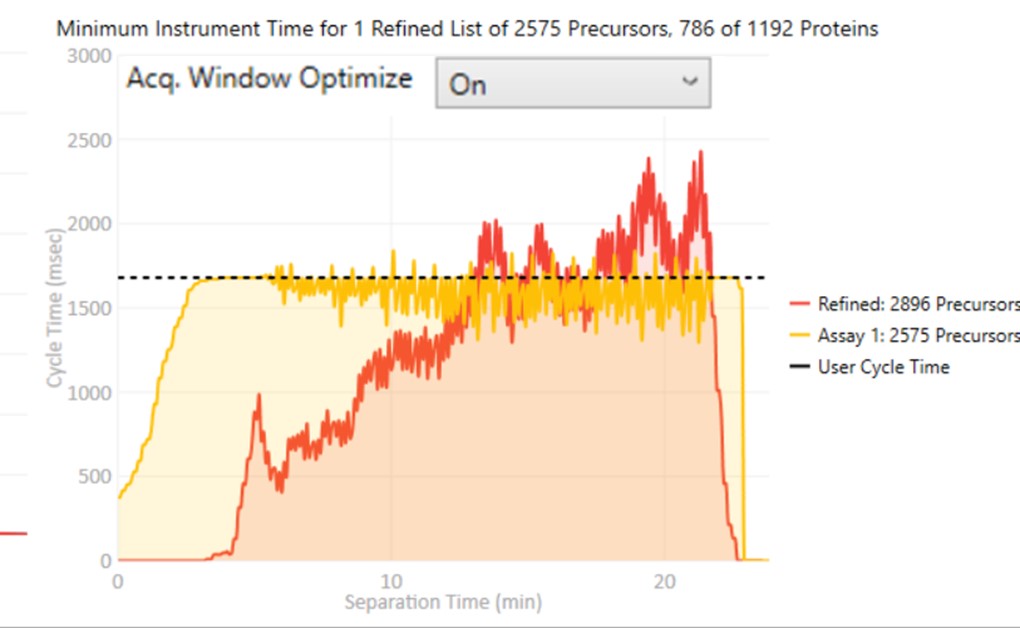

Acq. Window Optimize

- When set to Off, the acquisition windows will be exactly the ones determined based on the current Acquisition Width Type. Note how the Assay 1 density in yellow is the same as the Refined density in red in the time region from 0 to ~10 minutes.

- When set to On, then the acquisition windows widths are expanded by up to some factor (5x currently), while ensuring that the acquisitions don't exceed the desired cycle time. Note how the Assay 1 density matches the cycle time for much of the region between 0 and 10 minutes now. The reason for this mode is that early eluting molecules can have variable retention times, and Adaptive RT may have little information to be able to adjust the targeted schedule at these early times. Making the widths larger in a dynamic way is a nice way to make the assay acquisition more robust. In the future we could add a UI parameter that specifies the maximum expansion factor.

- When set to Off, the acquisition windows will be exactly the ones determined based on the current Acquisition Width Type. Note how the Assay 1 density in yellow is the same as the Refined density in red in the time region from 0 to ~10 minutes.

-

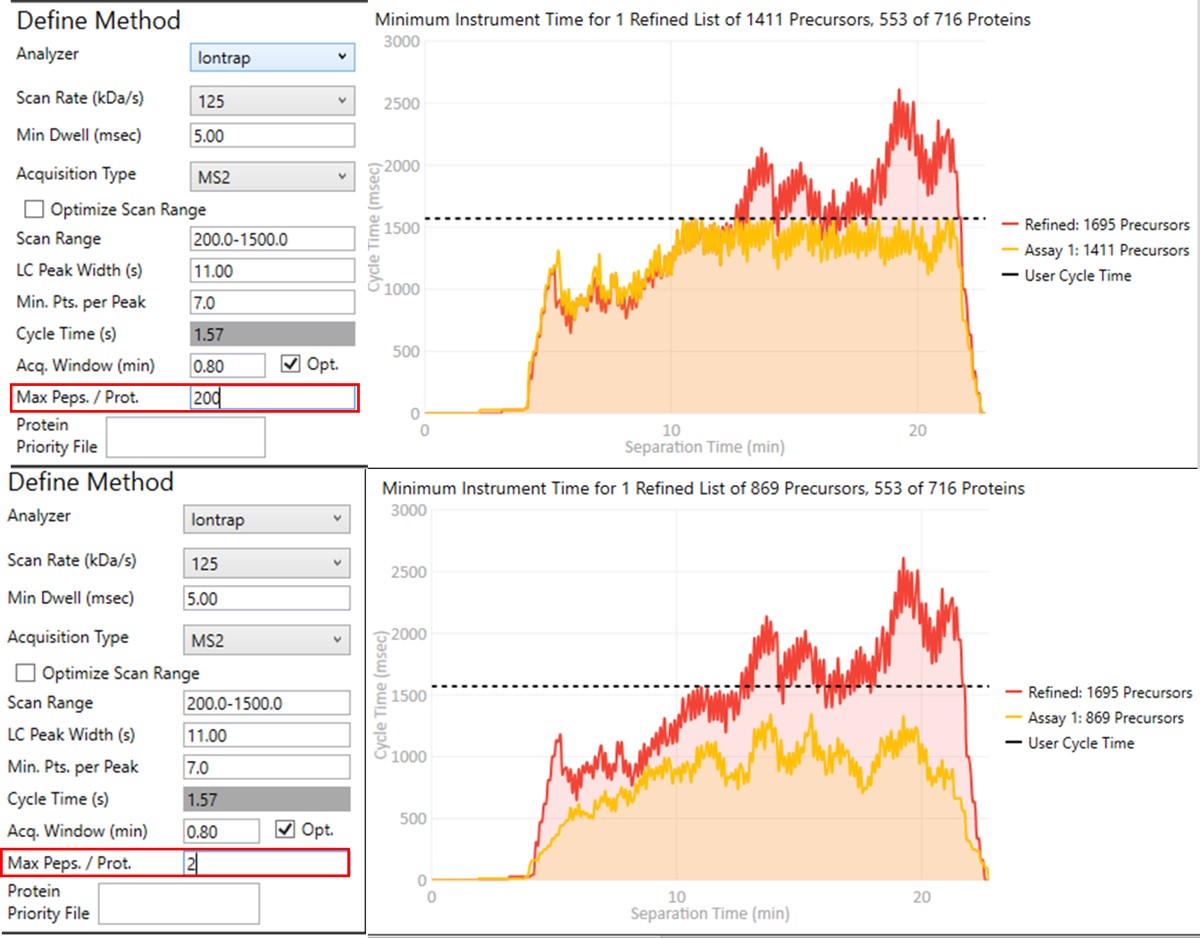

Max Precs. / Group

- Sets the maximum number of peptides per protein in Skyline's Peptide mode, or molecules per molecule group in Skyline's Molecule mode. This parameter can be used to increase assay quality by removing targets from the assay that may not add additional information. The included targets are selected by their quality, defined as their Area x Time Correlation.

-

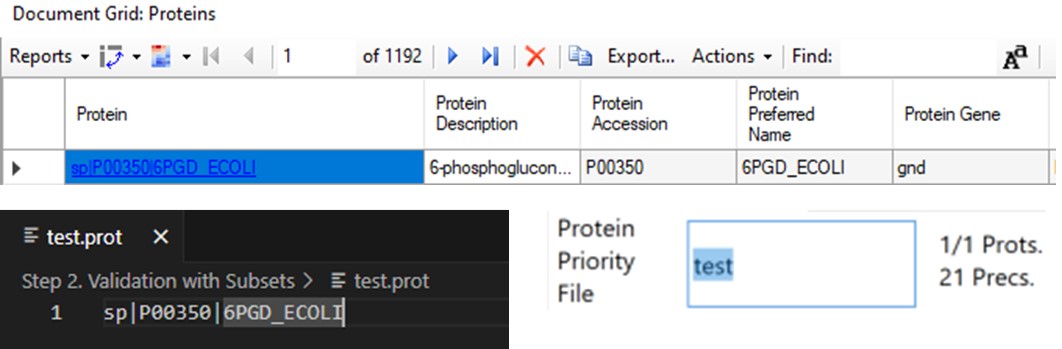

Priority File

- Double click to select a file with a .prot extension that you've made. Just create a text file, and save it with the .prot extension. On each line, you can enter a protein name, or molecule list name. Skyline puts either the protein name or molecule list name in the Report column called "Molecule List". Any precursors that belong to the protein or molecule list will be accepted into the assay, even if they don't pass all the filters.

- Using a Priority file is a good way to incorporate iRT compounds into an assay, when doing an initial search for heavy-labeled standards.

- If the Balance Load check box is not checked, then each of the multiple assays that may be generated will contain the prioritized targets.

- New for v1.1, you can also enter peptide sequences or molecule names. These are the values found in the Skyline report columns called "Modified Sequence Monoisotopic Masses" or "Molecule", in the Skyline Protein or Molecule modes, respectively.

- For example, the file could have 2 peptide sequences and a protein name.

LPALFC[+57.021464]FPQILQHR

ESYGYNGDYFLVYPIK

SPEA_ECOLI
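A priority file like the one above is plain text with one entry per line, so it is easy to generate or check with a script. The sketch below writes the three example entries to a temporary .prot file and tests report rows against it. The helper names are hypothetical, and the matching rule (exact match on either the "Molecule List" value or the sequence/molecule name) is an assumption about how PRM Conductor compares entries.

```python
import tempfile
from pathlib import Path

def read_priority_file(path):
    """Return the set of non-empty lines of a .prot priority file."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    return {line.strip() for line in lines if line.strip()}

def is_prioritized(priority, molecule_list, name):
    """True if the protein / molecule list name or the peptide / molecule name is listed."""
    return molecule_list in priority or name in priority

entries = [
    "LPALFC[+57.021464]FPQILQHR",
    "ESYGYNGDYFLVYPIK",
    "SPEA_ECOLI",
]
with tempfile.TemporaryDirectory() as folder:
    prot_path = Path(folder) / "priority.prot"
    prot_path.write_text("\n".join(entries) + "\n", encoding="utf-8")
    priority = read_priority_file(prot_path)

# Any peptide of SPEA_ECOLI is prioritized; so is a listed sequence from another protein.
print(is_prioritized(priority, "SPEA_ECOLI", "SOMEPEPTIDEK"))
print(is_prioritized(priority, "OTHER_PROTEIN", "ESYGYNGDYFLVYPIK"))
```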

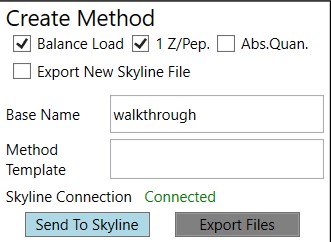

Create Method

These parameters control aspects of how the method will be created.

- Balance Load

- When checked, a single assay will be created that respects the currently defined Cycle Time. The number of Refined precursors added will depend on the particular choice of Define Method parameters. Precursors are added to the assay iteratively from each protein or molecule group in turn, where the precursors from each group are added in order from highest to lowest quality. Quality is currently defined as area x time correlation. A balanced load refers to how the precursor time density becomes uniform at the limit of many potential precursors, instead of peaked in the middle of the run.

- When not checked, multiple assays will be created that analyze all Refined precursors. Each assay will be of the traditional, non-balanced type. If a Priority File is specified, prioritized precursors will be added to all of the assays.

- 1 Z/Pep.

- When checked, only a single precursor from each peptide (or molecule, in Skyline's Molecule mode) will be accepted into the assay. The selected precursor will have the highest quality, defined as area x time correlation.

- When not checked, multiple precursors having different charge states could be in the assay, if they all passed the various filters.

- Abs. Quan.

- When checked, a light version of each heavy-labeled peptide will be added to the assay. This is a nice way to create a light+heavy assay from an initial heavy-only assay. The instrument time plot will update to have 2x the number of targets, making it possible to see whether enough points per peak will be acquired.

- Export Skyline File

- When checked, a new Skyline file having the precursors with their corresponding filtered transitions will be created when the Export Files button is pressed. Creating the new Skyline file takes some tens of seconds, which can be annoying, so it was made optional. The benefit of this option is that the new Skyline file will have its Transition Settings set to PRM with Stellar-specific parameters.

- When not checked, no new Skyline file will be created when the Export Files button is pressed. Often we use this mode, and rely on using the Sync to Skyline button to update the current Skyline document, and later save a new Skyline document manually.

-

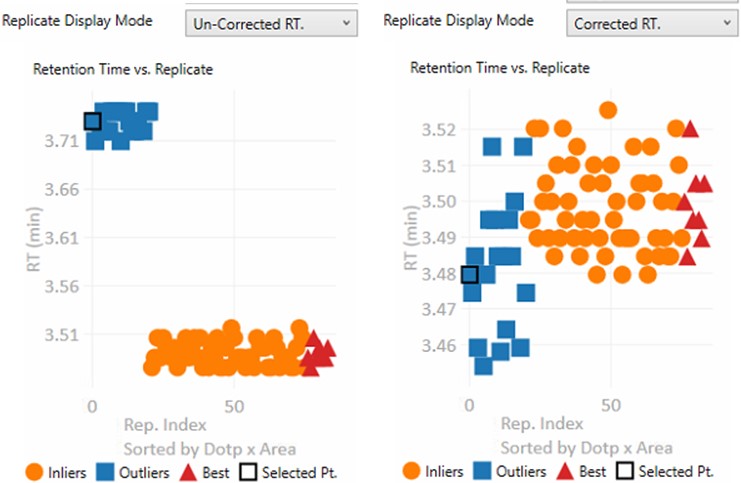

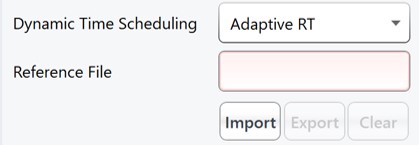

Combine DIA Windows for Reference

- This is a special option that only appears if the input data are DIA with isolation width less than 15 Th, and no large window Adaptive RT information is available.

- When checked, and if the .meth has specified Adaptive RT for Dynamic Time Scheduling, then the exported method will have an rtbin file that is made by combining acquisitions in silico. The DIA alignment acquisitions will have an isolation width of near 50 Th, and will adjust the target schedule accordingly.

- This is a nice option when creating Stellar MS targeted assays from Astral DIA data, which are likely acquired with small isolation widths, such as 2, 3, or 4 Th.

-

Base Name

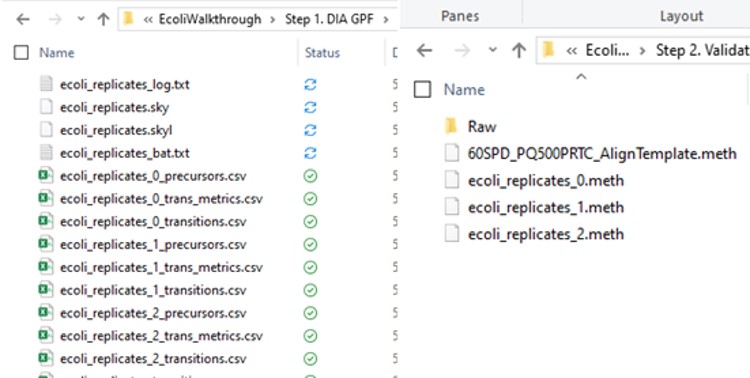

- Output files will have this value in them. For example, the .meth, .sky, and .csv files associated with a method will all have use this name.

-

Method Template

- Double click this text box to open a file chooser for a .meth file that will serve as a template for exported assay files. The template file should have a tMSn method in it, and the appropriate LC parameters, and Method and Experiment durations. Other parameters like activation type should also be specified. If Adaptive RT is specified in the Dynamic Time Scheduling, then an rtbin file will be created during export and saved in the .meth file. This is the preferred way to add Adaptive RT functionality to a method.

- You can make methods on your non-instrument computer if you have downloaded the Workstation version of the instrument software. This software is available at the Thermo Flexnet site.

- In this case, you can use the standalone method editor found at C:\Program Files\Thermo Scientific\Instruments\TNG\Stellar\1.1\System\Programs\TNGMethodEditor.exe.

- The standalone method editor only has access to MS information, not the LC driver information, but you can copy a .meth file from an instrument that has the LC information in it, and then resave and modify it on your laptop.

- Sync to Skyline

- Pressing this button updates the current Skyline file by removing the transitions, precursors, and proteins that are not in the final assay.

- Export Files

- Pressing this button will export precursor lists, transition lists, .meth files if specified in the Method Template field, and a .sky file if Export Skyline File is checked.

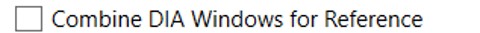
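The Balance Load selection order described in this section (take precursors from each protein or molecule group in turn, highest quality first) resembles a round-robin over the groups. The sketch below illustrates that ordering only. The `fits` callback is a hypothetical stand-in for PRM Conductor's check that the assay still respects the Cycle Time, and the quality numbers stand in for area x time correlation.

```python
from collections import deque

def balance_load(groups, fits):
    """Round-robin over groups, taking each group's best remaining precursor.

    groups: dict mapping group name -> list of (precursor, quality) pairs.
    fits:   callable(assay, precursor) -> bool; hypothetical capacity check.
    """
    queues = deque(
        deque(sorted(precursors, key=lambda p: p[1], reverse=True))
        for precursors in groups.values() if precursors
    )
    assay = []
    while queues:
        queue = queues.popleft()
        precursor, _quality = queue.popleft()
        if fits(assay, precursor):
            assay.append(precursor)
        if queue:                 # group still has candidates: back of the line
            queues.append(queue)
    return assay

groups = {
    "A": [("a1", 9.0), ("a2", 5.0), ("a3", 1.0)],
    "B": [("b1", 7.0)],
    "C": [("c1", 3.0), ("c2", 8.0)],
}
# Toy capacity rule: at most 4 targets in total.
assay = balance_load(groups, fits=lambda assay, precursor: len(assay) < 4)
print(assay)
```

Every group contributes its best precursor before any group contributes a second one, which is why a limited-capacity assay ends up spread across proteins rather than dominated by the largest group.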

Expert Review

Expert Review Quick Reference

Expert Review is an application that helps achieve consistent peak integration boundaries, through the use of replicate-level and experiment level correlations to reference data of various types.

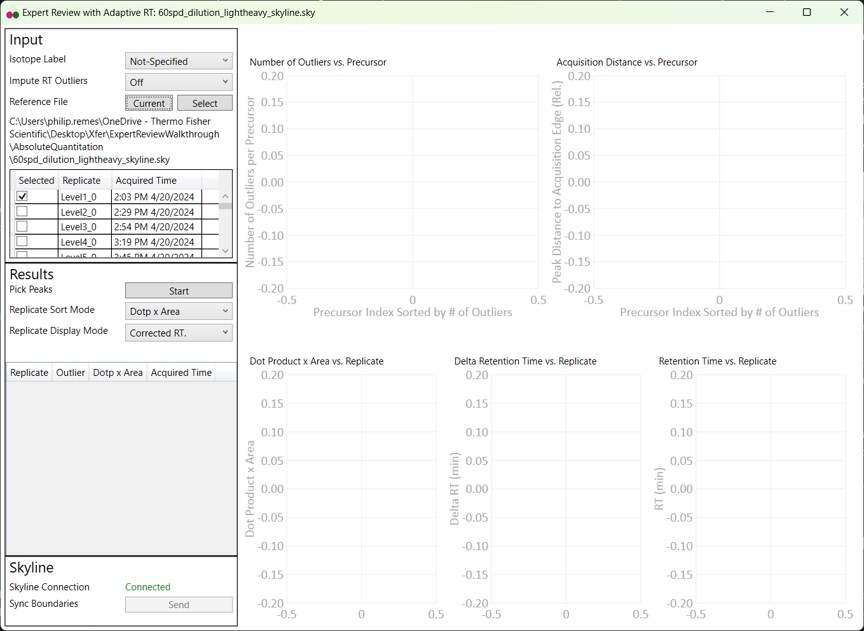

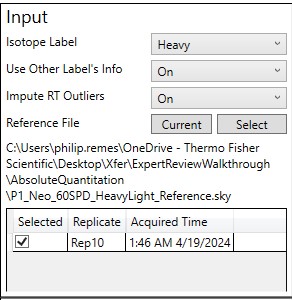

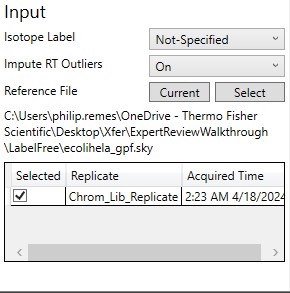

Input

These parameters change how the calculations will be performed. They take effect once the Results:Start button is pushed.

- Isotope Label

- Determines whether a specific isotopically-labeled version of the precursor is used for setting the boundaries. Skyline's Isotope Label Type report column is used as input data.

- Not Specified

- The isotope label type is not considered. Use this mode for label-free experiments. If this mode is used for data with light and heavy labels, the boundaries that are actually used could come from either molecule (not recommended).

- Heavy

- The integration boundaries from the heavy labeled precursors will be sent to Skyline.

- Light

- The integration boundaries from the endogenous, or light, precursors will be sent to Skyline.

- Use Other Label's Info

- This parameter is only visible if the Isotope Label parameter is set to Light or Heavy.

- On

- Experiment-level replicate analysis will use information from the other label when attempting to place consistent integration boundaries. Ex. information from the light precursor is used to help determine the heavy precursor boundaries.

- We recommend using "On". The option is provided in case the light data are not consistent between replicates and are hampering the boundary location accuracy.

- Off

- Experiment-level replicate analysis will not use information from the other labeled precursor.

- Impute RT Outliers

- On

- Uses a local regression analysis of retention time and raw file acquisition time to attempt to identify and correct retention time outliers.

- This algorithm is applied after the cross-correlation based experiment-level replicate analysis.

- This algorithm uses the retention times and acquisition times of the "best" replicates. If these best replicates are all acquired at the start of an experiment, and there is later significant RT drift, the algorithm is not very accurate.

- This algorithm can be useful if there are retention time drifts that vary smoothly with acquisition time. Only use this mode if there are no abrupt shifts in retention time between replicates. Such shifts will cause problems for this algorithm.

- Off

- No local regression analysis of retention time and raw file acquisition time is used. The cross correlation-based experiment-level analysis is still used, however.

- Reference File

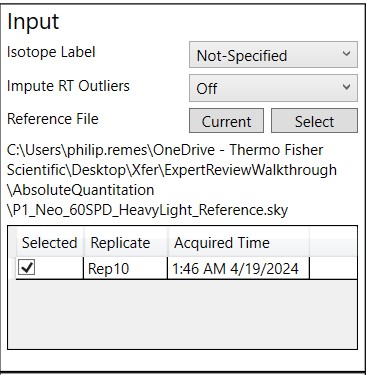

- Expert Review relies on information from a reference replicate or replicates to perform integration boundary determination. The reference can currently be selected in two ways.

- Current

- Select this button to update the Replicates grid with information from the current Skyline file.

- Select

- This button will open a file chooser, whereupon the user can choose another Skyline file to use as a reference. This can allow for faster processing, since the reference data can be smaller and load faster. The only gotcha is that if your reference file transitions for some reason don't overlap with the experiment transitions, or there are precursors in the experiment file that aren't in the reference file, an error will occur.

- Reference File Text Box

- The current reference Skyline file is displayed here. Note that for convenience, Expert Review remembers your last choice of reference Skyline file. This can get you into trouble when you use Expert Review for a new experiment, and you have the old reference specified.

- Reference File Replicate Grid

- The replicates for the reference Skyline file are displayed. For files with multiple replicates, you can select which one or more replicates to use as reference.

- Multiple replicates might make sense in a multi-injection replicate scenario, where all the precursors are spread amongst replicates.

- If you selected multiple replicates, and the same precursor is in both replicates, the replicate information that is actually used is not well defined.

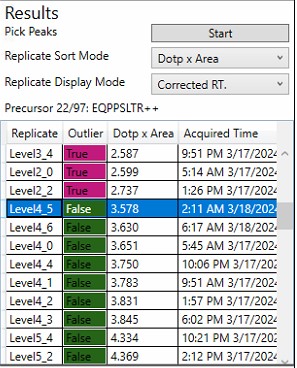
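To make the idea behind the Impute RT Outliers option more concrete, the sketch below flags and replaces a retention time that departs from its neighbors in acquisition order. It is not Expert Review's algorithm: a rolling median is used here as a simple stand-in for the local regression mentioned above, and the function name, window size, and 0.2 min tolerance are all assumptions.

```python
from statistics import median

def impute_rt_outliers(acq_times, rts, half_window=3, tolerance_min=0.2):
    """Replace RTs that deviate from the median of their neighbors in acquisition order.

    A rolling median is a simplified substitute for a local regression; it only
    behaves sensibly when RT drifts smoothly with acquisition time.
    """
    order = sorted(range(len(rts)), key=lambda i: acq_times[i])
    corrected = list(rts)
    for pos, i in enumerate(order):
        lo = max(0, pos - half_window)
        hi = min(len(order), pos + half_window + 1)
        neighbours = [rts[j] for j in order[lo:hi] if j != i]
        if len(neighbours) < 3:
            continue  # too little local information to judge this replicate
        local_rt = median(neighbours)
        if abs(rts[i] - local_rt) > tolerance_min:
            corrected[i] = local_rt
    return corrected

# Ten replicates with a slow drift of 0.01 min per injection and one bad pick.
acq_times = list(range(10))
rts = [10.0 + 0.01 * t for t in acq_times]
rts[5] = 11.0
fixed = impute_rt_outliers(acq_times, rts)
print(fixed[5])
```

An abrupt, persistent shift in retention time would make every replicate after the shift look like an outlier relative to earlier neighbors, which matches the caution above about using this mode only for smooth drifts.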

Results

These parameters are for starting the processing, or perusing the results.

-

Pick Peaks

- Press this button once the Input parameters have been set. Processing will start and can take some time, depending on the size of the Skyline file. For many experiments, it takes less than a minute. The largest Skyline file we tried had 1000 replicates for 83 precursors. It took 40 minutes for Skyline to export the report, and 5 minutes to determine the integration boundaries.

- After the processing finishes, the selected precursor in Skyline becomes the one with the most outliers that were corrected with Experiment-level scoring. If this gets annoying for users, we can remove this feature.

-

Replicate Sort Mode

- Dotp x Area

- The replicates in the grid and the lower plots are sorted from lowest-to-highest dot product times peak area. This can be useful because this is what Expert Review does when categorizing the replicates.

- Acquire Time

- The replicates in the grid and the lower plots are sorted from lowest-to-highest .raw file acquisition time.
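The Dotp x Area ordering can be pictured with a small sketch. The `Replicate` record and its field names are hypothetical and chosen only for illustration; they are not Expert Review's internal data structures.

```python
from dataclasses import dataclass


@dataclass
class Replicate:
    name: str
    dotp: float  # dot product against the reference data
    area: float  # integrated peak area


def sort_by_dotp_x_area(replicates):
    """Return replicates ordered from lowest to highest dotp * area."""
    return sorted(replicates, key=lambda r: r.dotp * r.area)


# Illustrative values only.
reps = [
    Replicate("rep_A", dotp=0.95, area=2.0e6),
    Replicate("rep_B", dotp=0.40, area=5.0e5),
    Replicate("rep_C", dotp=0.90, area=1.0e6),
]
ordered = [r.name for r in sort_by_dotp_x_area(reps)]
print(ordered)
```

Multiplying the two values means a replicate ranks low if either its spectral match or its signal is weak.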

-

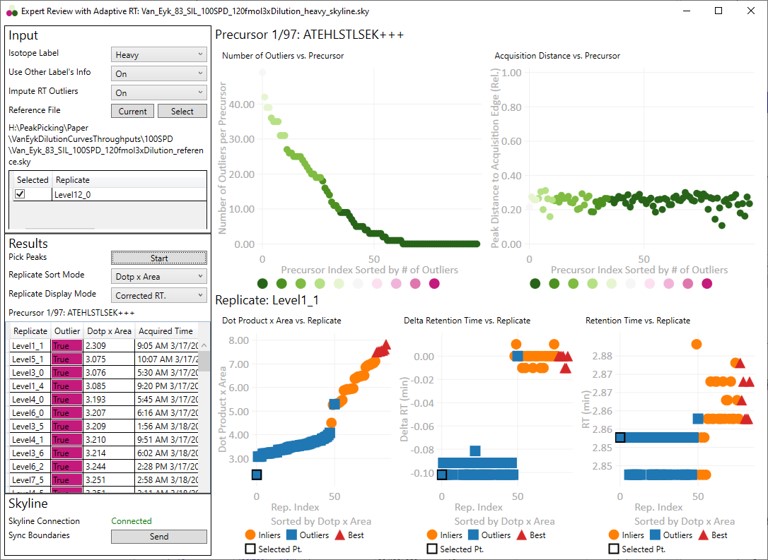

Replicate Display Mode

- Un-corrected RT.

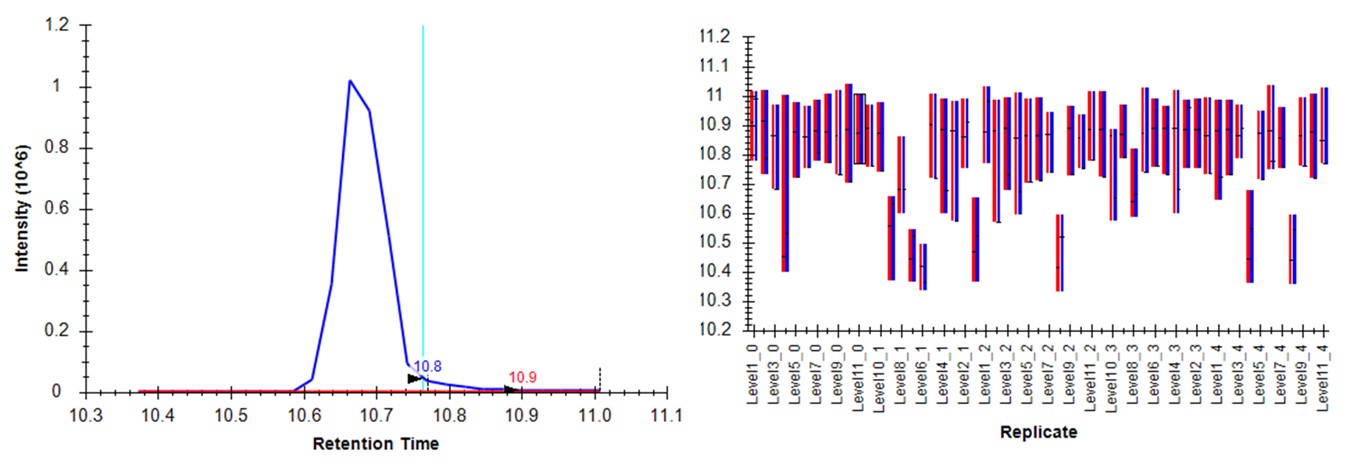

- The retention time graph presents the retention times as they were after the Replicate-Level scoring. These retention times are never sent to Skyline.

- Corrected RT.

- These are the retention times after both Replicate-Level and Experiment-Level scoring. These retention times are sent to Skyline when the Send button is pressed.

-

Replicate Grid

- The currently selected precursor name and outlier order number are displayed at the top of the grid. The outlier order number is the rank of this precursor, where #1 has the most outliers that were corrected with Experiment-level scoring, and the last (97 for this assay) has the fewest outliers.

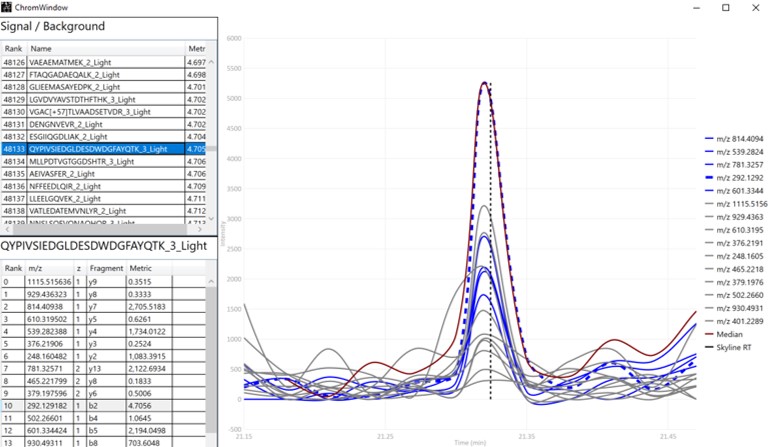

- Clicking any row will sync this precursor and replicate to Skyline, so you can see what the chromatogram looks like. The graphs on the right will be updated to display a black outline around the currently selected replicate.

- If the grid is selected, you can press the Up and Down key to advance the rows.
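The outlier order number described above amounts to a descending rank over the per-precursor count of corrected outliers. A minimal sketch follows, assuming the counts are available as a dictionary. The precursor names and counts are made up, and how Expert Review breaks ties is not stated, so ties here simply keep input order.

```python
def outlier_order(corrected_outliers):
    """Map each precursor to its rank; rank 1 has the most corrected outliers."""
    ranked = sorted(
        corrected_outliers,
        key=lambda precursor: corrected_outliers[precursor],
        reverse=True,
    )
    return {precursor: rank for rank, precursor in enumerate(ranked, start=1)}


# Hypothetical counts of outliers corrected by Experiment-level scoring.
counts = {"PEPTIDEA": 2, "PEPTIDEB": 7, "PEPTIDEC": 0}
order = outlier_order(counts)
print(order)
```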

Skyline

This section is used to send the Expert Review integration boundaries to Skyline.

- Skyline Connection

- This field reports whether the associated Skyline file is open or not. If the Skyline file is closed, the integration boundaries can no longer be sent to that file.

- Send

- Pressing this button causes the calculated integration boundaries from Expert Review to be imported to the associated Skyline document.

Graphs

Clicking on any point is synchronized with Skyline, such that the chromatogram for that precursor is displayed. Clicking on a point will update the lower graphs with the replicate information for that precursor. Pressing the Left or Right key will advance the precursor in that direction.

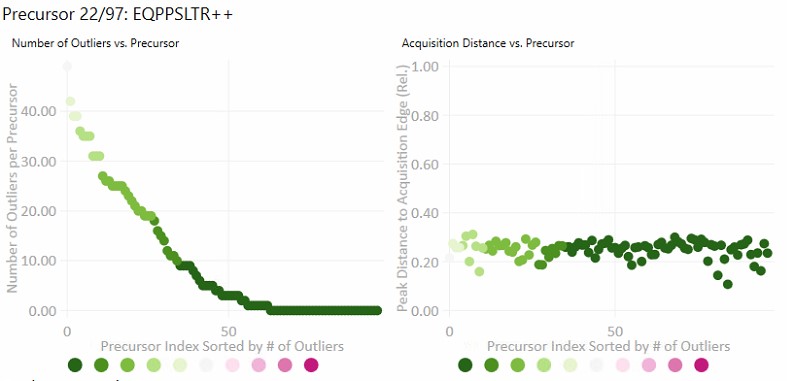

- Precursor-level graphs

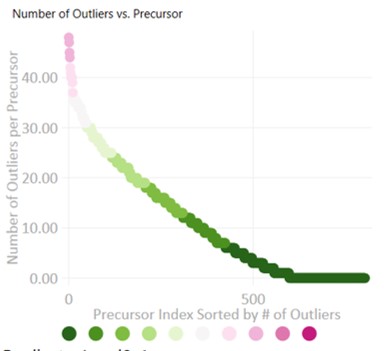

- Number of Outliers

- The left plot displays the sorted number of outliers that were corrected by the Experiment-level scoring for each precursor. A precursor with many corrected outliers will not necessarily have integration boundary errors in the final output. It does indicate some ambiguity in the data, though, so the precursors with the most corrected outliers are probably worth investigating.

- Acquisition Distance

- This plot depicts the average relative distance of the peak apex to an edge of an acquisition. For example, if the peak is at 10.4 minutes, and the acquired spectra spanned the range from 10.0 to 12 minutes, then the relative distance would be 0.4 / 2.0.

- It may be worth investigating precursors that have very small acquisition distances to see if there is any truncation of the peaks.
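The worked example for Acquisition Distance (apex at 10.4 minutes, acquisition from 10.0 to 12 minutes, relative distance 0.4 / 2.0) can be written as a short function. This sketch assumes the distance is measured to the nearest acquisition edge and that the per-precursor value is a plain mean over replicates. The function names are illustrative.

```python
from statistics import mean


def relative_acquisition_distance(apex_rt, acq_start, acq_end):
    """Distance from the peak apex to the nearest acquisition edge,
    as a fraction of the acquisition span (assumed definition)."""
    span = acq_end - acq_start
    if span <= 0:
        raise ValueError("acquisition span must be positive")
    nearest_edge = min(apex_rt - acq_start, acq_end - apex_rt)
    return nearest_edge / span


def average_acquisition_distance(observations):
    """Average over (apex_rt, acq_start, acq_end) tuples, one per replicate."""
    return mean(relative_acquisition_distance(*obs) for obs in observations)


example = relative_acquisition_distance(10.4, 10.0, 12.0)
print(round(example, 3))
```

Values near zero indicate an apex close to a window edge, which is where peak truncation is most likely.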

- Replicate-level graphs

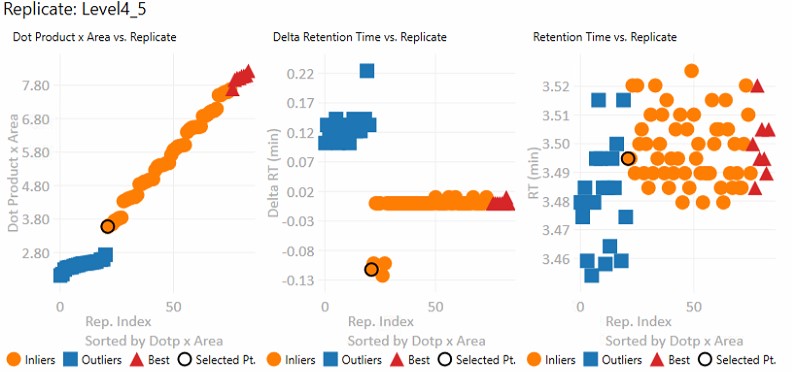

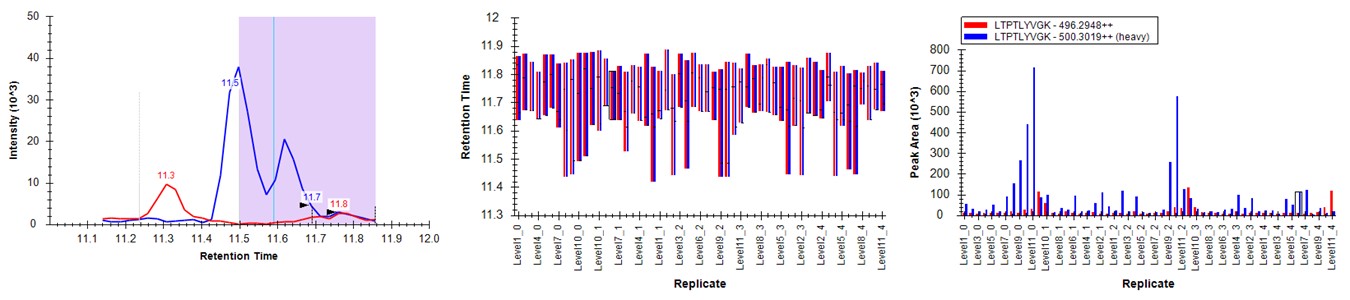

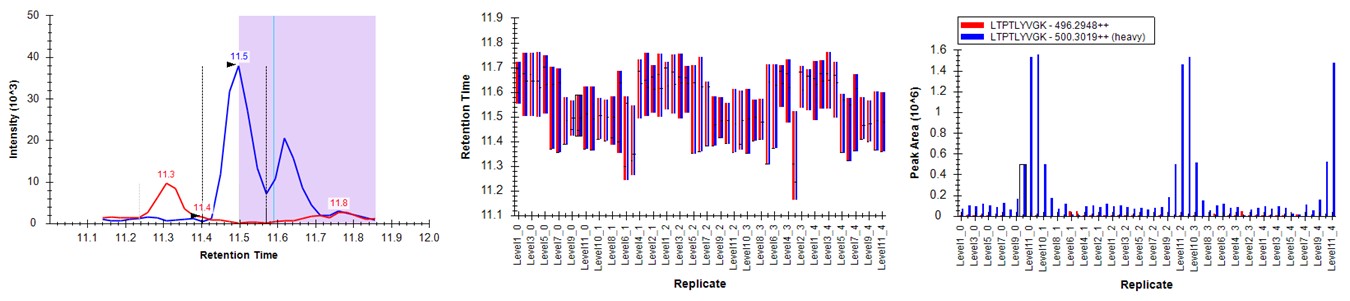

These graphs show information for all the replicates for the currently selected precursor.

- Dot product x Area

- The dot product is relative to the reference data set. This metric is a way to rank the quality of a replicate.

- The "best" group, shown as red triangles, is determined by this metric.

- Inliers are replicates that aren't in the best group, but did not have their RT updated by Experiment-level scoring.

- Delta Retention Time

- This is the difference between the peak RT and the average RT of its neighboring peaks. This value is likely to be conserved even when there is an absolute RT shift across replicates, and we have investigated using this value for outlier identification and correction.

- Retention Time

- These are the apex retention times of the picked peaks. They can be displayed with the Experiment-level outliers corrected, or uncorrected, depending on the value of Results:Replicate Display Mode.

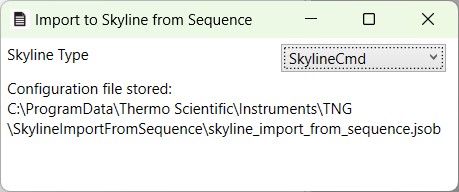

Sequence Import Configure

Sequence Import Configure Reference

Sequence Import Configure is a tiny application that saves a file on the instrument computer. This file allows .raw files to be imported automatically into a Skyline file after the .raw file acquisition is finished, freeing you from manual File / Import / Results operations.

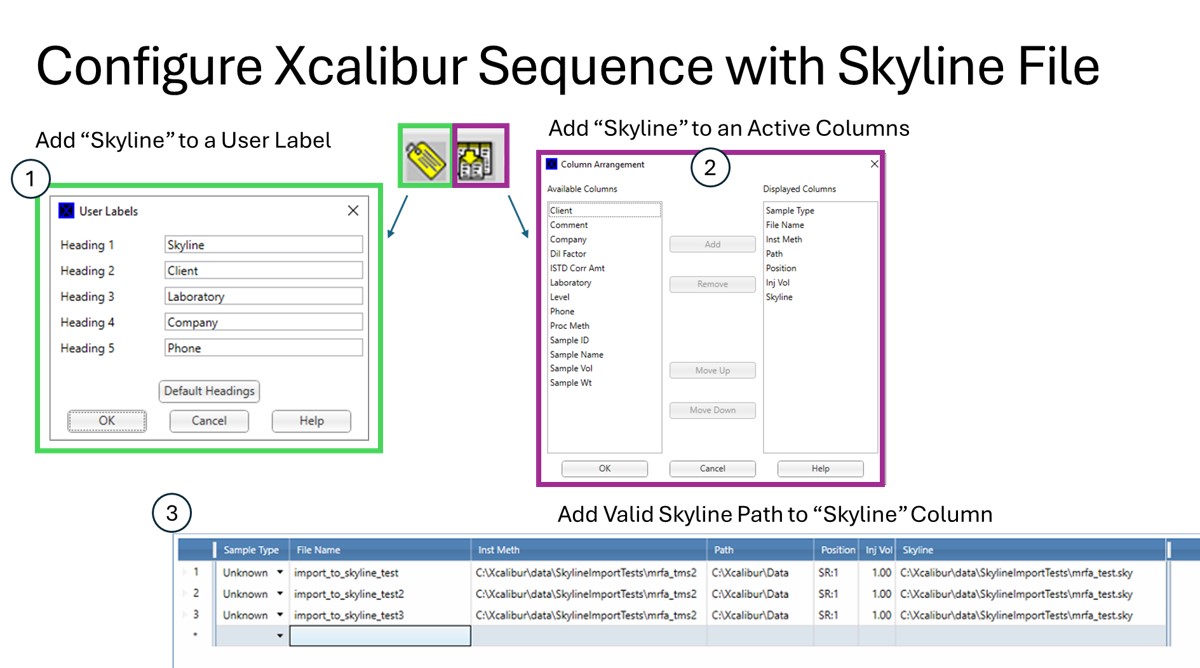

Steps to configure .raw file import to a Skyline file

-

Run this program. There are no options; just run it. The program finds the SkylineCmd.exe application path and saves it to a fixed location so that the instrument software can use it to import .raw files.

-

In a future Xcalibur 4.8 release, there will be a native Skyline column that has a file chooser and does some simple validation. This column will be accessed by a future Stellar MS software release, 1.2.

- For Xcalibur < 4.8 and Stellar 1.1 software the following few steps should be taken:

- Add Skyline to an Xcalibur "User Label".

- Configure the new Skyline column by adding it to the Active columns.

- In the Xcalibur sequence, add the full file path to an existing Skyline file, including the .sky extension. For example, D:\MyFiles\skyline_test.sky

That's it!

- The import task has to wait for the LC to finish its run and for the .raw file to close and become available for import. This can take a while, even 5-10 minutes, depending on your LC setup and equilibration settings.

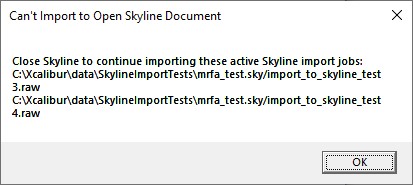

- Note that if you have the Skyline file open when the acquisition ends, we will raise a little dialog telling you that we can't import the .raw files until you close the Skyline file.

- We'll keep a queue of the pending import jobs for up to 72 hours, then we'll give up.

- If you manually imported the .raw file, we will not import it again.
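For orientation, the manual equivalent of one automated import can be expressed as a SkylineCmd call. This is only a sketch of what such a call looks like, using SkylineCmd's `--in`, `--import-file` and `--save` arguments. It is not a description of how the instrument software invokes SkylineCmd internally, and the SkylineCmd.exe and .raw paths are hypothetical.

```python
import subprocess


def build_import_command(skyline_cmd, sky_file, raw_file):
    """Build a SkylineCmd call that imports one .raw file and saves the document."""
    return [
        skyline_cmd,
        f"--in={sky_file}",
        f"--import-file={raw_file}",
        "--save",
    ]


command = build_import_command(
    r"C:\path\to\SkylineCmd.exe",    # hypothetical install location
    r"D:\MyFiles\skyline_test.sky",  # example path from the text above
    r"D:\MyFiles\run_001.raw",       # hypothetical .raw file
)
print(" ".join(command))

# To actually run it on a computer with Skyline installed:
# subprocess.run(command, check=True)
```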

PRM Conductor Walkthrough

PRM Conductor Introduction

PRM Conductor is a software program that plugs into the Skyline External Tool ecosystem. The purpose of PRM Conductor is to aid in the creation of parallel reaction monitoring (PRM) mass spectrometry methods. The basic functions of the program are as follows:

- Receive DIA or PRM data

- Filter transitions with a set of filters

- Keep only precursors that have at least N good transitions

- Visualize the scheduling of one or more PRM assays with particular acquisition parameters

- Export an instrument method for the new assay(s)

- Support the setup of the Adaptive RT algorithm for real-time chromatogram alignment
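The two filtering steps in the list above can be sketched as a transition filter followed by a precursor filter. The `is_good` predicate stands in for whatever combination of transition filters is configured in PRM Conductor. The relative-area cutoff, precursor names and values below are hypothetical.

```python
def keep_good_precursors(transitions_by_precursor, is_good, min_good):
    """Drop bad transitions, then keep precursors with at least min_good left."""
    kept = {}
    for precursor, transitions in transitions_by_precursor.items():
        good = [t for t in transitions if is_good(t)]
        if len(good) >= min_good:
            kept[precursor] = good
    return kept


# Hypothetical data: each transition is (ion name, relative area).
data = {
    "PEPTIDEK++": [("y3", 0.30), ("y4", 0.25), ("y5", 0.02), ("b2", 0.20)],
    "SAMPLER++": [("y3", 0.50), ("y4", 0.01), ("b2", 0.03)],
}
result = keep_good_precursors(data, is_good=lambda t: t[1] >= 0.05, min_good=3)
print(sorted(result))
```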

Location of the Walkthrough Materials

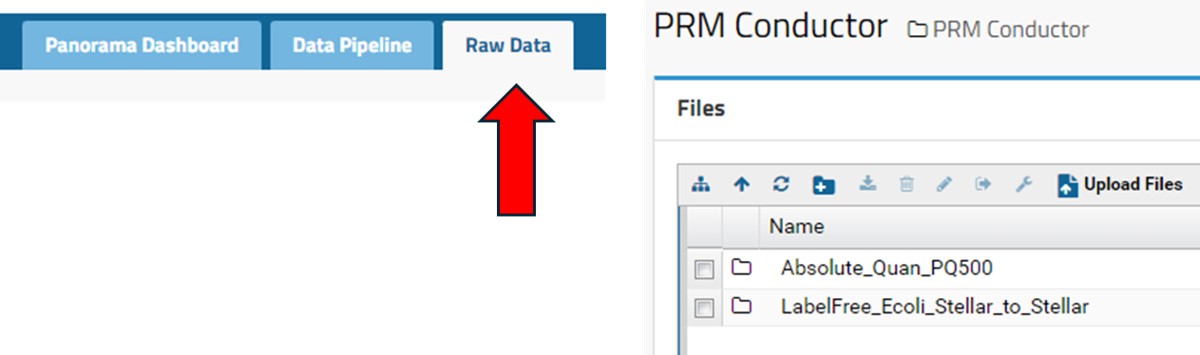

All the documents needed to perform the walkthroughs can be found by clicking the Raw Data tab on the top right hand side of this page.

Example Scenario

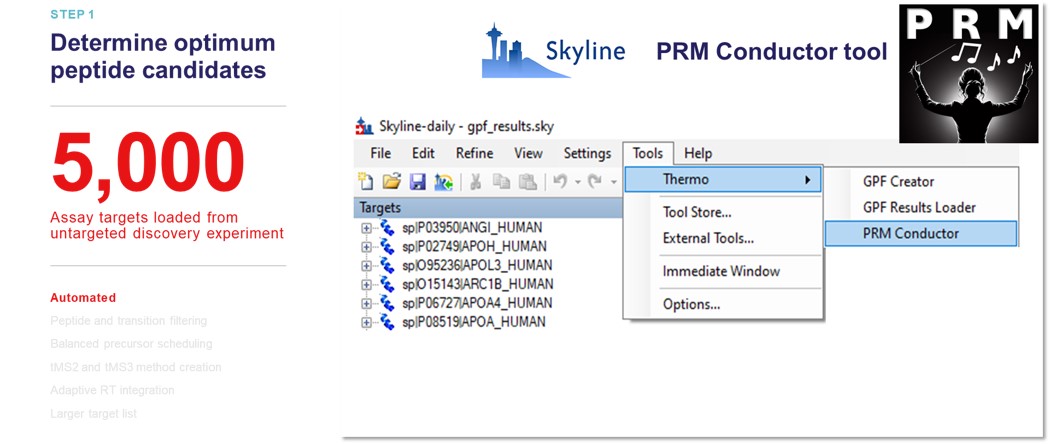

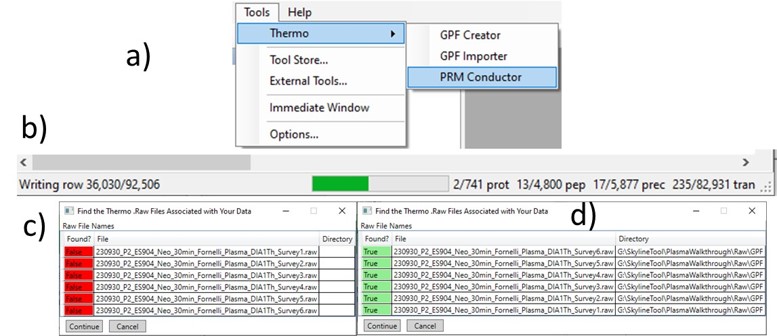

PRM Conductor is launched from Tools / Thermo / PRM Conductor. In one test case, we imported DIA data containing more than 5000 unique peptide identifications.

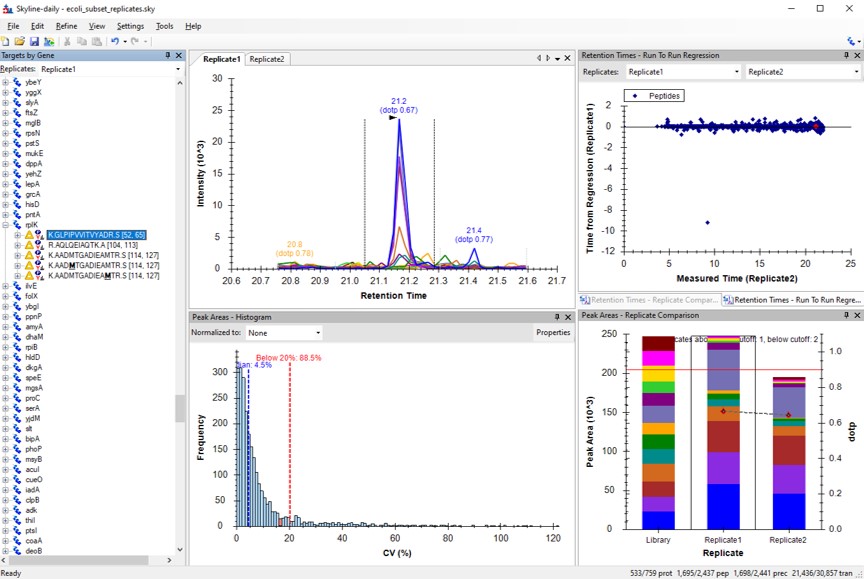

After applying the transition filters listed in the Refine Targets section, there were about 3000 'good' precursors left. The user can alter parameters in the Define Method section, such as the Analyzer type, the minimum points per LC peak, and the scheduled acquisition window, and see how the 'good' precursors fit into a scheduled assay. The yellow trace shows precursors that can be acquired in less than the cycle time, while the red trace shows those that cannot. The user can then export a method with the Create Method section, where they specify an instrument method template with LC details; the template is used to create a new method with the acquisition settings and precursor list filled in.
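The relationship between points per peak, cycle time and scheduling can be illustrated with a rough sketch. It assumes the cycle time is the LC peak width divided by the minimum points per peak, and that every precursor costs the same fixed acquisition time. PRM Conductor's actual timing model is not described here, and all numbers are illustrative.

```python
def cycle_time_s(peak_width_s, min_points_per_peak):
    """Longest cycle that still samples a peak the required number of times."""
    return peak_width_s / min_points_per_peak


def required_time_s(windows_min, time_per_precursor_s, t_min):
    """Time needed to acquire every precursor scheduled at time t_min."""
    active = sum(1 for start, end in windows_min if start <= t_min <= end)
    return active * time_per_precursor_s


budget = cycle_time_s(peak_width_s=12.0, min_points_per_peak=8)

# Scheduled acquisition windows (start, end) in minutes for three precursors.
windows = [(9.0, 11.0), (10.0, 12.0), (10.5, 12.5)]
fits_early = required_time_s(windows, 0.6, t_min=9.5) <= budget
fits_late = required_time_s(windows, 0.6, t_min=10.7) <= budget
print(budget, fits_early, fits_late)
```

Narrowing the acquisition windows reduces how many precursors overlap at any one time, which is why the window width changes what fits into an assay.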

Walk-throughs

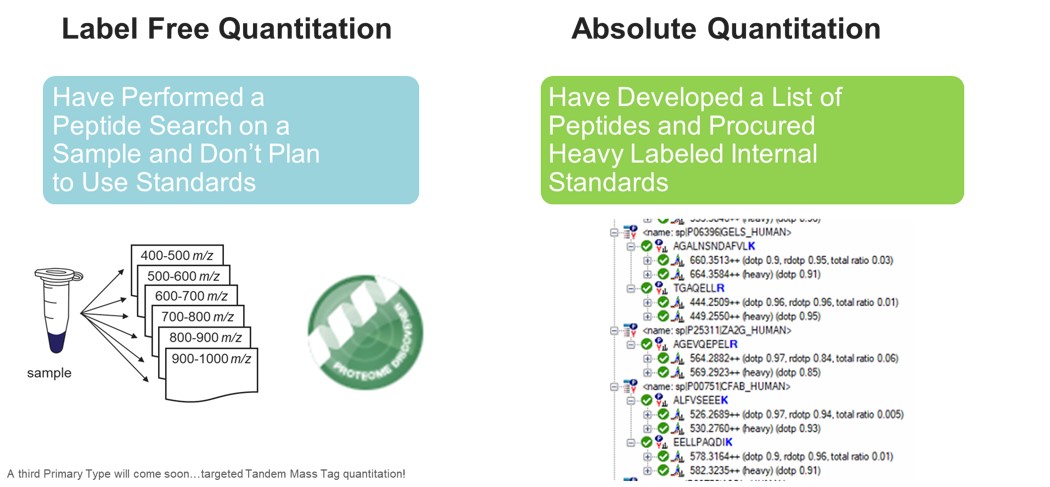

Several walk-throughs have been created to teach users how to make targeted methods with PRM Conductor. At present these methods generally fall into two categories: the absolute quantitation category, where heavy standards are created for each endogenous peptide to be monitored, and the label-free category, where the assay is created directly from peptide search results and there are no heavy standards.

Available Walk-throughs

- Absolute Quantitation

- PQ500

- Label Free

- E. coli in HeLa

- Hypothesis-driven Discovery with Multiple Target Monitoring

Absolute Quantitation - PQ500

Biognosys PQ500 Introduction

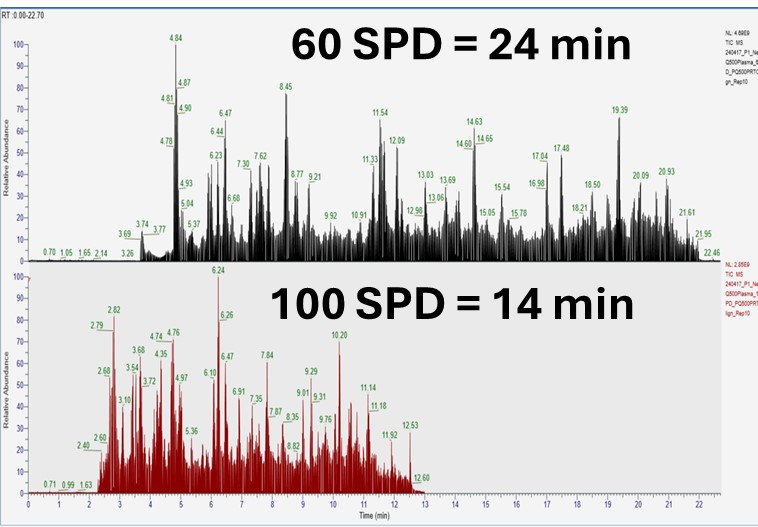

This tutorial will show you how to create a targeted MS2 assay that uses heavy standards for absolute quantitation. The Biognosys PQ500 standard is used as the source of heavy standards. We used the Vanquish Neo LC, an ES906A column, and a trap-and-elute injection scheme with a 60 SPD method and a 100 SPD method. The gradients have been designed so that compounds elute over a large portion of each experiment's time span.

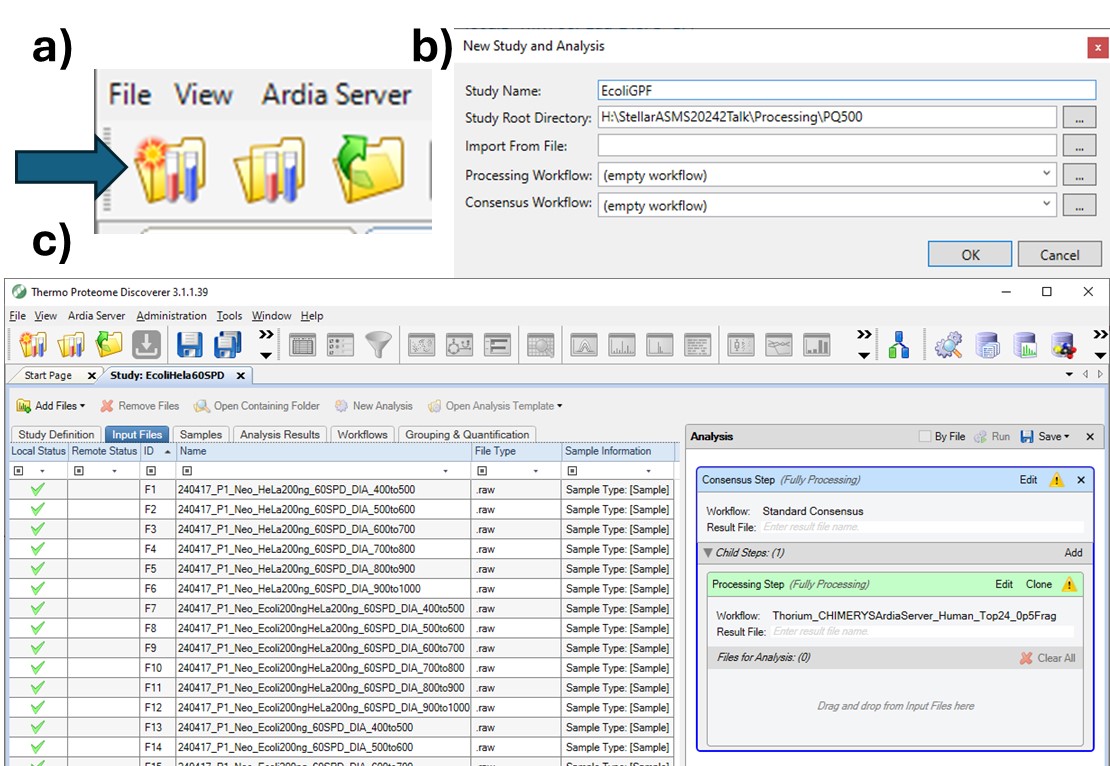

Setting up the Skyline Document

Pierce retention time calibration mixture (PRTC) is used here to create an indexed retention time (iRT) calculator. Along with a spectral library, the iRT calculator will aid Skyline in picking the correct LC peaks in the steps that follow. See the Skyline iRT tutorial for more details. Here we will use an iRT calculator created with Koina. After setting up the LC and column, we run unscheduled PRTC injections to ensure that the LC and MS system is stable. The method file 60SPD_PRTC_Unscheduled.meth can be used for this. The prtc_unscheduled.csv file could be used to import into a tMSn table if making a method from scratch. We like to use Auto QC with Panorama to store all our files, and to automatically upload and visualize QC data.

Now we will create a Skyline document for analyzing PQ500 heavy labeled peptides. Biognosys supplies a transition list with intensities and iRT values that can be used to create a spectral library and iRT calculator. We'll also show you how to use the Koina integration with Skyline to create a spectral library and iRT library from a list of peptide sequences when you don't know anything about them. We also tend to use Koina spectral libraries even when lists of transitions are supplied, because we will be using PRM Conductor to automatically filter the transitions. At the end of this section, we’ll be ready to perform unscheduled PRM for the PQ500 heavy-labeled peptide standards.

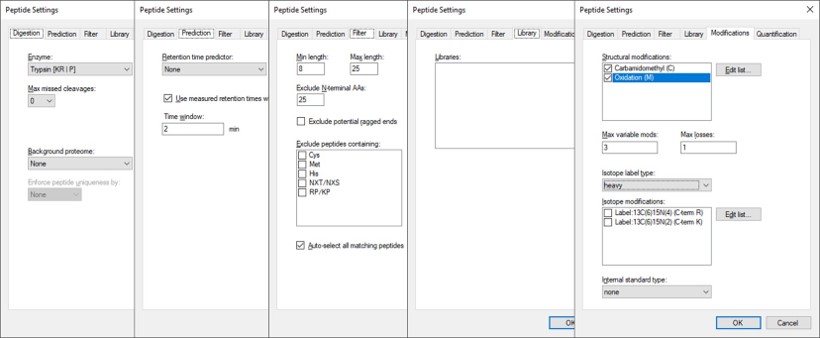

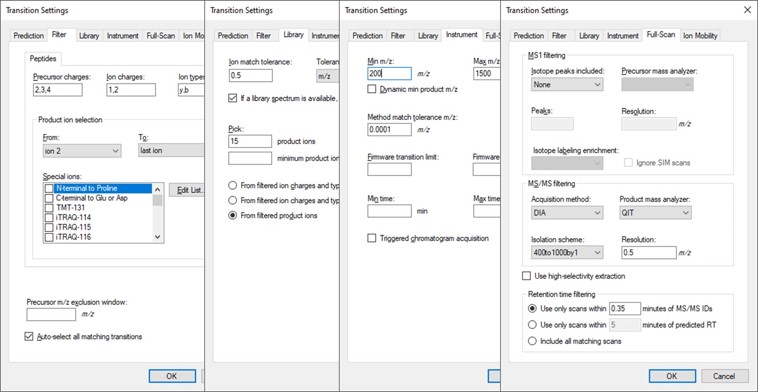

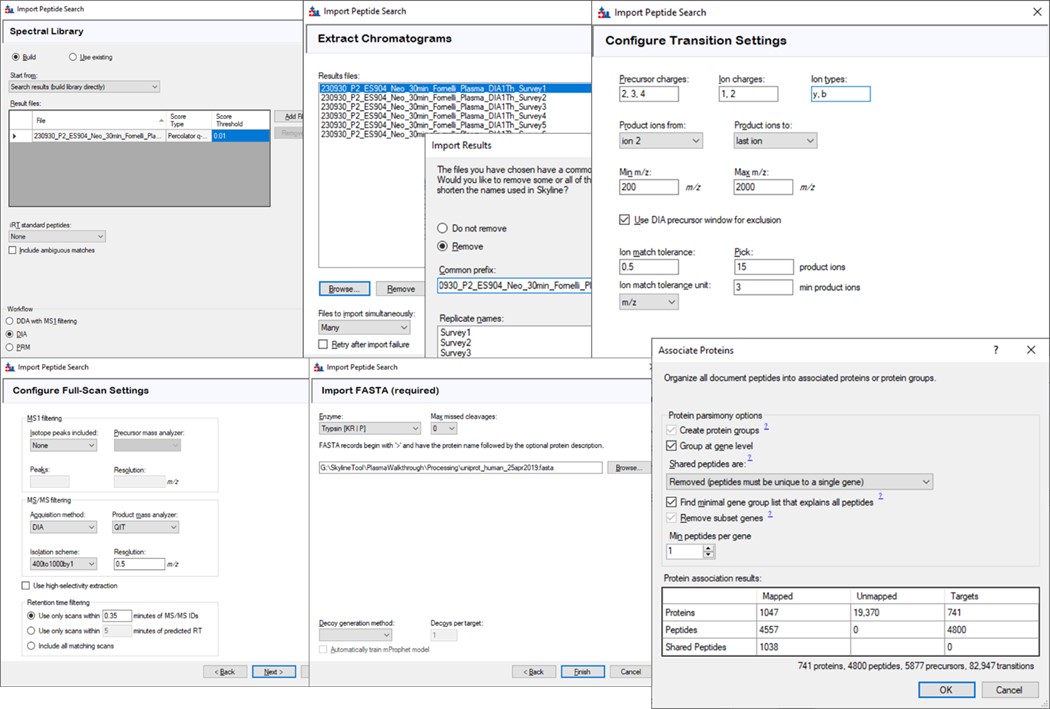

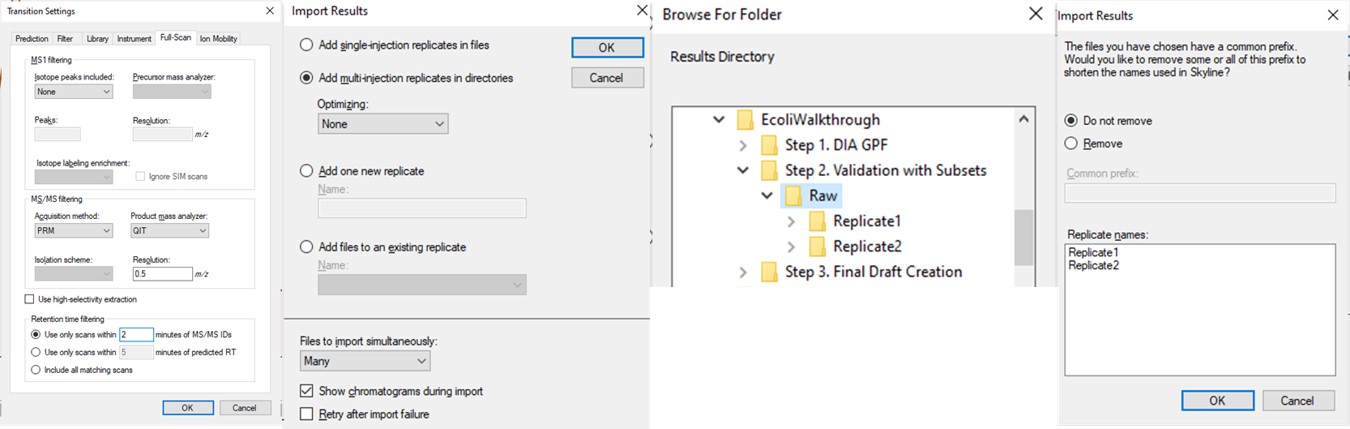

Transition Settings

Open up Skyline Daily and create a new document. Save the document as Step 1. Setup Skyline Documents/pq500_60spd_neat_multireplicate.sky.

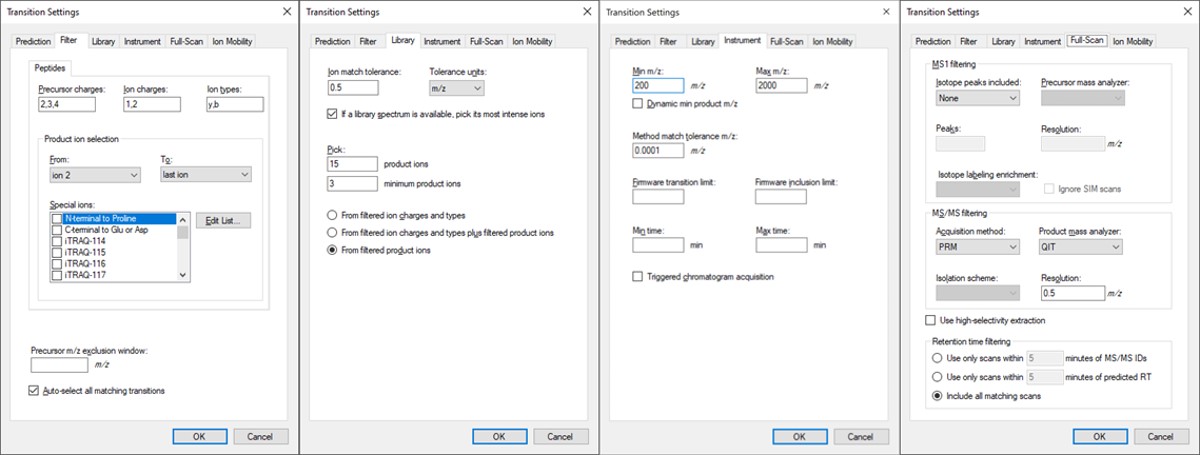

- Open Settings / Transition Settings / Filter. Set Precursor charges to ‘2,3,4’, Ion Charges to ‘1,2’, and Ion types to ‘y,b’. In the Product ion selection section, select ‘From ion 2’ to ‘last ion’. Un-select N-terminal to Proline and keep “Auto-select all matching transitions” checked.

- In the Library tab, set Ion match tolerance 0.5, check the box “If a library spectrum…”, set Pick 15 product ions with minimum 3 product ions. 15 is a large number, but we will refine the transitions later with the PRM Conductor tool. Select “From filtered product ions”.

- In the Instrument tab, set Min m/z 200, and Max m/z 2000. Set the Method match tolerance m/z to 0.0001. This helps Skyline to differentiate between precursors that have very close m/z. As we’ll see later, there are sometimes still peptides with different sequences but the same exact m/z.

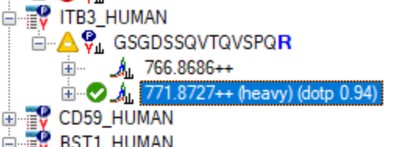

- In the Full-Scan tab, set MS1 filtering / Isotope peaks included to None. If there are any precursor transitions, PRM Conductor will think that the document is in DDA mode, and will not be full featured. Set MS/MS filtering / Acquisition Method to PRM, Product mass analyzer to QIT, and Resolution to 0.5 m/z. Set Retention time filtering to Include all matching scans. Press Okay to close the Transition Settings.

Peptide Settings

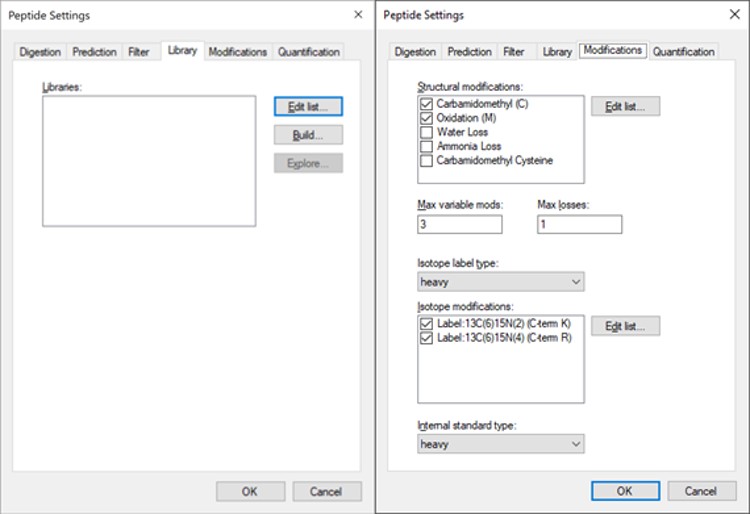

Open Settings / Peptide Settings.

- In the Library tab, uncheck or remove any libraries that are there, for simplicity's sake.

- In the Modifications tab, make sure that Carbamidomethyl (Cysteine) and Oxidation (Methionine) modifications are enabled. The C-term R and C-term K isotope modifications should be enabled. Isotope label type and Internal standard type should be set to heavy.

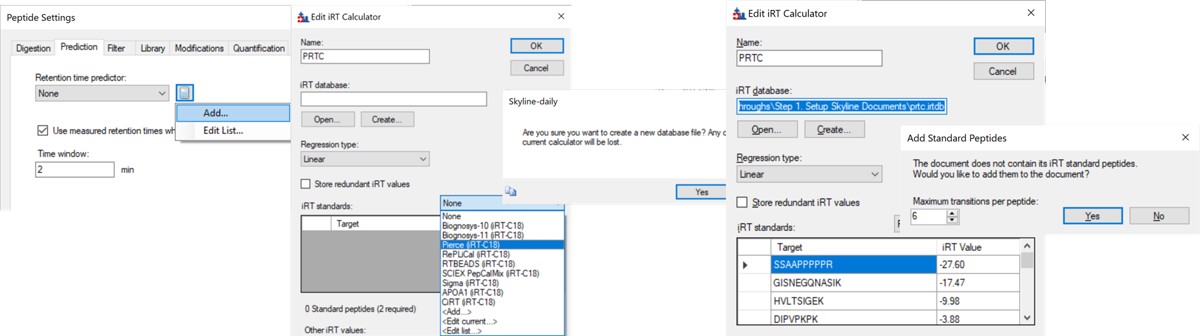

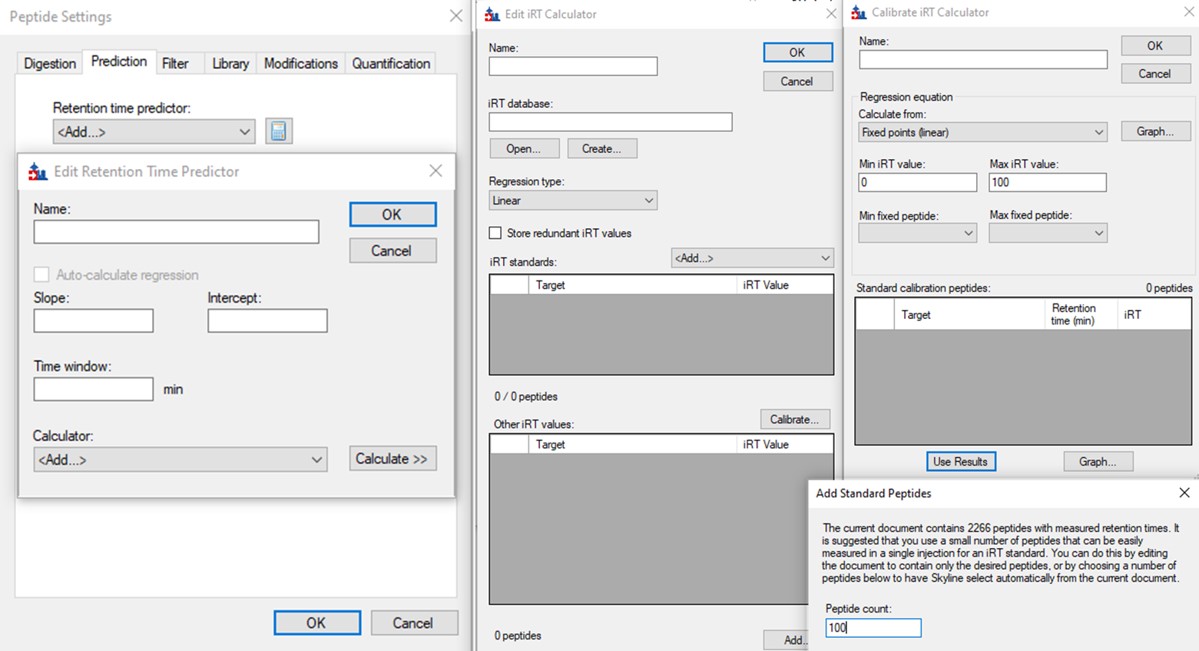

iRT Calculator from PRTC

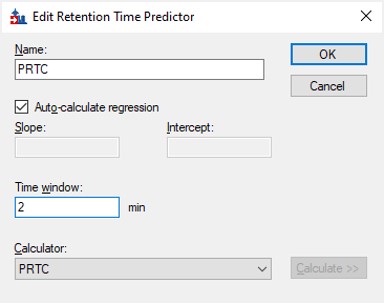

- In the Prediction tab, select the calculator icon and press Add. Add a name like PRTC. In the iRT Standards drop-down menu, select Pierce (iRT-C18). Press the Create button, and select Yes when asked if you want to create a new database file. Give the file a name like prtc.irtdb. Press Okay.

- Go back into the Peptide Settings / Prediction tab, click the dropdown arrow and select Edit current. Set the window to 2 minutes, and press Okay.

Importing the Transition List

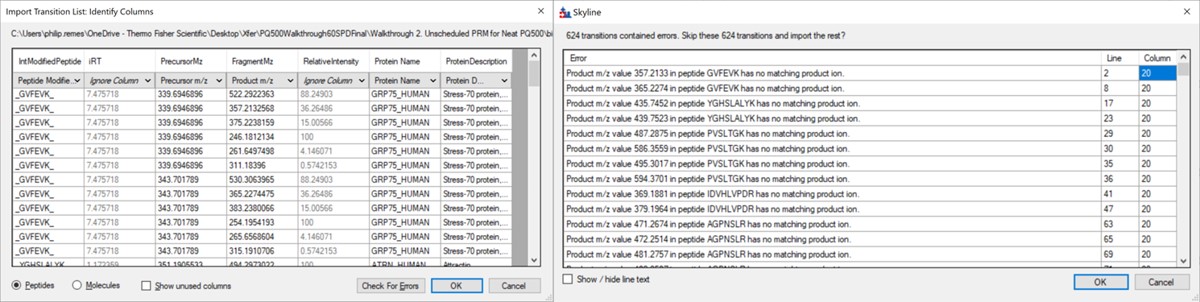

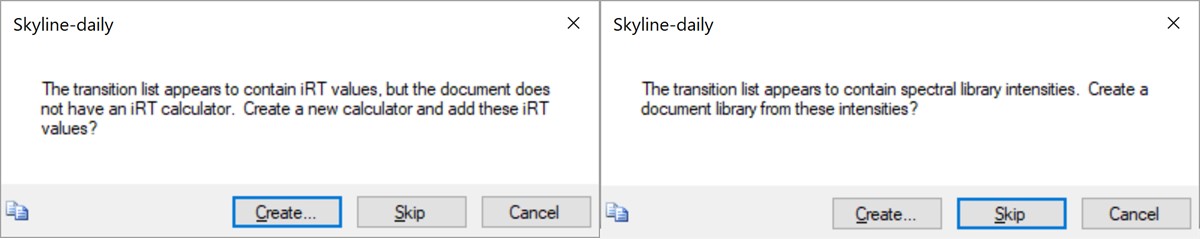

Use File / Import / Transition List and select the file Step 1. Setup Skyline Documents/biognosis_pq500_transition_list.csv. A dialog opens that shows the mapping of the file headers to Skyline variable names. Press Okay to continue on. A new dialog prompts us that 624 transitions are not recognized. These are water losses that we don't necessarily need. We could define water loss transitions in the Settings tabs if we really wanted them. Press Okay twice to exit the iRT calculator dialogs. A new dialog will ask if you want to add the Standard Peptides, choose 6 transitions and press Yes.

Another dialog appears, asking if we want to make an iRT calculator. The Biognosys values are presumably based on experiment, and are slightly more accurate than the in silico predicted iRT values from Koina, so click Create. You'll be asked if you want to create a spectral library from the intensities in the transition list. Feel free to press Create if you want, but we will press Skip and use Koina to predict the intensities next. The Skyline document will update, and the bottom right border will display 579 prot, 818 pep, 1622 prec, 9020 tran.

Koina

Now we’ll generate a spectral library with Koina.

- Open Tools / Options and go to the Koina tab. Set the intensity model to ms2pip_2021_HCD, the iRT model to AlphaPept_rt_generic, and NCE to 30. Note that our original assay was created when this option tab said Prosit, but it had changed in a new Skyline Daily release by the time that this walkthrough was created. Press Okay.

- Go to Settings / Peptide Settings / Library / Create and select Data source Koina, NCE 30, and set a name and output path for the library. Press Finish. Make sure the library is selected in Peptide Settings Library and press Okay. If everything works correctly and you have internet access, your computer will communicate with the Koina server and return the spectral library to you, and the peptides in the Skyline tree will receive little spectra images to the left of the sequences, like below.

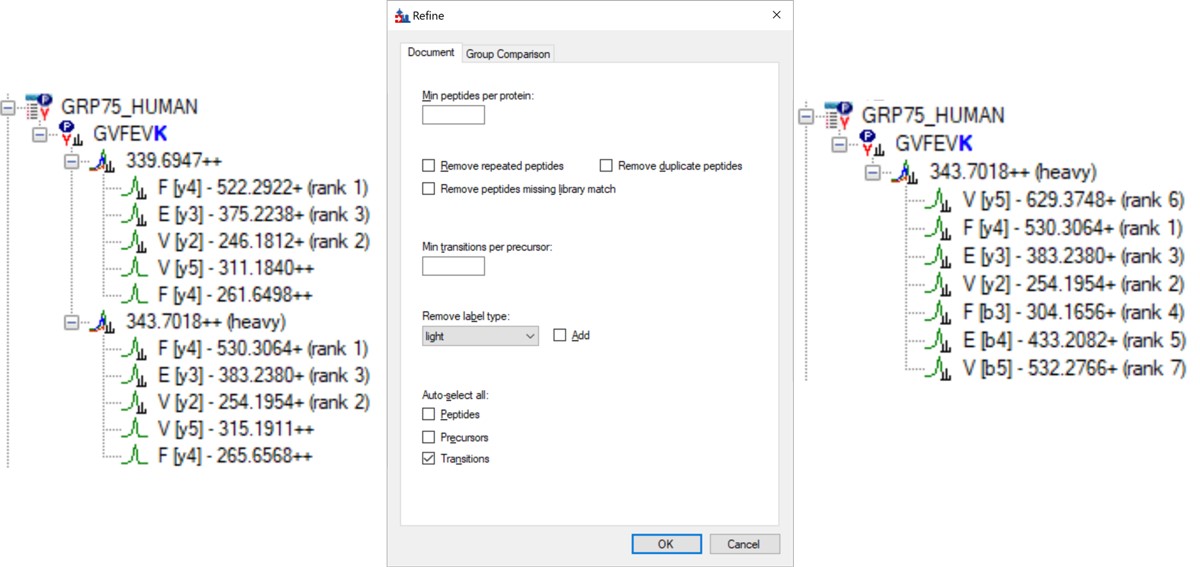

- Open Refine / Advanced and select Remove label type light, and click the box for Auto-select all Transitions, and press Okay. If you expand a peptide, like the first one, GVFEVK, it will have up to 15 transitions, instead of the up to 6 in the Biognosys transition file. Save the Skyline document. This is the end of Step 1: Setting up the Skyline Document.

Neat Unscheduled Runs

Unscheduled PRM for Neat Heavy PQ500 with Multiple Replicates

In this step, we’ll use Skyline to create a set of unscheduled PRM methods for the 804 PQ500 peptides. At the end of this step, we will have created 10 Unscheduled PRM methods for both 60 and 100 SPD, acquired data for them, loaded the results into Skyline, and assessed the results. We’ll be ready to look at our standards spiked into matrix in the next step.

Alternative DIA GPF Acquisition

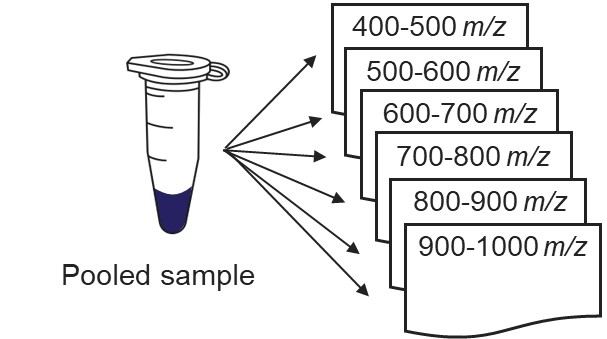

Alternatively, especially as the number of heavy peptides increases, one could opt to use data independent acquisition (DIA) of the neat, heavy standards to find their retention times. One would simply find the smallest and largest m/z of the peptides in question and use the Thermo method editor to create a DIA method. For example, we have had success in some neat standard cases using a single injection with 4 Th isolation width. However, multiple gas-phase fractions (GPF) could be acquired with narrower isolation widths, as in the technique we use for identification of unknowns. We included a little helper application in our Thermo suite of external tools called GPF Creator that spawns GPF instrument methods. Given a set of parameters, namely a precursor m/z range and a Stellar DIA method template, it will create a cloned set of methods with the appropriate Precursor m/z range filled in. In the case below with Precursor m/z range 400-1000 and 6 experiments, methods would be created for the ranges 400-500, 500-600, all the way to 900-1000. The resulting .raw files could be used in much the same way that we’ll use the unscheduled PRM data files in the coming steps, except that we would have to configure the Skyline Transition / Full Scan / Acquisition to DIA with the appropriate window scheme (e.g. 400 to 1000 by 1 with Window Optimization On). As it is, we continue on, using the Unscheduled PRM technique.
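The range arithmetic in the GPF example (400-1000 m/z split across 6 experiments) can be reproduced with a few lines. This sketch assumes equal-width, contiguous fractions with no overlap at the boundaries; GPF Creator may treat the edges differently. The function name is illustrative.

```python
def gpf_ranges(mz_start, mz_end, n_experiments):
    """Split a precursor m/z range into equal-width, contiguous fractions."""
    if n_experiments < 1 or mz_end <= mz_start:
        raise ValueError("need a positive range and at least one experiment")
    width = (mz_end - mz_start) / n_experiments
    return [
        (mz_start + i * width, mz_start + (i + 1) * width)
        for i in range(n_experiments)
    ]


ranges = gpf_ranges(400, 1000, 6)
for low, high in ranges:
    print(f"{low:.0f}-{high:.0f}")
```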

Creating Unscheduled PRM Methods

The newest Skyline release supports Stellar for exporting isolation lists and whole methods, which is convenient as it saves the step of importing isolation lists for each of the methods. However, for completeness we'll also describe how to use the isolation list dialog with manual import into method files.

Isolation List with Manual Import to Instrument Method Files

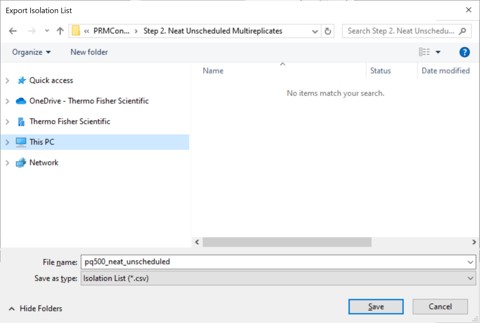

- Open the dialog using File / Export / Isolation List. Set Instrument type Thermo Stellar, select Multiple methods, Max precursors per sample injection to 100, Method type Standard. Note that the max value of 100 is approximate. A smaller number could be required for more narrow peaks or shorter gradients. Press Okay.

- A Save-As dialog will open. Use the name pq500_neat_unscheduled in the folder Step 2. Neat Unscheduled Multireplicates. In a moment, 10 .csv files with the suffix _0001 to _0010 will appear in the folder. An important aspect of the iRT workflow is that Skyline included the PRTC compounds in each of the 10 files. This allows Skyline to calculate the relative positions of the rest of the peaks, which will eventually be added to our iRT library.

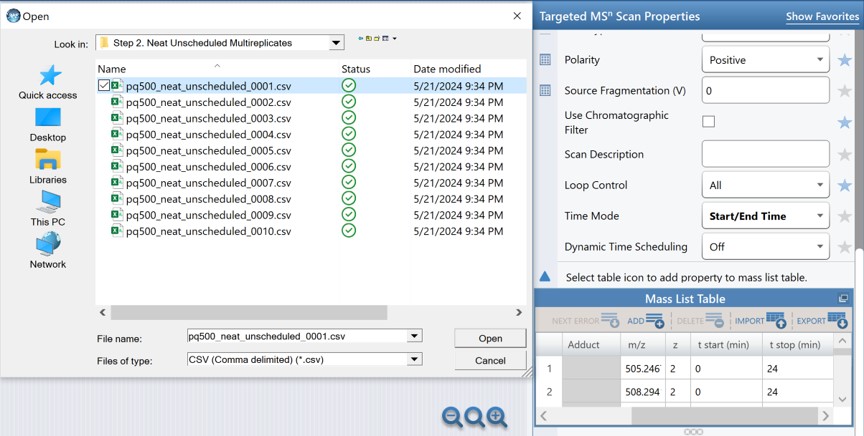

- Open the Stellar method editor and open the file Step 2. Neat Unscheduled Multireplicates/pq500_60spd_neat_multireplicate.meth. In the tMSn experiment in the bottom right you can find the Mass List table and press the Import button to load the first of the created isolation lists, pq500_neat_unscheduled_0001.csv. Use File / Save-As to save the method as pq500_neat_unscheduled_0001.meth. Do this for each of the other 9 isolation lists, creating a total of 10 methods. At this point you would create an Xcalibur sequence with these 10 methods, injecting an appropriate amount of neat standard (ex 10-100 fmol for a 1 ul/min, 24 min assay), and creating 10 raw files. We have performed this experiment for both 60 and 100 SPD methods, and put the resulting .raw files in the folder Step 2. Neat Unscheduled Multireplicates/Raw.
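The idea of splitting targets across several unscheduled methods while repeating the iRT standards in each one can be sketched as below. This assumes the standards count toward the per-method maximum, which is an assumption and not a statement of how Skyline computes the split. The names and numbers are a toy example.

```python
def split_into_methods(targets, standards, max_per_method):
    """Chunk targets so every method also carries all of the standards."""
    room = max_per_method - len(standards)
    if room <= 0:
        raise ValueError("max_per_method must exceed the number of standards")
    methods = []
    for start in range(0, len(targets), room):
        methods.append(list(standards) + targets[start:start + room])
    return methods


# Toy example: 7 targets, 2 standards, at most 4 precursors per method.
targets = [f"target_{i}" for i in range(1, 8)]
standards = ["std_1", "std_2"]
methods = split_into_methods(targets, standards, max_per_method=4)
for number, method in enumerate(methods, start=1):
    print(f"_{number:04d}", method)
```

Because every method carries the standards, each resulting .raw file can be placed on the iRT scale independently.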

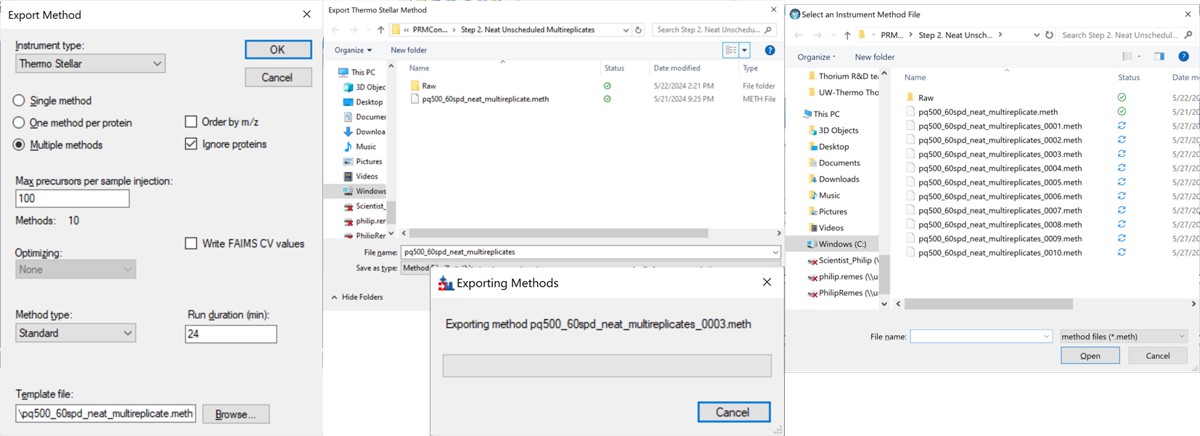

Skyline Method Export

The more convenient way to create the unscheduled replicates is to use the Skyline File/Export/Method functionality. In the Export Method dialog, select Instrument type Thermo Stellar. Select Multiple methods, with Max precursors per sample injection 100. This is a ballpark number that has given enough points per peak for identification purposes for neat standards for a variety of experiment lengths. Click the Browse button and choose the pq500_60spd_neat_multireplicate.meth file in the Step 2. Neat Unscheduled Multireplicates folder. Use a name like pq500_60spd_neat_multireplicates and press Save, and Skyline will present a progress dialog. When it finishes, 10 new methods will be created with suffix _0001 through _0010, as shown below.

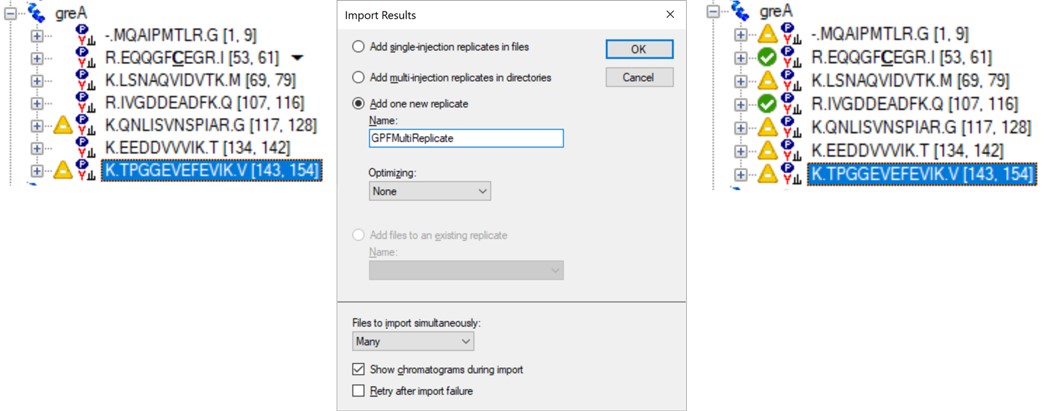

Loading Unscheduled PRM Data

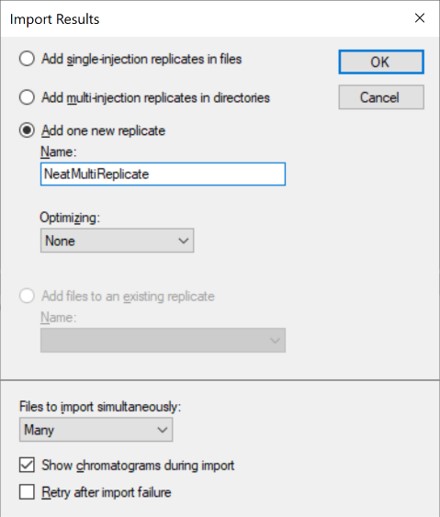

- Take the .sky file that we have created, Step 1. Setup Skyline Documents/pq500_60spd_neat_multireplicate.sky and use File / Save As to save a copy with the name Step 2. Neat Unscheduled Multireplicates/pq500_60spd_neat_multireplicate_results.sky. If you want to work along with the 100 SPD method as well, you can save another version of the file with the corresponding name.

- Use File / Import / Results and select Add one new replicate. Give it the name NeatMultiReplicate. Press Okay, and then select all the raw files in the folder Step 2. Neat Unscheduled Multireplicates\Raw\NeatUnscheduled60SPD and press Open. Skyline will load the results. When they are finished loading, Save the Skyline document. Do the same for the 100 SPD .raw files in their .sky file.

- Now we will inspect the results to see if any peaks have been chosen incorrectly. Use File / Save As to save the Skyline document as Step 2. Neat Unscheduled Multireplicates/pq500_60spd_neat_multireplicate_results_refined.sky (and one for the 100 SPD file).

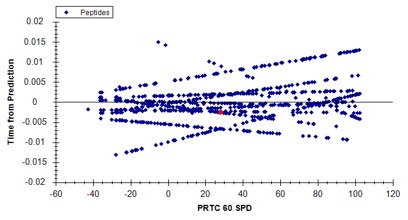

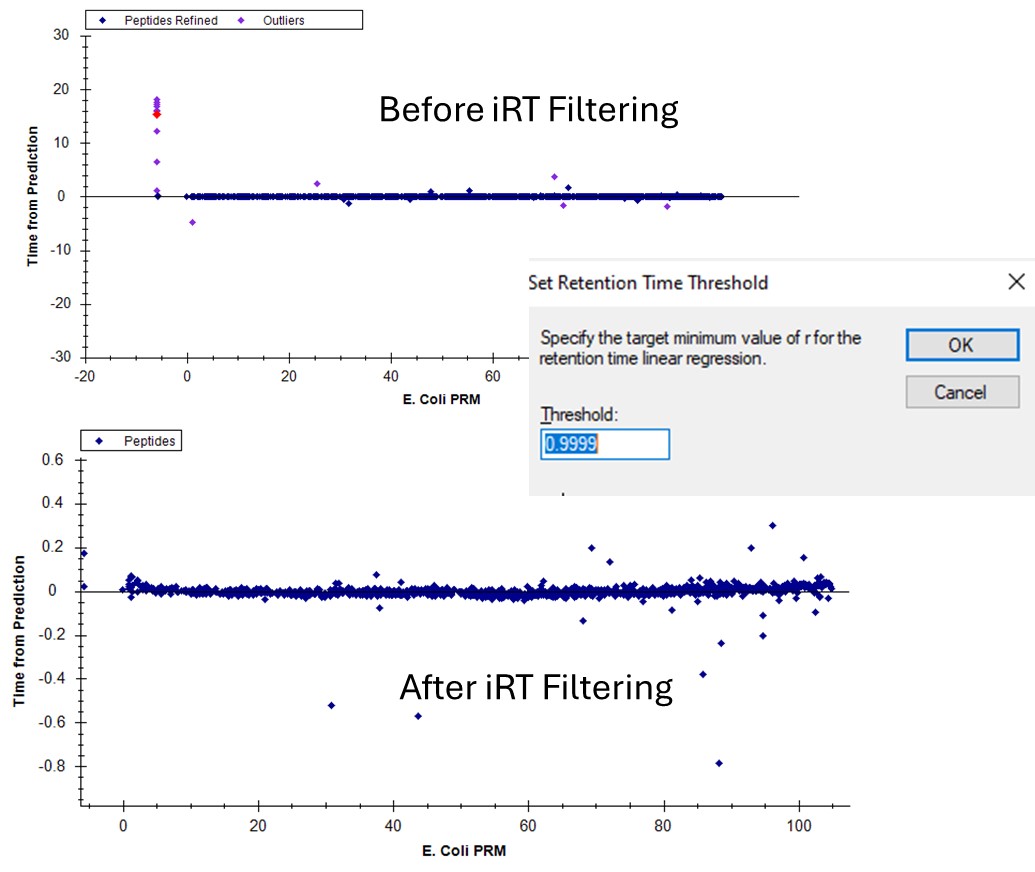

- Use View / Retention Times / Regression / Score to Run. Right click the plot and select Plot / Residuals. Right click the plot and make sure that Calculator is set to the calculator that we created, PRTC.

- Right click on the plot and select Set Threshold and enter 0.99. If the plot does not update to have some of the blue dots turn pink, then right click the plot and select Plot / Correlation, and then switch back to Plot / Residuals.

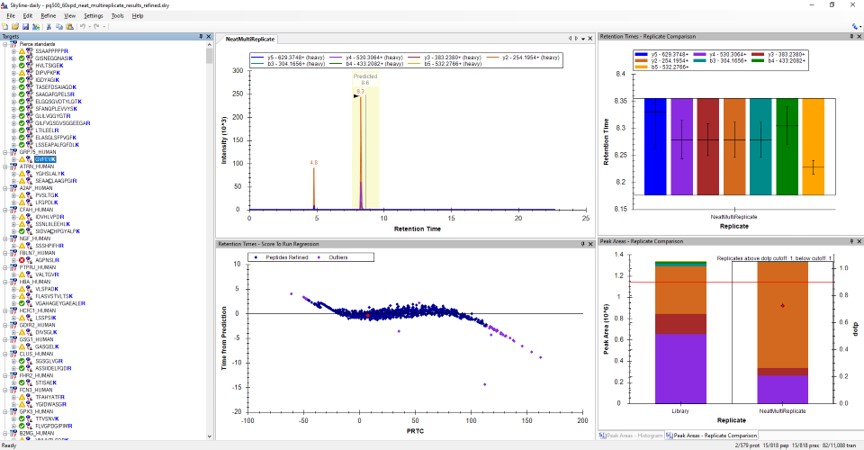

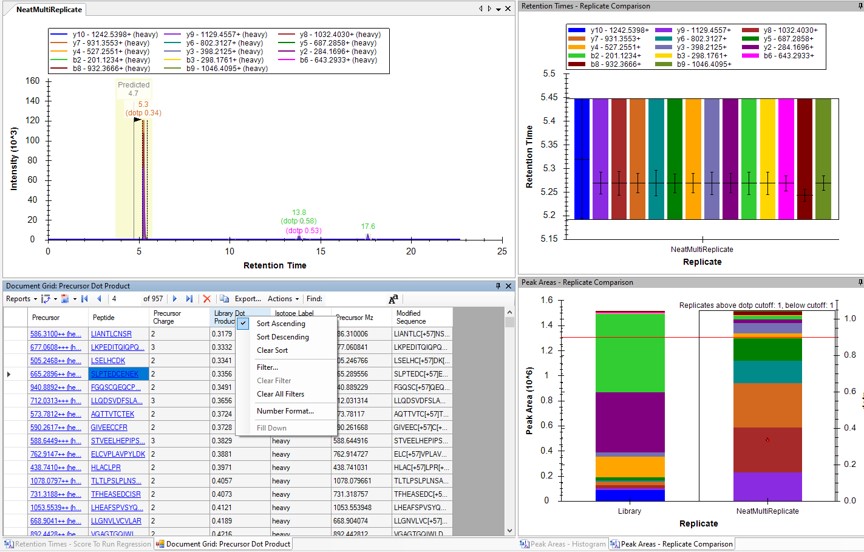

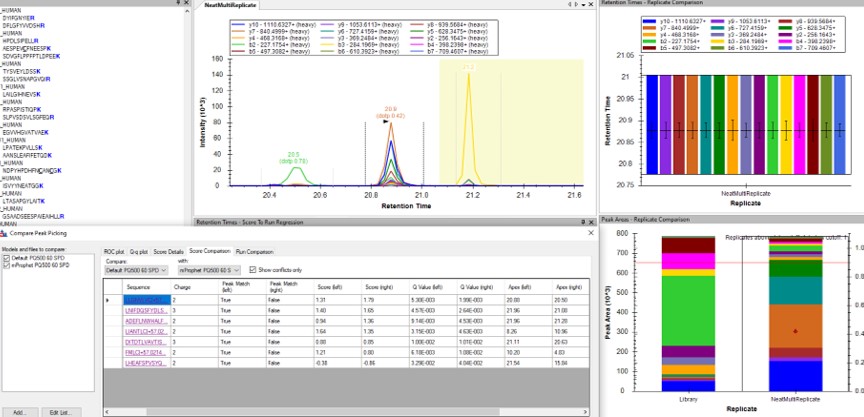

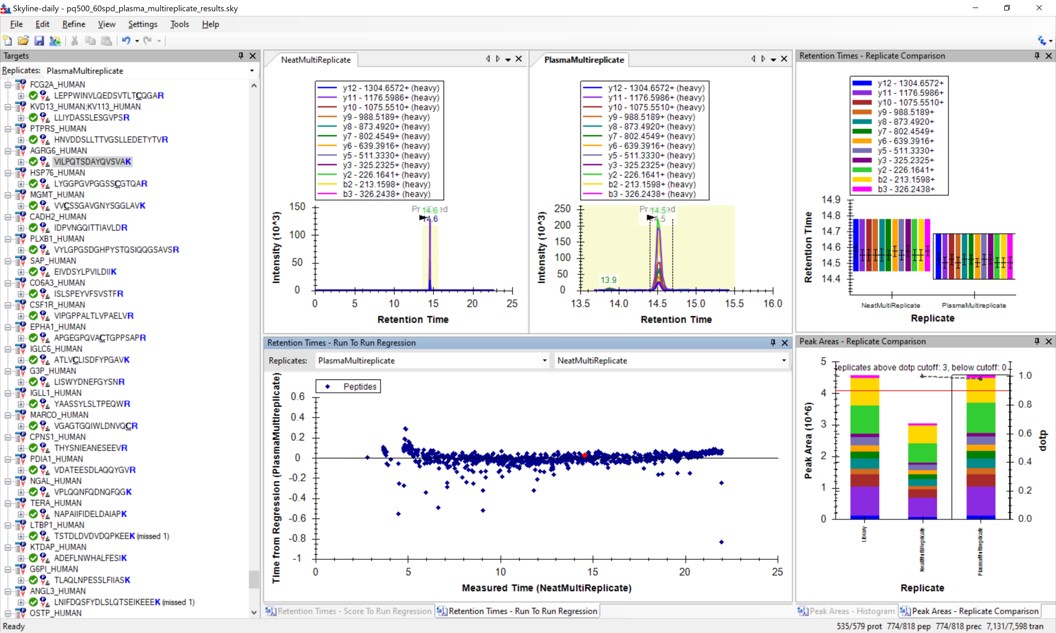

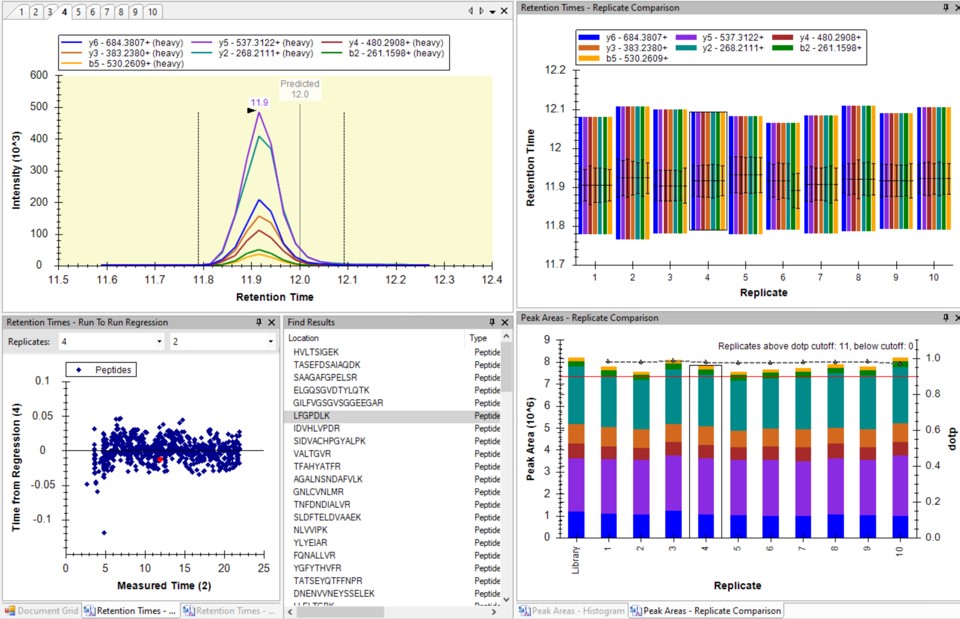

- Use View / Peak Areas / Replicates Comparison and set up the Skyline document something like in the figure below.

Reviewing the Unscheduled PRM Results

Using Score-to-Run RT Outliers

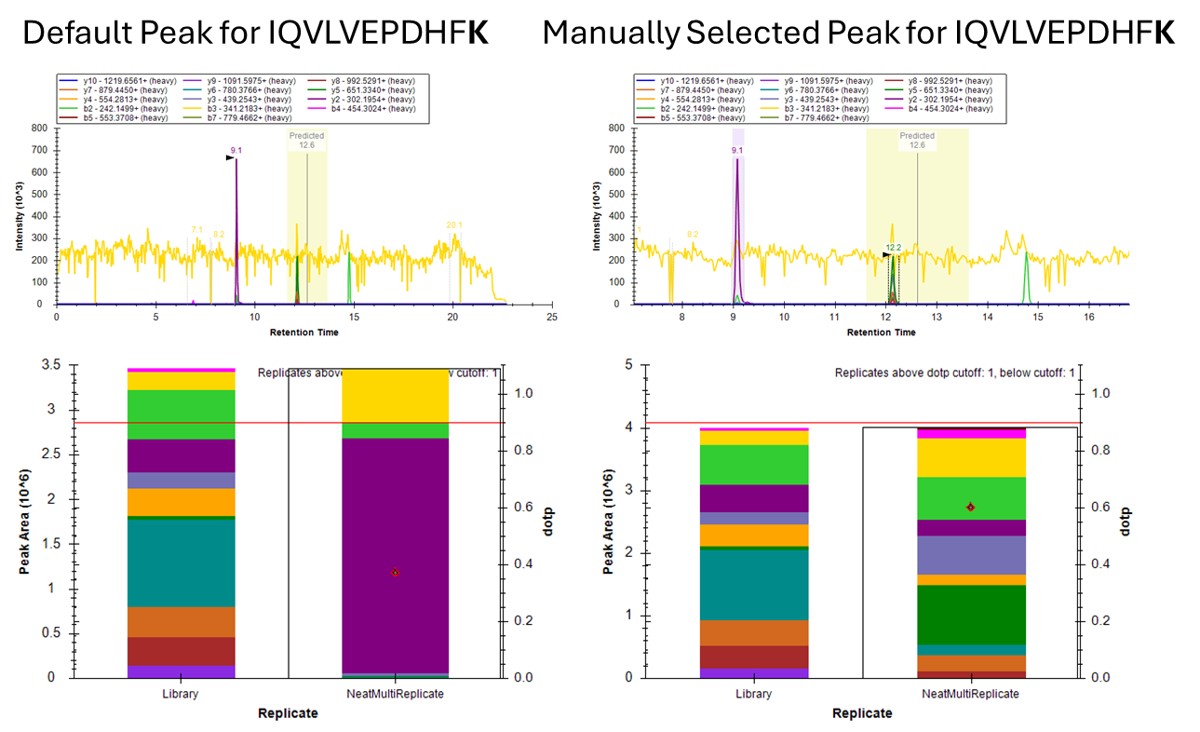

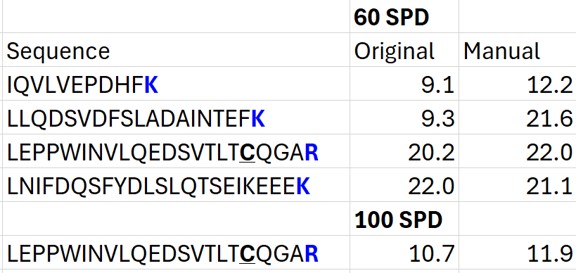

- The Residual Score-to-Run plot is very useful for flagging potential missed peaks. Here we want to click on any of the pink "outlier" dots and inspect them. For example, IQVLVEPDHFK was picked at 9.1 minutes, but the peak in the predicted window at 12.6 minutes has a better library dot product match. Also, based on the 100 SPD data, we think that LLQDSVDFSLADAINTEFK is probably the low intensity peak at 21.6 minutes and not the larger peak at 9.3 minutes that is far away from the predicted RT.

Using Dot Products

-

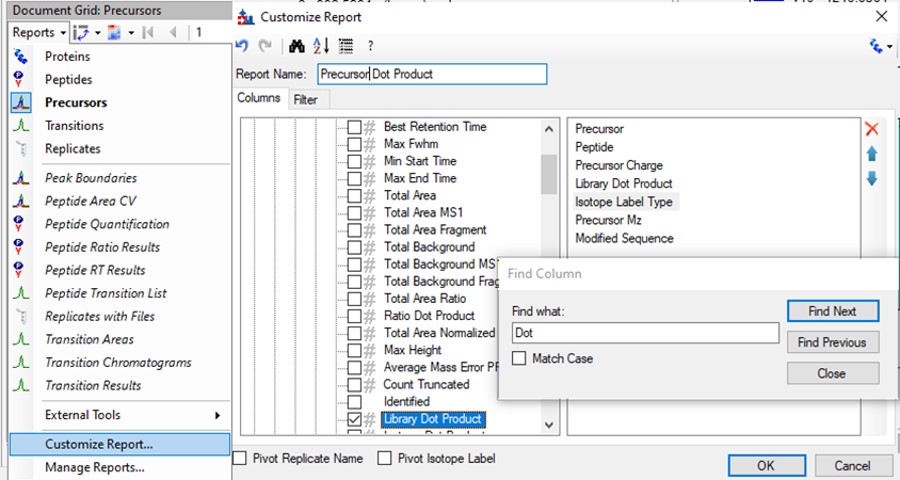

Another way to find picked peak issues like this is to view a Document grid report that has the dot product scores. Use View / Document Grid (or Alt + 3) to bring up the Document grid, and dock it in the same window as the Retention Time Score-to-Run.

- If you ever can't dock a window where you want it, dock the window in some place that Skyline lets you, and then you will be able to dock it where you originally wanted.

-

Click the Precursor Report, then Customize Report to bring up a dialog menu. Erase columns from the right hand side and then click the binoculars and type 'Dot', and press Find Next until you find Library Dot Product. Select this column to add it to the right hand side, and then press okay.

- Now right click on the Library Dot Product column and select Sort Ascending. You can click through the worst dot product scores to inspect them. Even the ones with poor dot product scores actually belong fairly unambiguously to a single precursor at the correct retention time.

Using Peak Picking Models

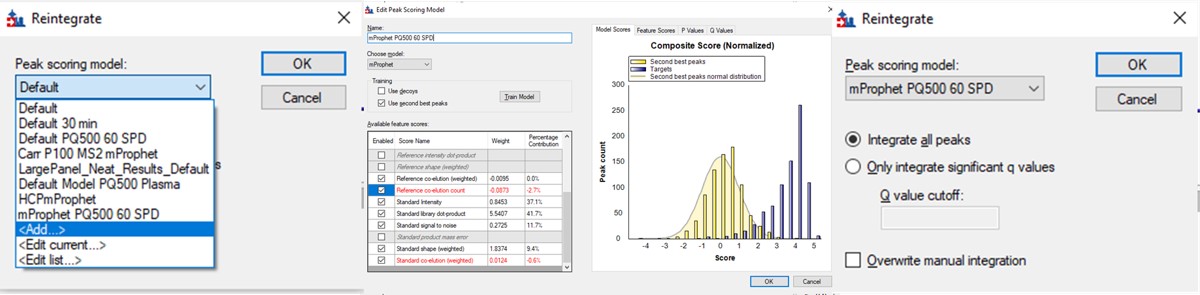

A final way that can be useful for inspecting this kind of result is to compare the results from the two Skyline peak picking models available at this time. Although in the present case there is not much use for this technique, we'll demonstrate it now.

- Select Refine / Reintegrate. In the Peak scoring model dropdown box, select Add. In the dialog that shows up, keep mProphet as the model, and select Training / Use second best peaks. Using decoys is the method used when you have DIA data and have added Decoy peptides to the document. For targeted methods, the second best peak for the peptide serves as the null distribution for training the peak model. Click Train Model. In the feature scores there are several scores that are not available because there are no light peptides in the document. Scroll down to see the enabled features. Several features are red because they decreased the classification accuracy. Unselect those features and press Train Model again, and click Okay to exit the Edit Peak Scoring Model dialog.

- In the Reintegrate dialog, Add another peak scoring model. This time select the Default model and press Train Model. Then press Okay, and press Okay again in the Reintegrate dialog. This should give us the original peaks selected when the data were imported.

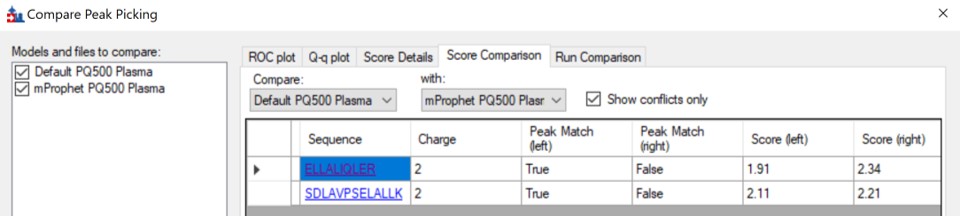

- Go to Refine / Compare Peak Scoring. Use the Add button to add the two peak models that we just made. Go to the Score Comparison tab, select the Default model for the Compare dropdown and the mProphet model for the "with" dropdown. Click the Show conflicts only button. Of the 8 conflicting peaks, the greatest ambiguity is probably the first one, LLGNVLVCVLAR. Here there is a very nice peptide peak at 20.9 minutes, but the one at 20.5 minutes, although it is further from the predicted RT, has a better dot product. We selected the 20.9 minute peak, but it is potentially misassigned. We made the same assignment in the 100 SPD method.

Summary of Changes made to Default Skyline Peak Picking

The figure below summarizes the manual changes we made to the Skyline default peak picking. Note that at the time that we first did the study, Skyline could connect to the Prosit server for library generation. By the time this tutorial was written, Skyline was using Koina for the in silico predictions. While the Prosit models are in theory supported, there was an issue with using them. Therefore there could be some small differences from our original results. Note that the most conservative approach would be to search the unscheduled PRM data against a PQ500 .fasta file with static R and K heavy modifications, and only select those peptides that passed some threshold FDR value.

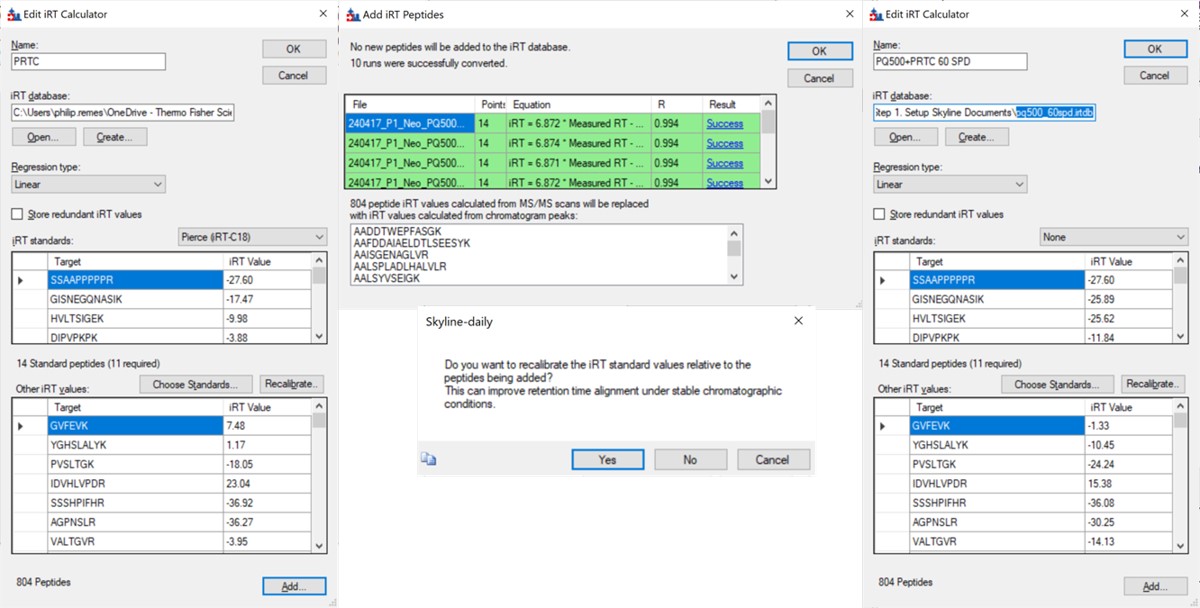

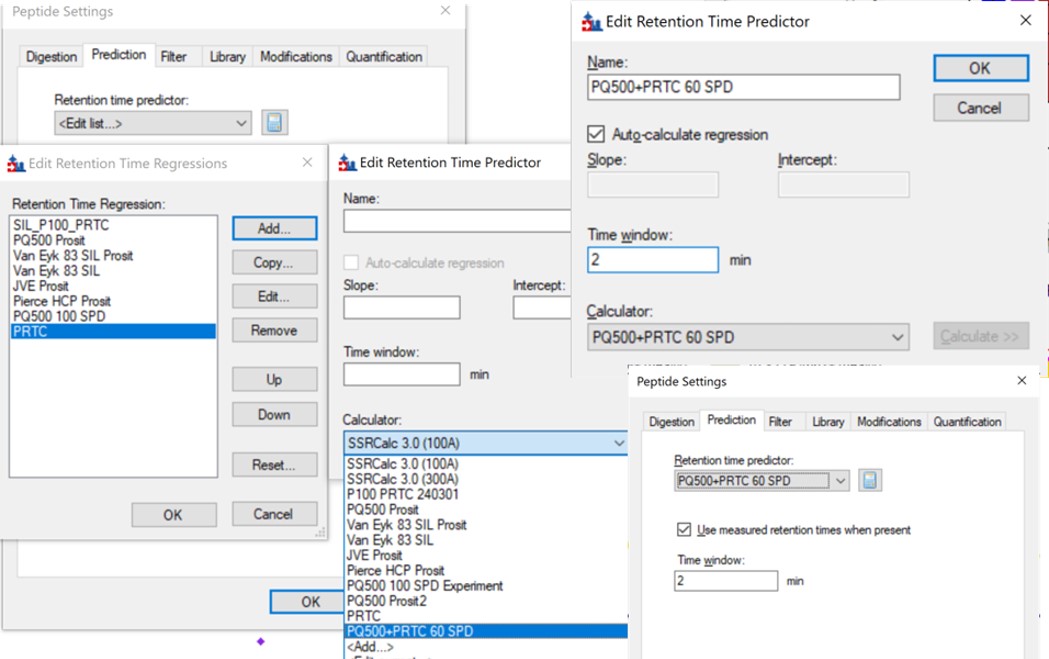

Saving a New, Empirical iRT Calculator

- Now we will update the iRT library so that future experiments can benefit from this curated peak picking. Use Settings / Peptide Settings / Prediction, and click the calculator button to Edit Current. In the dialog that opens, click Add in the bottom right corner and choose Add Results. An Add iRT Peptides dialog announces that 804 peptide iRT values will be replaced. Click Okay. Click Yes when asked if the iRT Standard values should be recalibrated. Now in the iRT database line, use the file name pq500_60spd.irtdb, give the calculator a new name, PQ500+PRTC 60 SPD, and press Okay to exit.

- We've created a new iRT calculator, but have to set it as the active one. Click the calculator icon and Edit List. Press the Add button and then click the dropdown menu, and the new calculator will be there, select that one. Set the Time window to 2 minutes, then press Okay. Select the PQ500+PRTC 60 SPD calculator in the Peptide Settings / Prediction dialog and press Okay to exit to main Skyline window.

- In the score-to-run plot, right click, select Calculator, and choose the PQ500+PRTC 60 SPD. The plot will update to show an interesting pattern where the errors are all very close to zero. We have now created a new calculator that will make it easier for Skyline to pick peaks next time. We did the exact same set of iRT steps for the 100 SPD Skyline file, because using the new 60 SPD calculator for the 100 SPD does not work perfectly. In the 100 SPD we think that probably the LEPPWINVLQEDSVTLTCQGAR is the peak at 11.9 minutes and not the one at 10.7 minutes.

Filtering Transitions with PRM Conductor

This was a neat sample, but we can filter out the transitions that we don't need at this point, with the understanding that when we spike into plasma we may have to refine the transitions even further.

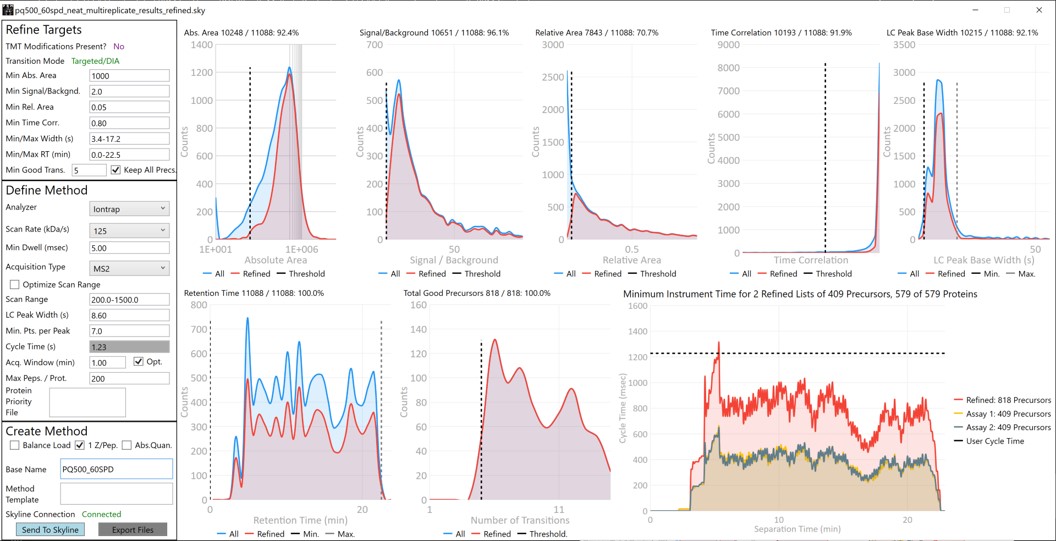

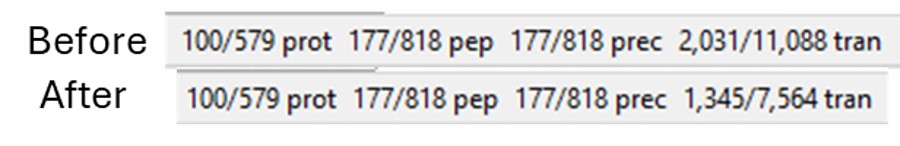

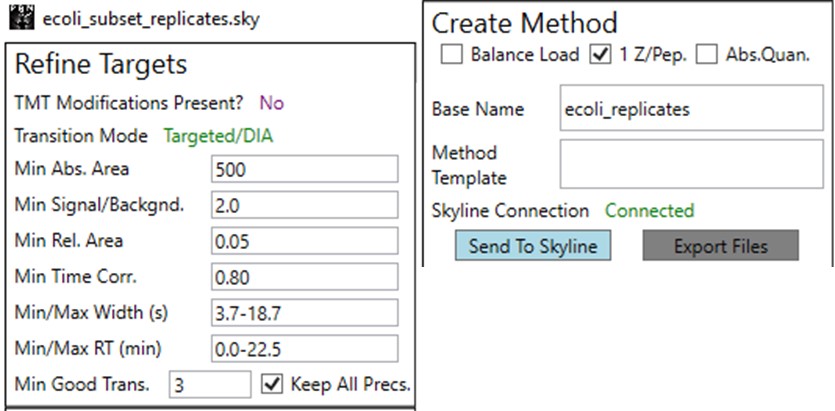

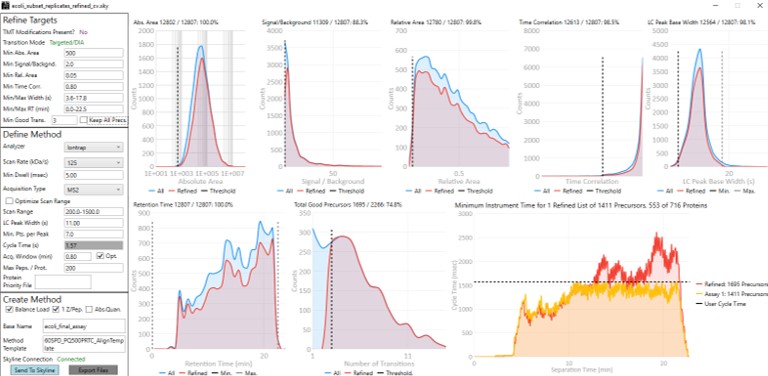

- Select Tools / Thermo / PRM Conductor. The tool opens up. We used the settings in the figure below, where importantly you set Min Good Trans to 5, press Enter, and also select the Keep All Precs box. Press the Send to Skyline button on the bottom left. Save the Skyline document and close the PRM Conductor.

- The number of transitions displayed in the bottom right hand of Skyline will have updated. With the settings we used, the number of transitions dropped from 11,088 to 7,564.

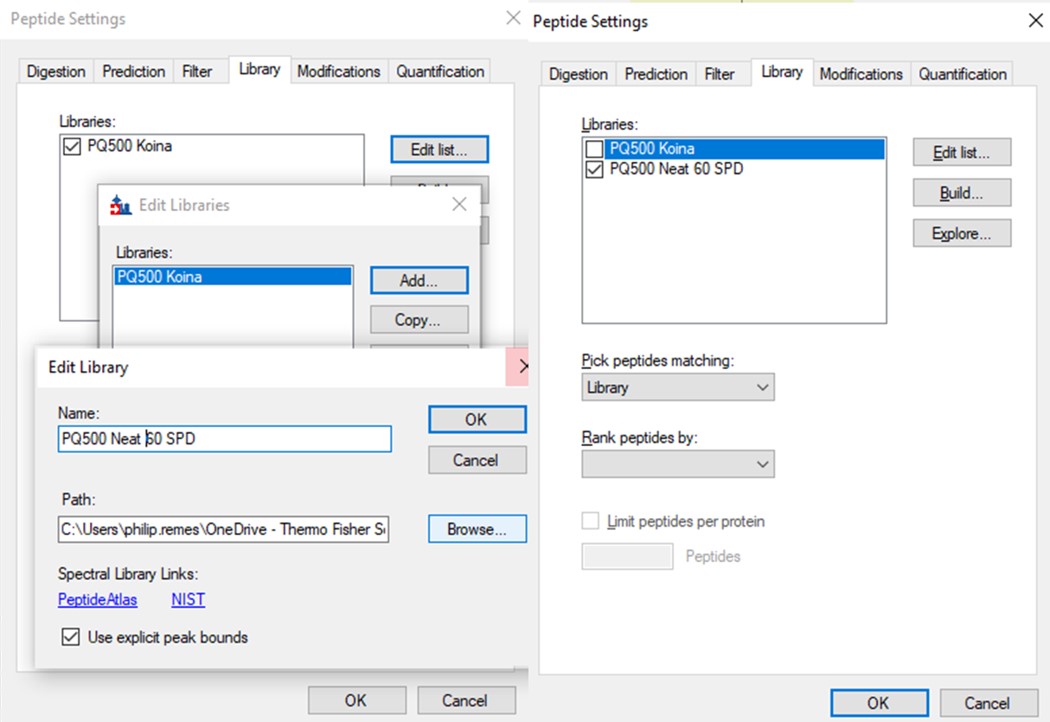

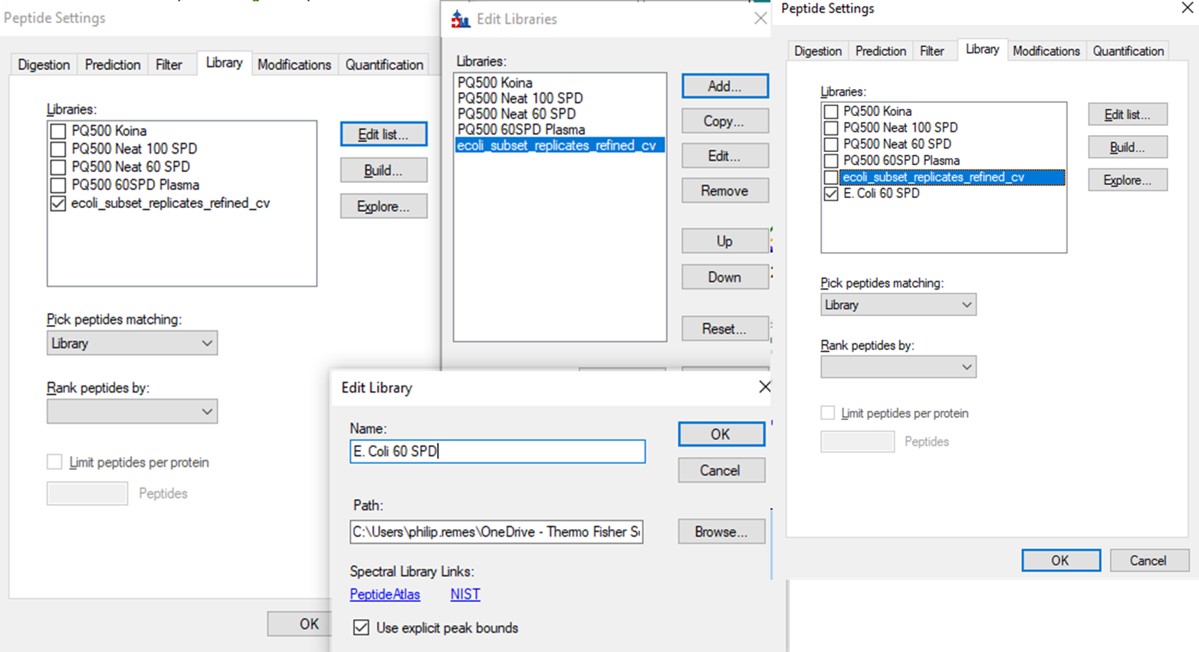

- Use File / Export / Spectral Library and save as Step 2. Neat Unscheduled Multireplicates/PQ500_Neat_60SPD_Refined. Use Settings / Peptide Settings / Library and select Edit List, then Add, and Browse for the file you just saved. Give the library the name PQ500 Neat 60 SPD. Press Okay, and in the Library tab, deselect the PQ500 Koina library and select the new library. Press Okay and save the Skyline document. Make a spectral library in the same way for the 100 SPD document. Close the PRM Conductor instances. We've reached the end of the second step, and are ready to acquire data in plasma.

Plasma Wide Window Scheduled

Scheduled PRM for Heavy PQ500 in Plasma with Wide Acquisition Windows

In this step we will create a wide-window PRM method to verify the RT locations of the PQ500 heavy peptides in plasma. The RTs of peptides can sometimes be much different when spiked into matrix compared to when analyzed neat. This is expected and likely due to the binding properties of the chromatography stationary phase, which depend on the concentration of analytes in the liquid phase in an equilibrium sometimes referred to as an isotherm. At the end of this section we will have a candidate final method that includes both heavy and light peptides, and that also includes Adaptive RT real-time chromatogram alignment.

Creating Wide Window Methods that Include Adaptive RT Acquisitions

- Use File / Save As on our files from the last step, pq500_60spd_neat_multireplicate_results_refined.sky and pq500_100spd_neat_multireplicate_results_refined.sky, and save them in the folder Step 3. Plasma Heavy-Only Wide Window as pq500_60spd_plasma_multireplicate_results.sky and pq500_100spd_plasma_multireplicate_results.sky.

- Use Tools / Thermo / PRM Conductor.

-

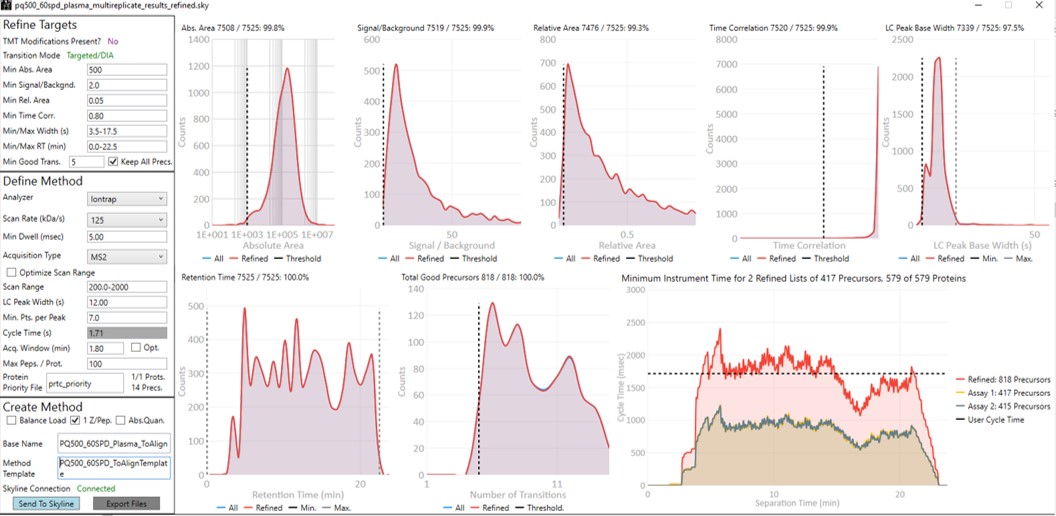

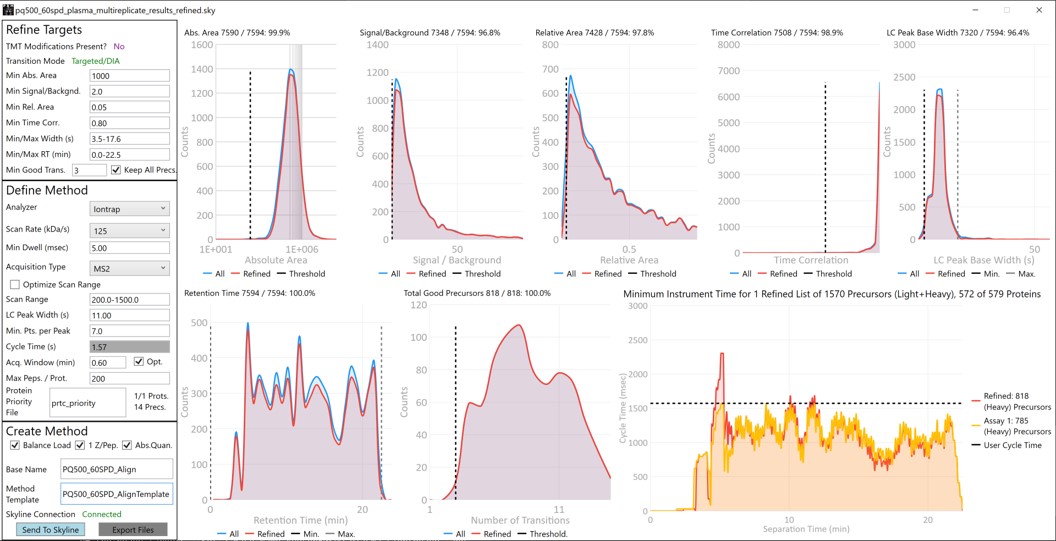

Update the settings as in the figure below. After changing any number value, be sure to press the Enter key on the keyboard. The prtc_priority.prot file is selected by double clicking the Protein Priority File text box. This is just a text file with the line “Pierce standards”, the protein name that Skyline gave to the iRT standards. The peptides from any proteins listed in this file (with Skyline's protein names, not accession numbers) are included in the assay, whether or not their transitions meet the requirements. If the Balance Load checkbox is not selected and there are multiple assays to export, each assay will contain the prioritized proteins, and Skyline will be able to use the iRT calculator for more robust peak picking.
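A protein priority file like the one described above can be created in any text editor, or with a few lines of Python. The sketch below is only an illustration: the helper name `write_priority_file` is hypothetical, and the one-name-per-line layout for more than one protein is an assumption, since the walkthrough only shows a file containing the single line “Pierce standards”.

```python
import tempfile
from pathlib import Path


def write_priority_file(path, protein_names):
    """Write Skyline protein names (not accession numbers), one per line.

    The multi-line layout is an assumption; the walkthrough only shows a
    file with a single protein name.
    """
    text = "\n".join(protein_names) + "\n"
    Path(path).write_text(text, encoding="utf-8")
    return text


# Example: write the file into a temporary folder and show its content.
with tempfile.TemporaryDirectory() as folder:
    target = Path(folder) / "prtc_priority.prot"
    print(write_priority_file(target, ["Pierce standards"]), end="")
```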

- Note that with the 1.8 minute acquisition window, the right-most plot in PRM Conductor tells us that the 818 precursors in the assay require up to almost 2500 milliseconds to be acquired. Because the Balance Load box is unchecked, they will be split into 2 assays. If Balance Load were checked, we would instead create a single assay containing only the precursors that can be acquired in less than the Cycle Time.
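The split into 2 assays can be checked with back-of-the-envelope arithmetic. The sketch below assumes that the Cycle Time is the LC peak width divided by the minimum points per peak, and it borrows the 60 SPD values of 11 seconds and 7 points that are used later in this walkthrough. The function names are hypothetical, and PRM Conductor's real scheduling is more detailed than a single ceiling division, so treat this only as a sanity check.

```python
import math


def cycle_time_ms(lc_peak_width_s, min_points_per_peak):
    """Time available per cycle so that each LC peak gets enough points."""
    return 1000.0 * lc_peak_width_s / min_points_per_peak


def assays_needed(peak_load_ms, cycle_ms):
    """Rough number of methods needed when the busiest point exceeds one cycle."""
    return max(1, math.ceil(peak_load_ms / cycle_ms))


cycle = cycle_time_ms(11, 7)           # assumed 60 SPD settings
print(round(cycle), "ms per cycle")
print(assays_needed(2500, cycle), "assays for a 2500 ms peak load")
```

With these assumed values the cycle is roughly 1.6 seconds, so a peak load near 2500 milliseconds does not fit in one method but does fit in two.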

- Enter a suitable Base Name like PQ500_60SPD_Plasma_ToAlign. This reflects the fact that we are including acquisitions to perform Adaptive RT, but we are not actually adjusting our scheduling windows in real time. Our neat standards were not suitable for performing alignment in the complex plasma matrix background.

-

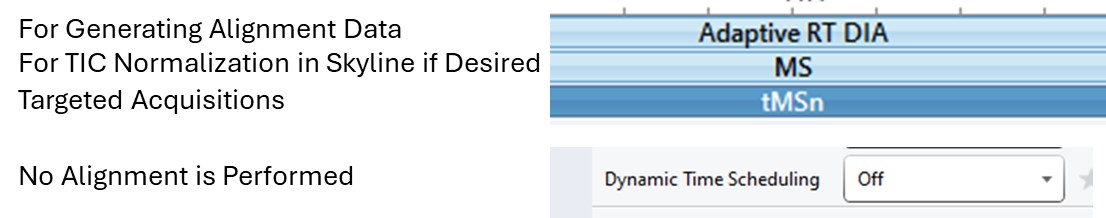

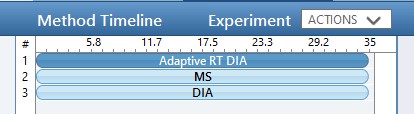

Double click the Method Template field and select the Step 3. Plasma Heavy-Only Wide Window/PQ500_60SPD_ToAlignTemplate.meth file. This file is standard targeted method for Stellar, with 3 experiments. The first is the Adaptive RT DIA experiment, which is being used to gather data for real-time alignment in future targeted methods. The second is a MS1 experiment, which isn't strictly needed, but enables the TIC Normalization feature in Skyline to be used, and can be helpful for diagnostic purposes. Removing it would save on computer disk space. The tMSn experiment can be simply the default tMSn experiment, where we ensure that Dynamic Time Scheduling is Off. If it were on, then PRM Conductor would try to embed alignment spectra from the current data set into the method. Here we leave it off.

-

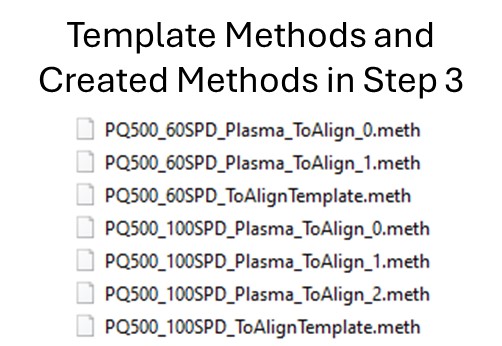

Press the Export Button. PRM Conductor will open a progress bar and do some work to export a .sky file, and two .meth files with the names PQ500_60SPD_Plasma_ToAlign_0.sky and PQ500_60SPD_Plasma_ToAlign_1.sky. The new .sky file has our new transition list imported and sets the Acquisition mode to PRM. This can be useful especially when discovery data is acquired in a DIA mode, however in this case we want to still compare our neat PQ500 data with the spiked plasma data we’ll be collecting, so we’ll continue using our file pq500_60spd_plasma_multireplicate_results.sky.

-

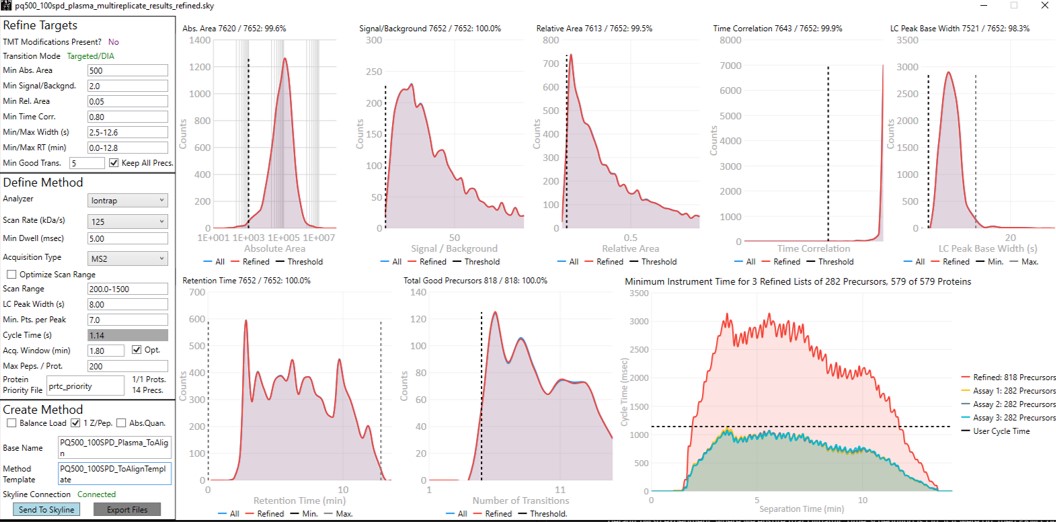

Do the same thing for the 100 SPD method. Here we can create 3 methods if the LC Peak Width is set to 8. Change the Base Name and Method Template to the 100 SPD versions and press Export Files.

- The created methods in this step are as in the figure below. We are now ready to acquire data using these methods. We did this by spiking in PQ500 at the Biognosys recommended concentration into 300 ng of digested plasma that we got from Pierce. Pierce will offer this as a product for sale in the near future (this is written mid-2024).

Reviewing the Wide Window, Plasma, Scheduled PRM Results

-

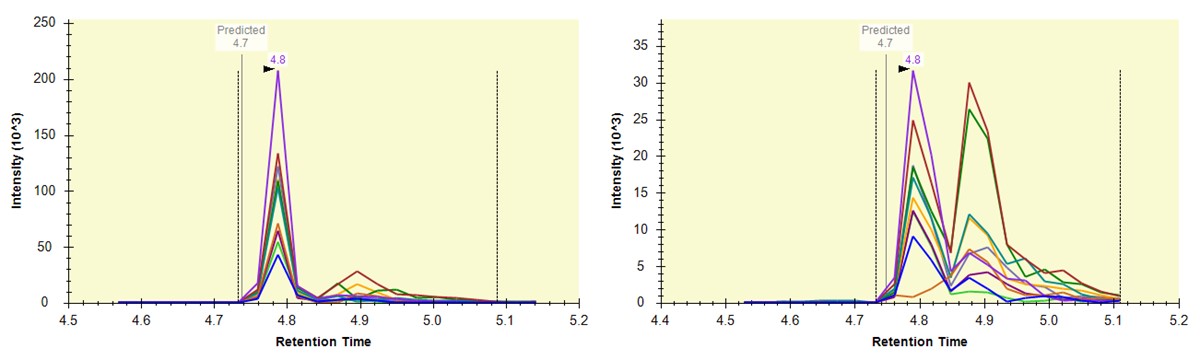

Open Settings / Transition Settings and set the Retention time filtering option to Use only scans within 1 minutes of MS/MS IDs. You want to be careful with this filtering because if the RT shifts were greater than +/- 1 minute, some data could be missing. You can always use one number and then change it, and use Edit/Manage Results, and select the replicate and Reimport, to use a wider or narrower filter. In this case the IDs are coming from the spectral library that we created in the previous step. Alternatively one could use Use only scans with X minutes of predicted RT option. We need some kind of RT filtering of this sort to help Skyline differentiate between the iRT peptide SSAAPPPPPR and the PQ500 peptide FQASVATPR, which have the same exact m/z.
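The m/z collision between SSAAPPPPPR and FQASVATPR can be verified from standard monoisotopic residue masses, and the effect of an RT filter can be illustrated with a simple window test. This is a minimal sketch, not Skyline's implementation: the function names are hypothetical, the retention times in the example are made up, and it is assumed that a C-terminal heavy label would shift both peptides by the same amount.

```python
# Monoisotopic residue masses (Da) for the residues found in the two peptides.
RESIDUE_MASS = {
    "A": 71.03711, "F": 147.06841, "P": 97.05276, "Q": 128.05858,
    "R": 156.10111, "S": 87.03203, "T": 101.04768, "V": 99.06841,
}
WATER = 18.01056
PROTON = 1.00728


def precursor_mz(sequence, charge, label_shift=0.0):
    """m/z of a peptide; label_shift is an optional heavy-label mass in Da."""
    neutral = sum(RESIDUE_MASS[aa] for aa in sequence) + WATER + label_shift
    return (neutral + charge * PROTON) / charge


def scans_near_id(scan_rts, id_rt, window_min):
    """Keep only scan times within +/- window_min minutes of an ID's RT."""
    return [rt for rt in scan_rts if abs(rt - id_rt) <= window_min]


irt = precursor_mz("SSAAPPPPPR", 2)
pq500 = precursor_mz("FQASVATPR", 2)
print(f"m/z difference: {abs(irt - pq500):.5f}")

# Hypothetical scan times (minutes) filtered around a hypothetical ID at 20.0.
print(scans_near_id([18.5, 19.8, 20.4, 21.5], id_rt=20.0, window_min=1.0))
```

Because the two sequences are isomers, the m/z values agree to within the rounding of the mass table, so only retention time can tell the precursors apart.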

- We acquired data for PQ500 spiked into 300 ng of plasma and put the resulting .raw files in the folder Step 3. Plasma Heavy-Only Wide Window\Raw. Load the results with File / Import Results / Add one replicate, use a name like PlasmaMultireplicate, and select the .raw files for the appropriate 60 or 100 SPD throughput. Skyline will load these data files as a single replicate.

- Use View / Arrange Graphs / Row so that we can view the Neat and the Plasma replicates at the same time. Right-click a chromatogram plot and use Auto Zoom X Axis / None, so that we are zoomed out as far as we can go.

-

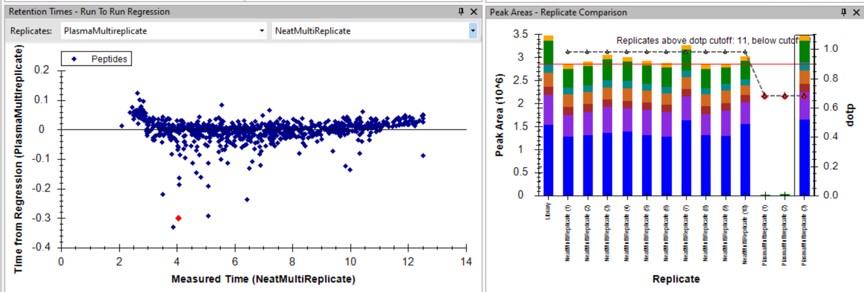

Use View / Retention Times / Regression / Run-to-Run. Your Skyline document should look something like the figure below.

- Use Save As to save a new version of this file in case we make any changes to the picked peaks. You can use pq500_60spd_plasma_multireplicate_results_refined.sky.

- We can follow the same steps as above. Make mProphet and Default peak picking models with Refine / Reintegrate. Then use Refine / Compare Peak Scoring, select the two new models with the Add button, then select the Score Comparison tab. Select the two models, and click Show conflicts only. We saw two discrepancies, and changed the ELLALIQLER peptide from 20.0 to 20.2 minutes, which had a higher dot product and better predicted RT. We kept the default peak for the other peptide.

- Click on the various outliers and make sure that the peak area plots show a good correspondence of the transitions, with a high dot product.

- Use a report with the Library Dot Product sorted from Low-to-High and investigate the worst cases. Even the lowest dot product cases look okay to us.
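If the report is exported to CSV, the same low-to-high ranking can be reproduced outside Skyline. The sketch below makes several assumptions: the column headers `Peptide` and `Library Dot Product` depend on how the report was defined, missing values are assumed to be exported as `#N/A`, and the dot product values in the example are invented for illustration.

```python
import csv
import io


def worst_dot_products(report_csv_text, n=10, column="Library Dot Product"):
    """Return the n rows with the lowest dot product, lowest first."""
    rows = list(csv.DictReader(io.StringIO(report_csv_text)))
    # Skip rows without a usable score (assumed export markers).
    scored = [r for r in rows if r[column] not in ("", "#N/A")]
    scored.sort(key=lambda r: float(r[column]))
    return scored[:n]


# Invented example values, only to show the call.
example = """Peptide,Library Dot Product
LFGPDLK,0.98
ELLALIQLER,0.91
FQASVATPR,#N/A
ANHEEVLAAGK,0.84
"""
for row in worst_dot_products(example, n=2):
    print(row["Peptide"], row["Library Dot Product"])
```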

- For the 100 SPD data, the Plasma-to-Neat retention times show a pattern similar to the 60 SPD data. There is one case, the FQASVATPR peptide, that has exactly the same m/z as the SSAAPPPPPR iRT peptide and elutes at a similar RT. Reducing the Settings / Transition Settings / RT filtering time to +/- 0.5 minutes can separate these two peptides. We kept all the Skyline picked LC peaks for the 100 SPD document, and saved a new file pq500_100spd_plasma_multireplicate_results_refined.sky.

Creating a Final Method

- Remove the NeatMultiReplicate using Edit / Manage Results. It's easy to forget to do this. We don’t want PRM Conductor to consider the neat peaks, which are already very clean. Save the Skyline file again.

-

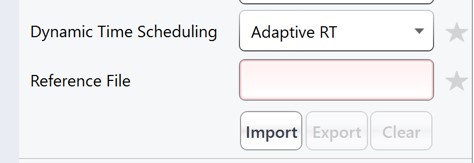

Launch PRM Conductor to clean up interferences and create a final method. Set Min. Good Trans. 5 and check Keep All Precs. Set Min Dwell 5 msec. Set LC Peak Width 11, Min. Pts. Per Peak 7, Acquisition Window 0.6 minutes, and check the Opt. box. This option increases the acquisition windows slightly, especially at the start of the experiment, without going over the user's Cycle Time. Select the prtc_priority.prot file, which in in this case just makes sure that those peptides can't get filtered. Check the Balance Load, 1 Z/prec., and Abs. Quan boxes. This last option instructs the Export command to include light targets for each of the heavy targets. Set a Base Name PQ500_60SPD_Align, and select the PQ500_60SPD_AlignTemplate.meth.

-

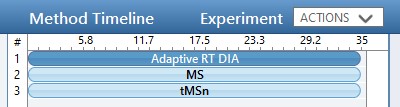

This template method is the same as the ToAlign version, only the Dynamic Time Scheduling is set to Adaptive RT. Now when PRM Conductor exports a method, it will compress the qualifying alignment acquisitions in the data and embed them into the created method.

- We have a small issue here in that there are more refined targets (red trace) than we can target. We have to trick PRM Conductor and set the LC Peak Width to 20 so that all targets are exported, then in the created method file change the LC Peak Width back to 11 and the points per peak to 7. In the future we'll allow the user to just export an "invalid" method.

- Press Export Files to create the new instrument method.

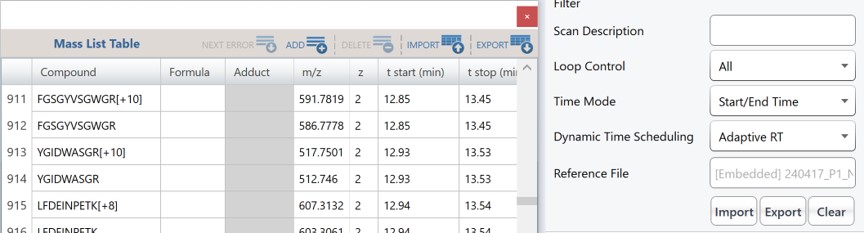

- Open the PQ500_60SPD_Align.meth file, change the LC Peak width from 20 to 11, and the Points per Peak to 7. Note that the Adaptive RT Reference file has a file name that starts with Embedded, and the Mass List table has entries with heavy peptides having names ending in [+10] or [+8] followed by the corresponding light peptides.
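The relationship between each heavy entry and the light entry that follows it in the Mass List is a fixed mass shift divided by the charge. The sketch below assumes a single labeled C-terminal residue and the usual shifts for 13C6 15N2 lysine (+8.014199 Da) and 13C6 15N4 arginine (+10.008269 Da), which is consistent with the [+8] and [+10] names but is not stated explicitly in this walkthrough. The function name is hypothetical.

```python
# Assumed heavy-label mass shifts (Da) for a labeled C-terminal residue.
HEAVY_SHIFT = {"K": 8.014199, "R": 10.008269}


def light_mz(heavy_mz, charge, c_term_residue):
    """m/z of the light precursor that pairs with a heavy precursor."""
    return heavy_mz - HEAVY_SHIFT[c_term_residue] / charge


# Example: a doubly charged heavy precursor ending in R[+10].
print(f"{light_mz(493.76829, 2, 'R'):.4f}")
```

A quick check like this can help confirm that the light rows added by the Abs. Quan option line up with their heavy partners.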

- Use the Send to Skyline button to filter the remaining few poor transitions from these targets, and save the Skyline document. Export a spectral library like we did before, giving it a name like PQ500_60SPD_Plasma. Configure this library in Settings / Peptide Settings / Library. This is the end of step 3.

-

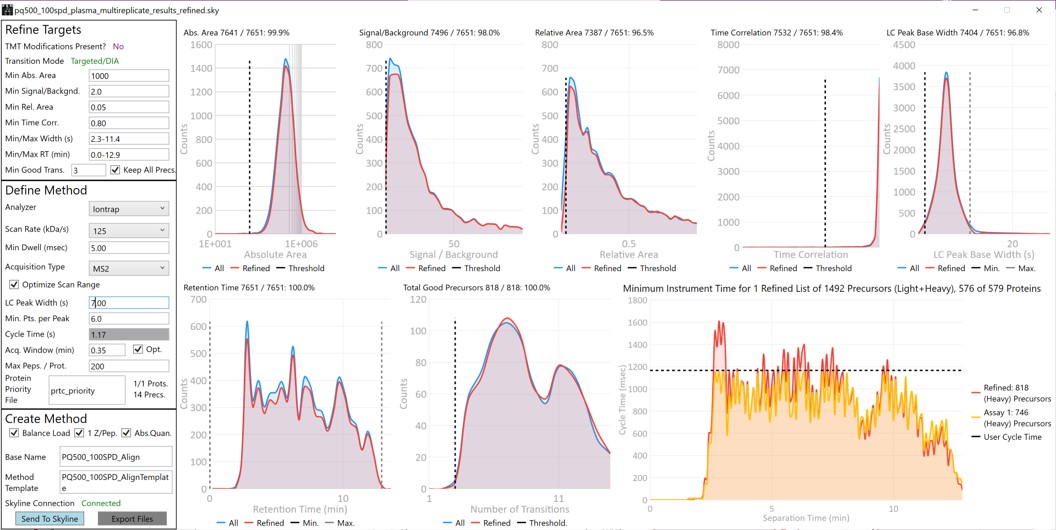

For the 100 SPD case, from the pq500_100spd_plasma_multireplicate_results_refined.sky file, Launch PRM Conductor. Check the Optimize Scan Range box. This will produce targets with customized scan ranges for each target, significantly increasing the acquisition speed, at the cost of some injection time and sensitivity. Set an appropriate Base Name and select the PQ500_100SPD_AlignTemplate.meth for the Method Template. Use the same trick as for the 60 SPD, setting the LC peak width to 20 seconds and Export the method. Then open the method that is created and change the LC peak width back to 7 with 6 points per peak.

- Press the Send to Skyline button. Export the spectral library and configure it in the Peptide Settings / Library tab. Save the pq500_100spd_plasma_multireplicate_results_refined.sky.

- We now have candidate final methods created for 60 SPD and 100 SPD, respectively PQ500_60SPD_Align.meth and PQ500_100SPD_Align.meth. We are ready to move to the next step and validate the assay.

Plasma Narrow Windows Scheduled

Scheduled PRM for Light-Heavy PQ500 in Plasma with Narrow Acquisition Windows

Analysis of the Heavy Peptides

In step 3 we created two candidate final methods for the 60 and 100 SPD assays. Take the files pq500_60spd_plasma_multireplicate_results_refined.sky and pq500_100spd_plasma_multireplicate_results_refined.sky, and resave them in the folder Step 4. Plasma Light-Heavy Narrow Window, with names like pq500_60spd_plasma_final_replicates.sky and pq500_100spd_plasma_final_replicates.sky. In this step we’ll analyze the results of the light/heavy methods created in Step 3. Of particular interest will be the histogram of coefficient of variation (CV) values for the peak areas.

- Use File / Import Results / Add single-injection replicates in files and press Okay. Select the 10 files in Step 4. Plasma Light-Heavy Narrow Window\Raw\60SPDReplicates and press Open. Remove the common prefix and press Okay to load the results. Remove the PlasmaMultiReplicate with Edit / Manage Results and save the document.

-

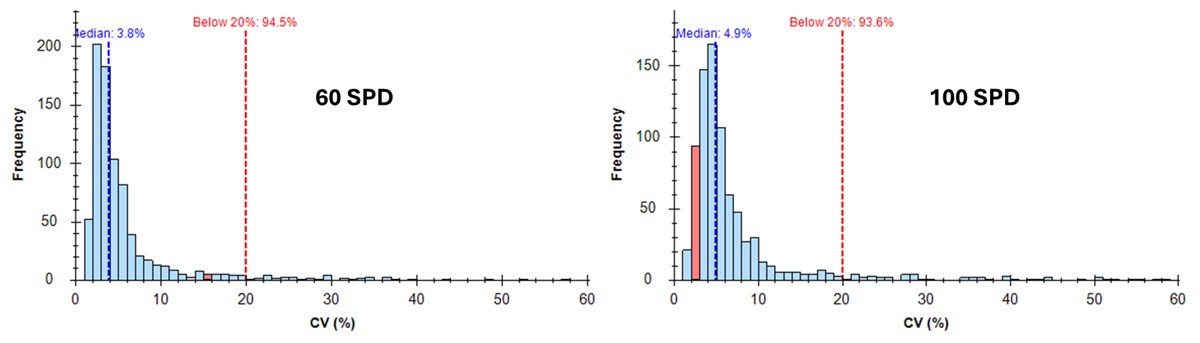

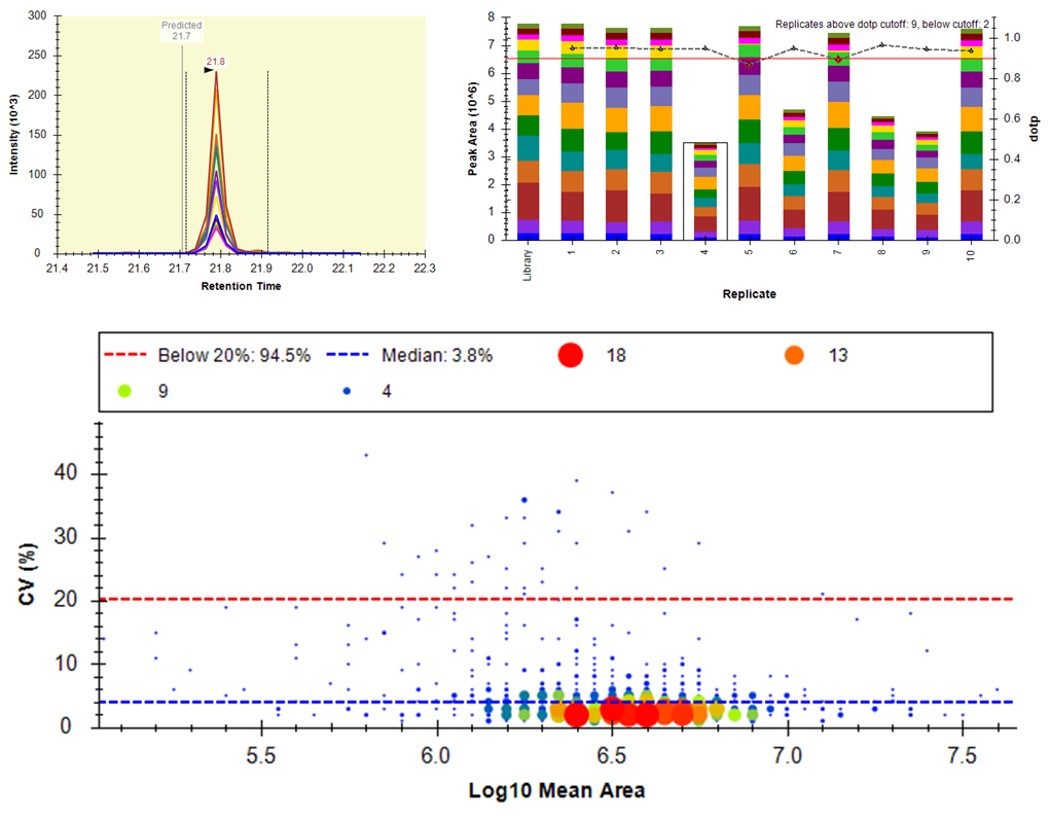

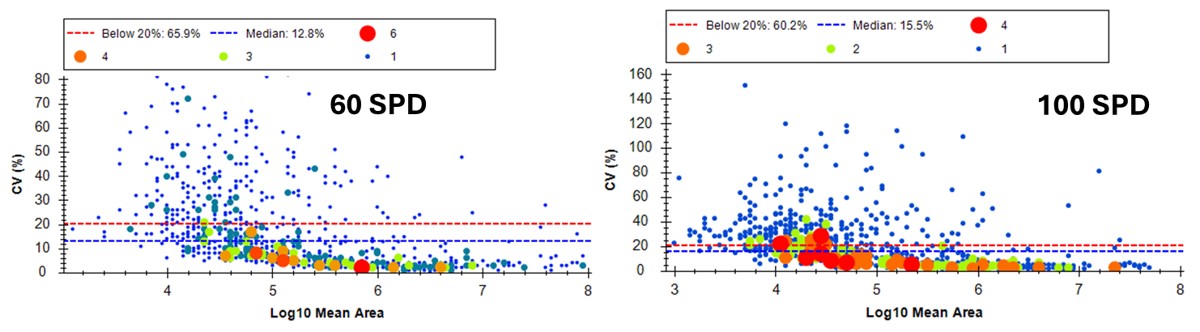

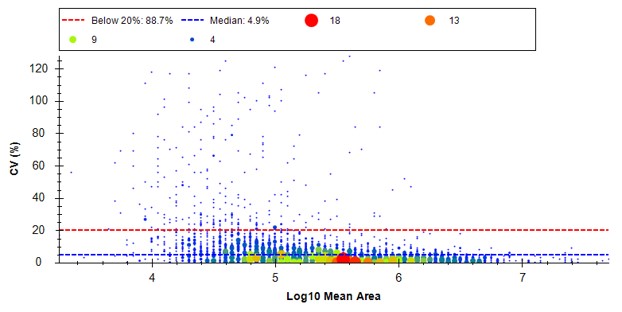

Select View / Peak Areas / CV Histogram. The CV histograms have ~94% of the targets with CV < 20%, with medians of 3.8 and 4.9%, which are excellent.

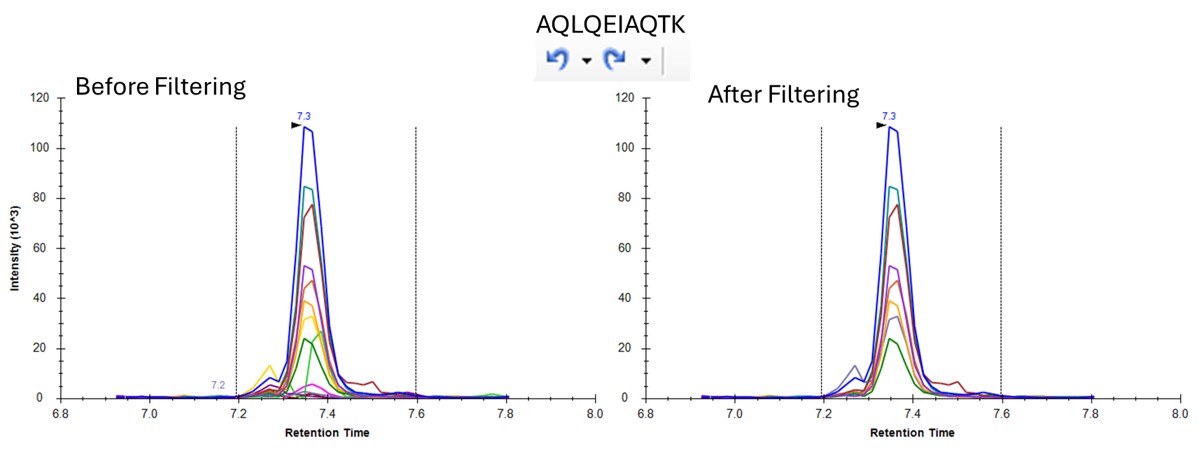

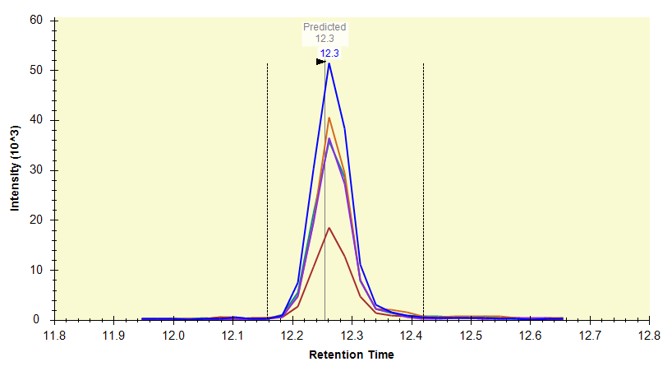

You can click on the histogram, which will open a Find Results window with some of the peptides that are close in CV to the value that was pressed. Double clicking any peptide sequence in the Find Results table will make that peptide active, whereupon one can check the peak shape, peak area, and retention time variations for the 8 replicates. Many/most peptides have results like LFGPDLK below.

- Another interesting plot is the 2D CV Histogram, found under the View / Peak Areas menu. Here we see that there are 45 targets with CV > 20%. Clicking on a dot makes the peptide in question active. An example peptide with > 20% CV, SLADELALVDVLEDK, is shown below. It has a very skinny peak shape and elutes during the column wash portion of the run. Our analyses show that peptides very early and late in the assays have a high probability of having poor CV. One way to filter these peptides out is based on their narrow peak shape. PRM Conductor can set a minimum LC peak width bound to do this.

- Some peptides have bad CVs because they have an interference that varies from one replicate to another, like ANHEEVLAAGK below.

- One could simply filter all the peptides with a CV greater than a threshold from the document, using Refine / Advanced / Consistency and setting 20% in the CV cutoff box. Or one could try using MS3 acquisition in PRM Conductor to save those peptides with poor CVs.
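The summary numbers read off the CV histogram (the median CV and the fraction of targets under a 20% cutoff) can also be computed from exported peak areas. This is a minimal sketch with invented areas. It assumes the sample standard deviation, which may differ from what Skyline uses internally, and the function names are hypothetical.

```python
import statistics


def percent_cv(areas):
    """Coefficient of variation of replicate peak areas, in percent."""
    return 100.0 * statistics.stdev(areas) / statistics.mean(areas)


def summarize_cvs(areas_by_target, cutoff=20.0):
    """Median CV, fraction of targets below the cutoff, and the failures."""
    cvs = {target: percent_cv(a) for target, a in areas_by_target.items()}
    failing = sorted(t for t, cv in cvs.items() if cv >= cutoff)
    return {
        "median_cv": statistics.median(cvs.values()),
        "fraction_below_cutoff": 1.0 - len(failing) / len(cvs),
        "failing": failing,
    }


# Invented replicate areas for two peptides named in this walkthrough.
example_areas = {
    "LFGPDLK": [100.0, 102.0, 98.0, 101.0],
    "ANHEEVLAAGK": [100.0, 160.0, 60.0, 120.0],
}
print(summarize_cvs(example_areas))
```

The `failing` list corresponds to the targets that a 20% CV cutoff in Refine / Advanced / Consistency would remove.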

Analysis of Both Light and Heavy Peptides

- Now we will add the light precursors, which were measured but are not currently in the Skyline document. Save the documents, then use Save As to create new copies named pq500_60spd_plasma_final_lightheavy_replicates.sky and pq500_100spd_plasma_final_lightheavy_replicates.sky.

- Use Refine / Advanced, and select the Add box. The Remove label type combo box title changes to Add label type. Select light and press Okay to close the Refine dialog. Each peptide will now have its light precursor added. Use Edit / Manage Results, select all the replicates and press the Reimport button.

- Use View / Peak Areas / 2D CV Histogram, and set Normalized to Heavy. The PQ500 standards were designed to look for a set of proteins of interest, not all of which are expressed in normal plasma. For this reason many of the Light / Heavy area ratios are low and have a high coefficient of variation.

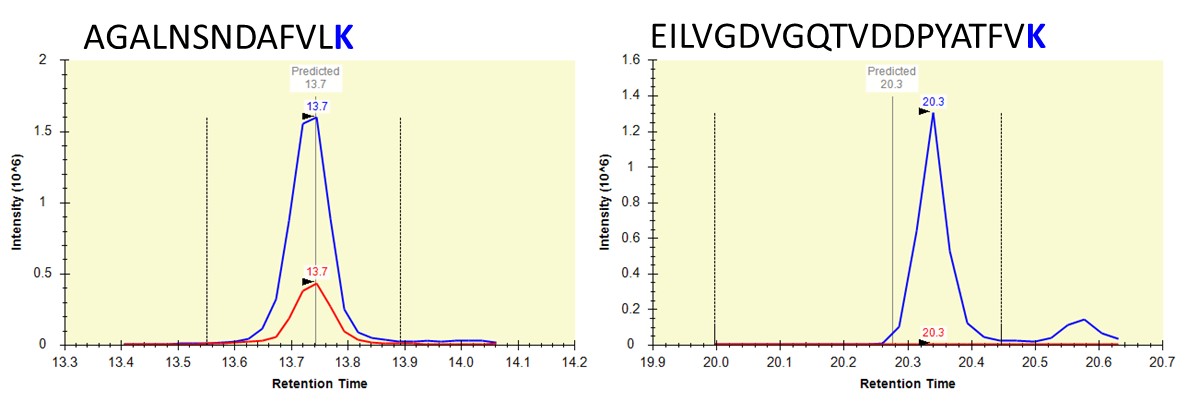

- Some peptides, like AGALNSNDAFVLK have significant endogenous peptide, and therefore have very good CV. Other peptides like EILVGDVGQTVDDPYATFVK have no observable endogenous peptide, and the area ratio therefore has a much higher CV. This general trend is observed in the CV histogram above.
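The trend described above follows directly from how a light/heavy ratio behaves when the light signal is mostly noise. The sketch below uses invented areas for two hypothetical cases, one with a strong endogenous signal and one without, and the function name is an assumption rather than anything from Skyline.

```python
import statistics


def ratio_to_heavy_cv(light_areas, heavy_areas):
    """Percent CV of the per-replicate light/heavy area ratio."""
    ratios = [light / heavy for light, heavy in zip(light_areas, heavy_areas)]
    return 100.0 * statistics.stdev(ratios) / statistics.mean(ratios)


heavy = [10000.0, 10300.0, 9900.0]       # invented heavy areas
endogenous = [5000.0, 5200.0, 4900.0]    # light tracks heavy closely
noise_only = [12.0, 40.0, 5.0]           # no real endogenous peptide

print(f"strong endogenous: {ratio_to_heavy_cv(endogenous, heavy):.1f}% CV")
print(f"no endogenous:     {ratio_to_heavy_cv(noise_only, heavy):.1f}% CV")
```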

- This is the end of Step 4. We've demonstrated how to analyze replicate data for absolute quantitation with light and heavy peptides. A next step that some users will want to perform is a dilution curve. For absolute quantitation this takes two forms.

- Heavy Dilution: Constant endogenous (light) peptide amount, varying heavy spike-in concentration

- Light Dilution: Constant heavy spike-in concentration, varying endogenous sample.

- The Light Dilution is a little easier to perform. With Settings / Peptide Settings / Modifications / Internal standard type set to heavy, Skyline uses the integration boundaries of the heavy peptides to integrate the light signals and determine whether the light/heavy ratios are sufficient for quantitation.

- The Heavy Dilution is difficult, because eventually Skyline can't find the heavy peptide signal, and doesn't keep a constant integration boundary. Sometimes Skyline will jump over to the next biggest LC peak and ruin the dilution curve. We have sometimes used a script to set constant integration boundaries and solve this issue.
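The idea of a constant integration boundary can be illustrated with a trapezoidal area over a fixed time window. This is not the script mentioned above, only a minimal sketch of the concept: the function name and the chromatogram values are hypothetical, and no background subtraction is applied.

```python
def integrate_fixed_window(times, intensities, start, end):
    """Trapezoidal area of the chromatogram points lying within [start, end]."""
    points = [(t, i) for t, i in zip(times, intensities) if start <= t <= end]
    return sum(
        (t1 - t0) * (i0 + i1) / 2.0
        for (t0, i0), (t1, i1) in zip(points, points[1:])
    )


# Invented chromatogram: the same boundaries are reused for every dilution
# level, so the area cannot jump to a neighboring peak.
times = [0.0, 1.0, 2.0, 3.0, 4.0]
intensities = [0.0, 10.0, 20.0, 10.0, 0.0]
print(integrate_fixed_window(times, intensities, start=1.0, end=3.0))
```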

- Calculating LOQs and LODs for large scale assays is still a little difficult, and we have used Python scripts to do this. Skyline is also working on making improvements, and there will be updates in the future. We are submitting a paper soon that will have links to these scripts, for the intrepid who might be interested in exploring them.

Label Free - E. Coli

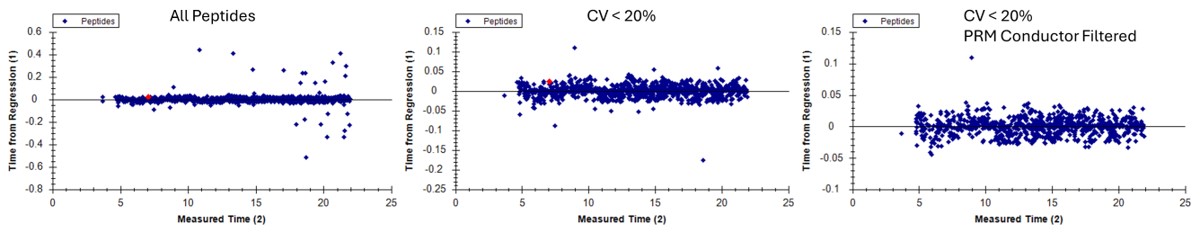

E. coli Assay Development Overview

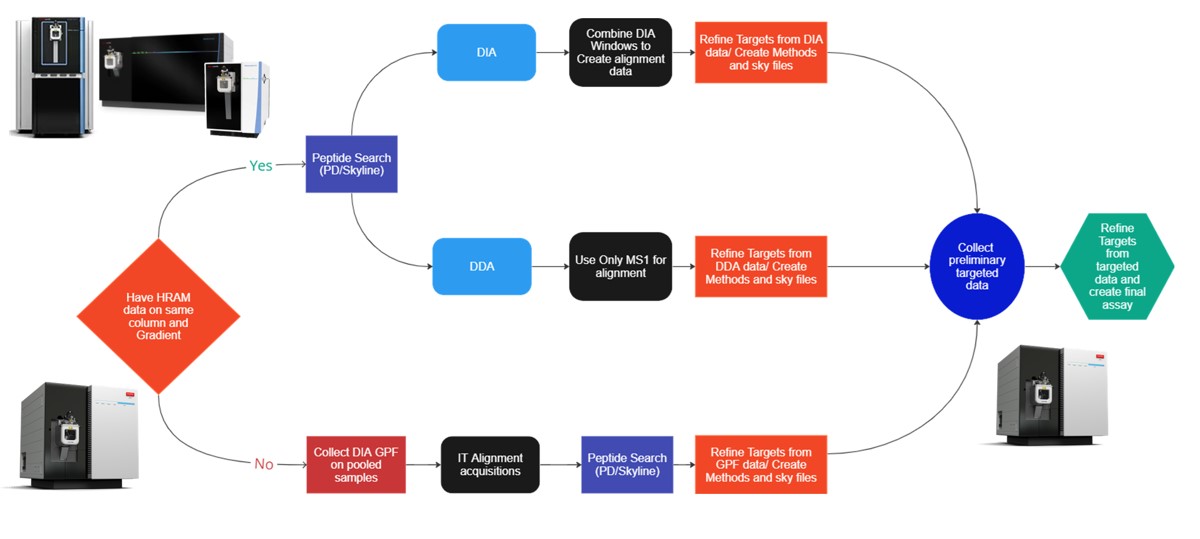

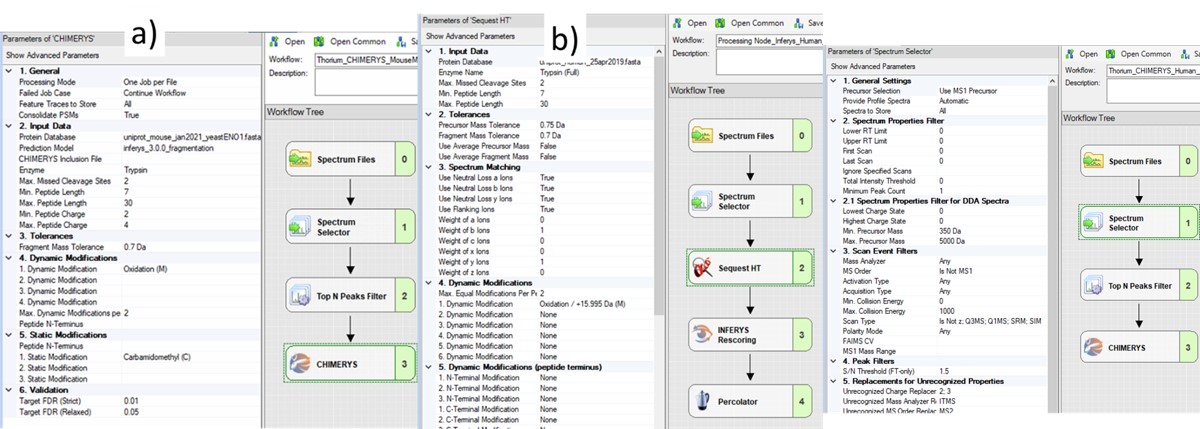

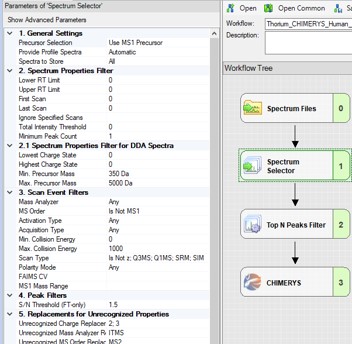

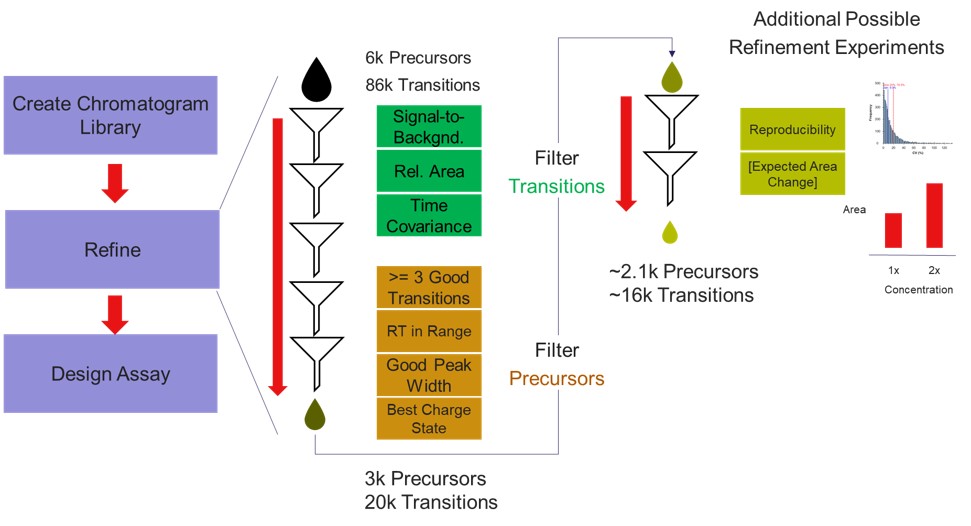

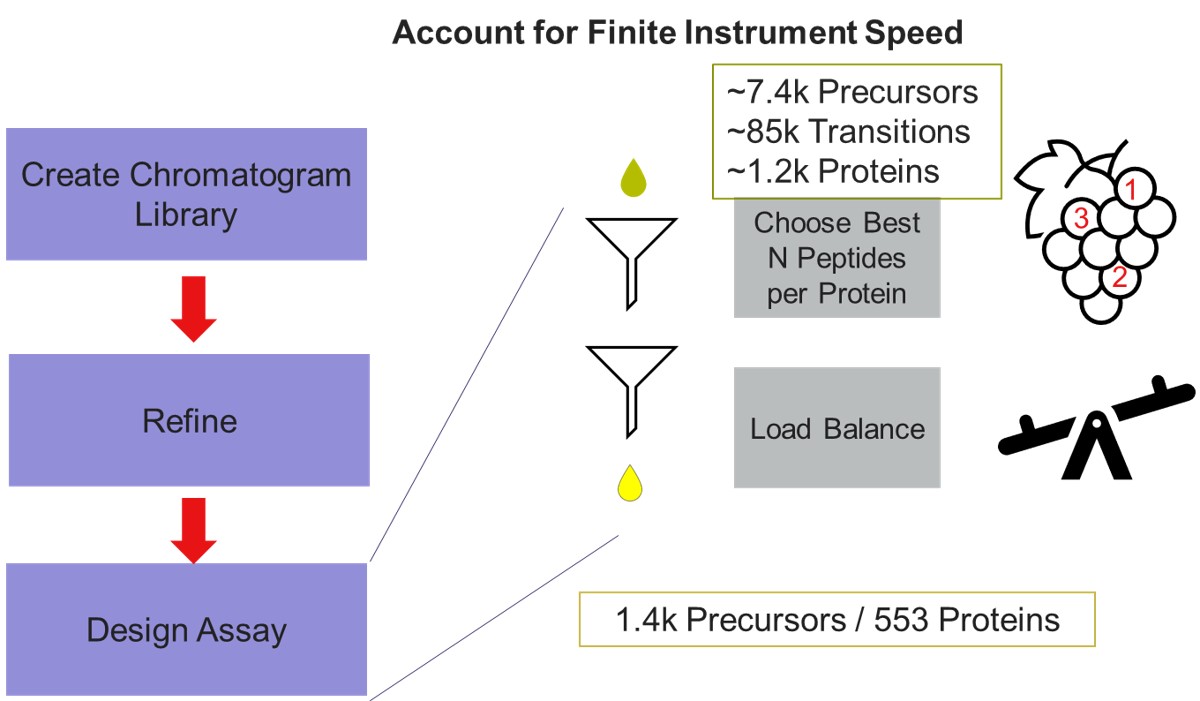

This tutorial will show you how to create a label-free targeted assay from discovery results. As shown in the figure below, there are two main routes to creating this kind of assay. The main difference is whether the discovery data comes from a high resolution accurate mass instrument like Astral, Exploris, or a Tribrid, or from a Stellar. Once we have the discovery results in Skyline, the steps are largely the same. In this tutorial we'll look at an assay created from Stellar MS gas phase fractionation results.

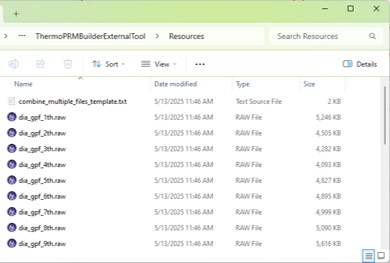

Collect Gas Phase Fractionation Data on Pooled Samples

Overview of the Library Methods